by Rhawn Joseph, Ph.D.

BrainMind.com

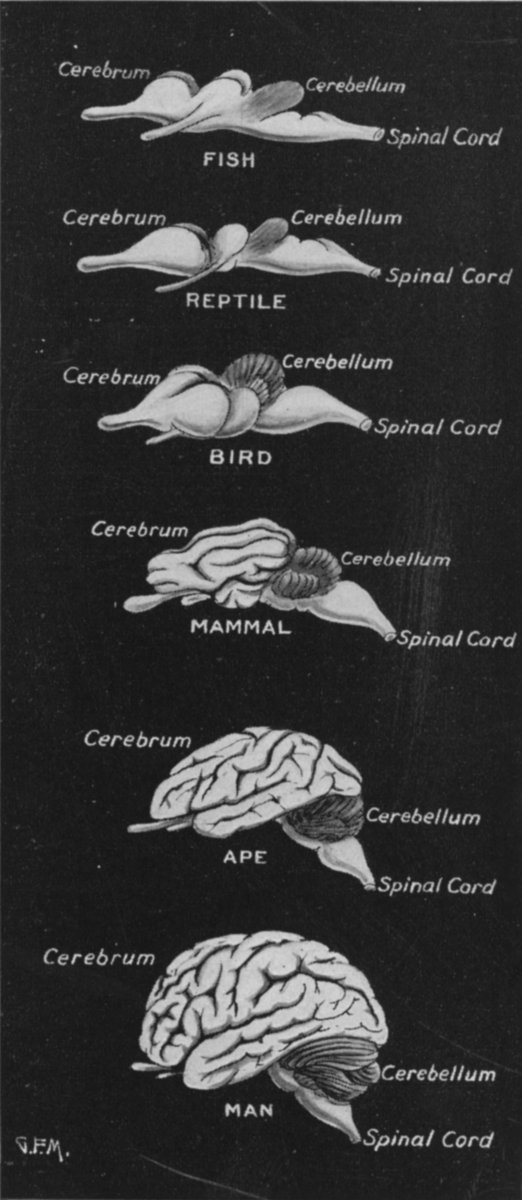

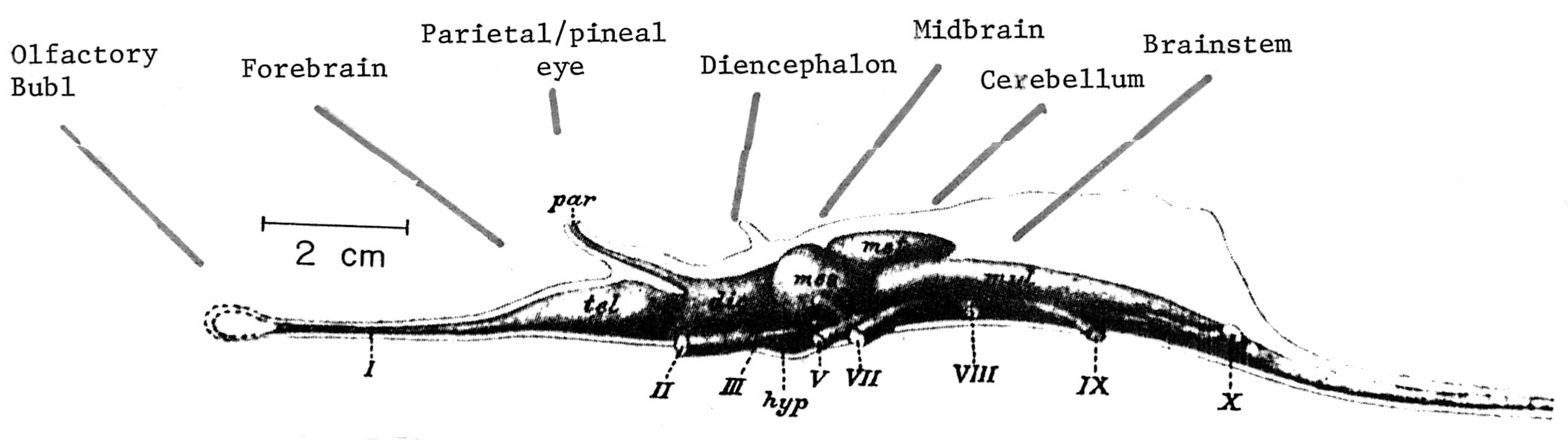

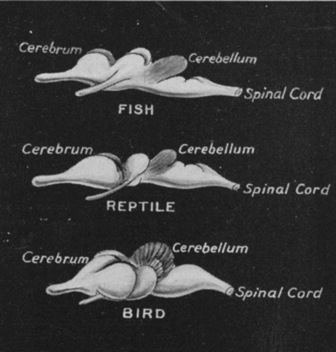

Long before amphibians left the sea to take up life on land and in the air, fish had already learned to detect and analyze sounds made in the water. This was made possible by a structure located along both sides of the fish's body, called the lateral line. The lateral line is very sensitive to vibrations including those made by sound. The mammalian auditory system, however, did not evolve from the lateral line but from a cluster of nerve cells located within the brainstem, the vestibular nucleus 1.

The human brainstem vestibular nucleus is very sensitive to vibrations and gravitational influences and helps to mediate balance and movement, such as in swimming and running 2. Over the course of evolution, it was in the vestibular nucleus and the amygdala that the first neuronal rudiments of "hearing" and the analysis of sound took place. Among all mammals the vestibular nuclei continue to serve this function via the signals they receive from the inner ear, the brain's outpost for detecting certain vibratory waves of molecules and their frequency of occurrence.

Indeed, it is via vibration and tactual sensations that individuals who are deaf are able to "hear" sound and music. This is also why it is possible to feel music, that is, the vibrations emanating from a high-amplitude speaker. Vibrations, of course, are a function and a result of movement, and movement and physical contact are a prime source of sound. In fact, the auditory system appears to have evolved so as to detect sounds which arise when something else moves, e.g. a food source or a predator.

In mammals, humans included, the auditory system remains specialized to perceive sudden, transient sounds, which are typically made by the movement of other animals (such as prey or predators). The auditory system also remains tightly linked with the limbic system as well as with the motor systems, which enable it to alert the rest of the brain to possible danger 3. The amygdala continually samples auditory events so as to detect those which are of emotional and motivational significance.

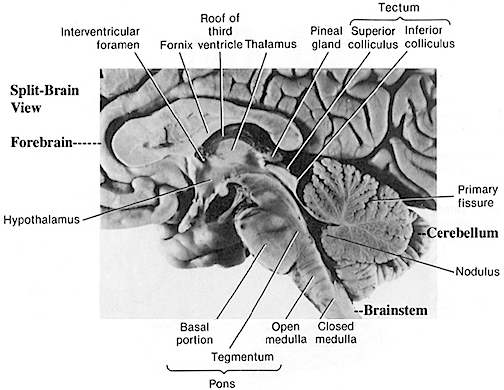

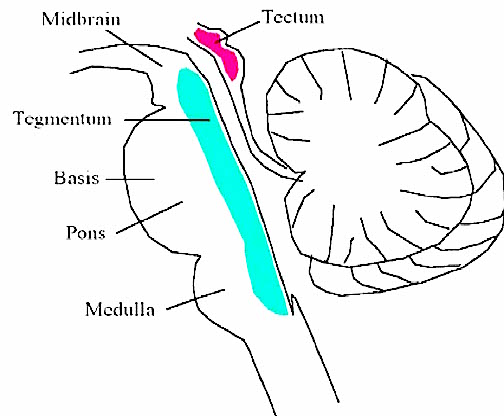

Initially, however, the auditory system also evolved so that organisms could turn toward, orient to, and localize unexpected sound sources. This was made possible by messages transmitted to the midbrain inferior tectum, which has also been called the inferior colliculus. Such sources include things that go bump in the night, such as the settling of the house and the creaking of the floor, all of which can give rise to alarm reactions in the listener (in their amygdala). This is a sense humans and their ancestors have maintained for many millions of years, its main purpose being to maximize survival. In fact, the tectum of the midbrain (its swellings are called the colliculi, or "little hills," and have also been likened to little buttocks), as well as the amygdala of the limbic system, was the main source of auditory analysis two hundred million years before the evolution of the neocortex, some 100 million years ago. It continues to perform this function in modern-day reptiles, amphibians, sharks, and mammals.

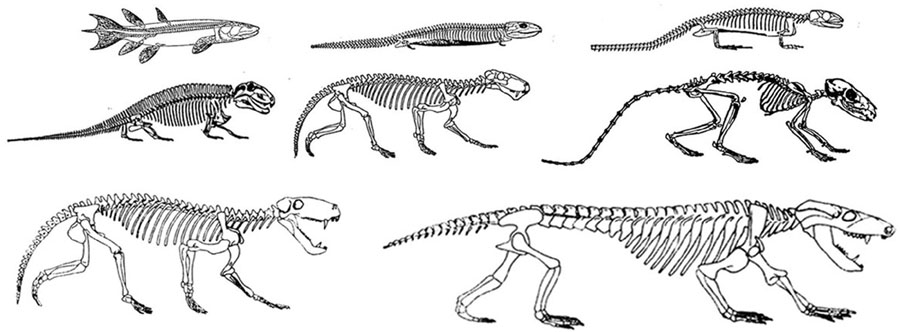

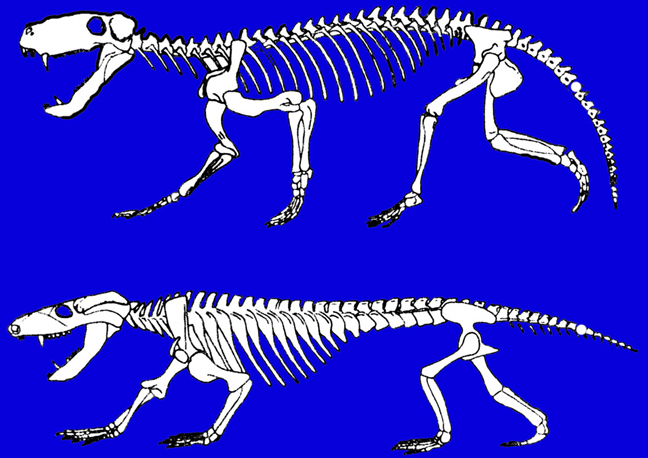

However, it was not until the appearance of the repto-mammals, the therapsids, some 250 million years ago, that anything resembling an inner or even a middle ear first appeared 4. It was probably at this time that sound first came to serve as a means of purposeful and complex communication. Later, somewhere between 70 and 100 million years ago, when the first true mammals (descendants of the therapsids) appeared on the scene, vocalizing assumed an even greater importance in communication 5. This in turn was made possible by further refinements within the inner ear and by the development of auditory-sensitive neocortex in the temporal lobe.

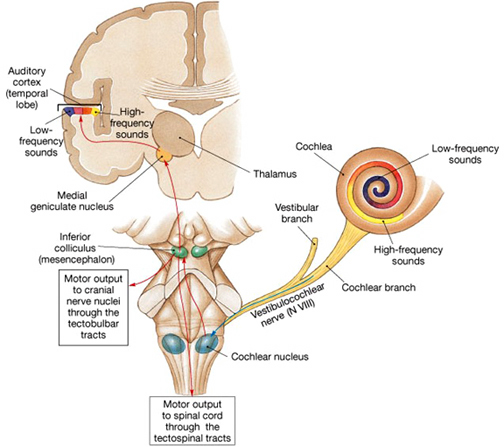

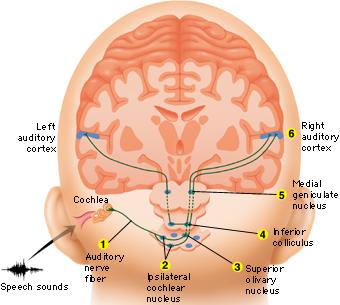

AUDITORY TRANSMISSION FROM THE COCHLEA TO THE TEMPORAL LOBE

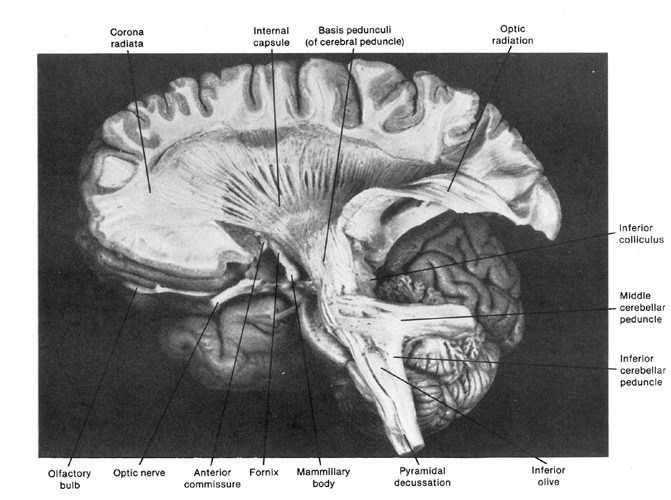

Within the cochlea of the inner ear are tiny hair cells which serve as sensory receptors. These cells are innervated by nerve fibers which form the cochlear division of the 8th cranial nerve, i.e. the auditory nerve. This rope of fibers exits the inner ear and terminates in the brainstem cochlear nucleus, which lies immediately adjacent to, and partly overlaps, the vestibular nucleus from which it evolved.

Among mammals the cochlear nucleus in turn projects auditory information to three different collections of nuclei: the superior olivary complex, the nucleus of the lateral lemniscus, and, as noted, the inferior colliculi of the tectum (the tectum's superior portion, which forms the optic lobe, is concerned exclusively with vision) 6.

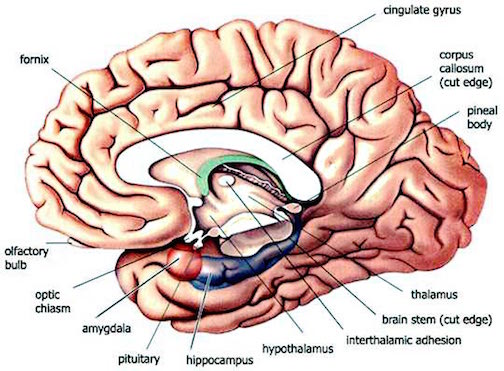

A considerable degree of information analysis occurs in each of these areas before the information is relayed to yet other brain regions, such as the amygdala (which extracts those features which are emotionally or motivationally significant) and the thalamus, where yet further analysis occurs. The thalamus (which sits above the hypothalamus at the center of the brain) is a very ancient sensory integration center which probably first evolved well over 450 million years ago.

Among mammals, auditory signals are transmitted from the thalamus and amygdala to the primary auditory receiving area located in the neocortex of the superior temporal lobe. This primary auditory area is referred to as Heschl's gyrus. Here auditory signals undergo extensive analysis and reanalysis, and simple associations begin to be formed 7. By the time they reach the neocortex, however, auditory signals have already been extensively analyzed by the thalamus, amygdala, and the other ancient structures mentioned above.

Unlike the primary visual and somesthetic areas located within the neocortex of the occipital and parietal lobes which receive signals from only one half of the body or visual space, the primary auditory region receives some input from both ears, and from both halves of auditory space. This is a consequence of the considerable cross-talk which occurs between different subcortical nuclei as information is relayed to and from various regions prior to transfer to the neocortex 8. Predominantly, however, the right ear transmits to the left cerebral neocortex and vice versa.

FILTERING, FEEDBACK & TEMPORAL-SEQUENTIAL REORGANIZATION

The old cortical centers located in the midbrain and brainstem evolved long before the appearance of neocortex and have long been adapted and specialized for performing a considerable degree of information analysis 9. This is evident from observing the behavior of reptiles and amphibians, in which auditory neocortex is either absent or minimally developed. Moreover, many of these old cortical nuclei also project back to each other, such that each subcortical structure might hear and analyze the same sound repeatedly. In this manner the brain is able to heighten or diminish the amplitude of various sounds via feedback adjustment 10. In fact, not only is feedback provided, but the actual order of the sound elements perceived can be rearranged when they are played back. This same process continues at the level of the neocortex, which has the advantage of being the recipient of signals that have already been highly processed and analyzed. It is here and in this manner that language-related sounds begin to be organized and recognized.

Sustained auditory activity. One of the main functions of the primary auditory neocortical receptive area appears to be the retention of sounds for brief time periods (up to a second) so that temporal and sequential features may be extracted and discrepancies in spatial location identified; i.e. so that we can determine from where a sound may have originated 11. This also allows comparisons to be made with sounds that were just previously received and those which are just arriving.
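As a rough illustration only, the brief retention described above can be pictured as a sliding window: each newly arriving sound is compared against whatever arrived within the preceding second, and older sounds are discarded. The class names, the one-second default, and the sound labels below are hypothetical choices for this sketch, not a model taken from the research cited here.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class SoundEvent:
    t: float     # onset time in seconds
    label: str   # hypothetical label for the sound unit


class RetentionBuffer:
    """Toy model: keep sound events for at most `window` seconds (assumed 1.0 s)."""

    def __init__(self, window: float = 1.0) -> None:
        self.window = window
        self.events: deque[SoundEvent] = deque()

    def arrive(self, event: SoundEvent) -> list[SoundEvent]:
        """Register a new event and return the retained events it can be compared with."""
        # Discard anything that arrived more than `window` seconds before this event.
        while self.events and event.t - self.events[0].t > self.window:
            self.events.popleft()
        retained = list(self.events)
        self.events.append(event)
        return retained


buf = RetentionBuffer()
buf.arrive(SoundEvent(0.0, "a"))
buf.arrive(SoundEvent(0.4, "b"))
earlier = buf.arrive(SoundEvent(1.3, "c"))
print([e.label for e in earlier])  # ['b']: "a" fell outside the one-second window
```

The window is a simple first-in, first-out queue because only the most recent second matters; the real duration of retention would vary with the neurons and sounds involved.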

Moreover, via their sustained activity, these neurons are able to prolong (perhaps via a perseverating feedback loop with the thalamus) the duration of certain sounds so that they are more amenable to analysis. Even complex sounds can thus be broken down into components which are then separately analyzed, and sounds can be perceived as sustained temporal sequences. In this manner, sound elements such as consonants, vowels, phonemes, and morphemes can be more readily identified, particularly within the auditory neocortex of the left half of the brain 12. Moreover, via feedback and sustained (vs diminished) activity, the importance and even the order of the sounds perceived can be changed, filtered, or heightened, an extremely important development in regard to the acquisition of language 13.

Indeed, a single phoneme may be scattered over several neighboring units of sound, and a single sound segment may in turn carry several successive phonemes. Therefore a considerable amount of reanalysis, reordering, or filtering of these signals is required so that comprehension can occur 14. These processes presumably occur at both the neocortical and the old cortical level. In this manner a phoneme can be identified, extracted, analyzed, and placed in its proper category and temporal position.

For example, take three sound units, "t-k-a," which are transmitted from the thalamus to the superior temporal auditory receiving area. Via a feedback loop, this primary auditory area can send any one of these units back to the thalamus, which again sends it back to the temporal lobe, thus amplifying the signal or rearranging the order to "t-a-k" or "k-a-t." A multitude of such interactions are in fact possible, so that whole strings of sounds can be arranged in a certain order. Mutual feedback characterizes most other neocortical interactions as well, be it touch, audition, or vision 15.
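The t-k-a example can be sketched as a toy function in which each pass through the loop may amplify one unit and then return the units in a new order. This is a hypothetical illustration of the idea, not a model of real thalamocortical circuitry; the function name, the gain value, and the (label, amplitude) representation are all assumptions.

```python
from __future__ import annotations

Unit = tuple[str, float]  # (sound label, relative amplitude)


def feedback_pass(units: list[Unit], order: list[int],
                  boost: int | None = None, gain: float = 2.0) -> list[Unit]:
    """One toy cortex -> thalamus -> cortex pass.

    boost: index of a unit whose amplitude is multiplied by `gain` (optional).
    order: positions of the (possibly amplified) units in the returned sequence.
    """
    out = list(units)  # copy so the incoming sequence is left untouched
    if boost is not None:
        label, amp = out[boost]
        out[boost] = (label, amp * gain)
    return [out[i] for i in order]


tka = [("t", 1.0), ("k", 1.0), ("a", 1.0)]
tak = feedback_pass(tka, order=[0, 2, 1])           # t-a-k
kat = feedback_pass(tka, order=[1, 2, 0], boost=2)  # k-a-t, with "a" amplified
print("-".join(label for label, _ in tak))  # t-a-k
print(kat)  # [('k', 1.0), ('a', 2.0), ('t', 1.0)]
```

Feeding the output of one pass into another would model repeated trips around the loop, each able to amplify or reorder further.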

Via all these interactions, sounds can be repeated, their order can be rearranged, and the amplitude of specific auditory signals can be enhanced while others are filtered out. It is in this manner that fine tuning of the nervous system occurs, so that specific signals are attended to, perceived, processed, committed to memory, and so on. Indeed, a massive degree of filtering occurs throughout the brain, not only for sound but for visual and tactual information as well. Moreover, the same process occurs when organizing words for expression.

This ability to perform so many operations on individual sound units has in turn greatly contributed to the development of human speech and language. For example, hearing human speech requires that temporal resolutions occur at great speed so as to sort through the overlapping and intermixed signals being perceived. This requires that these sounds be processed in parallel, or stored briefly and then replayed in a different order so that discrepancies due to overlap in sounds can be adjusted for 16, and this is what occurs many times over via the interactions of the old cortex and the neocortex. Similarly, when speaking or thinking, sound units must also be arranged in a particular order so that what we say is comprehensible to others and so that we may understand our own verbal thoughts.

HEARING SOUNDS & LANGUAGE

Humans are capable of uttering thousands of different sounds, all of which are easily detected by the human ear. And yet, although our vocabulary is immense, human speech actually consists of about 12-60 units of sound, depending, for example, on whether one is speaking Hawaiian or English. The English vocabulary consists of several hundred thousand words which are based on combinations of just 45 different sounds.

Animals, too, are capable of a vast number of utterances. In fact, monkeys and apes employ between 20 and 25 units of sound, whereas a fox employs 36. However, these animals cannot string these sounds together so as to create a spoken language. This is because most animals tend to use only a few units of sound at a time, and which units they use varies with their situation, e.g. lost, afraid, playing.

Humans combine these sounds to make a huge number of words. In fact, employing only 13 sound units, humans are able to combine them to form five million word sounds 17.
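The text does not say how the five-million figure was reached. One reading that lands in that range, offered here purely as an assumption, counts ordered strings of the 13 units with repetition allowed: strings exactly six units long number 13 to the sixth power, and strings of one to six units number slightly more. The function name below is hypothetical.

```python
def count_strings(n_units: int, max_len: int) -> int:
    """Ordered strings of length 1..max_len built from n_units, repeats allowed."""
    return sum(n_units ** k for k in range(1, max_len + 1))


print(13 ** 6)               # 4826809 strings of exactly six units
print(count_strings(13, 6))  # 5229042 strings of one to six units
```

Because order matters ("k-a-t" differs from "t-a-k"), the count grows geometrically with string length, which is why a small inventory of sounds can yield millions of candidate word sounds.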

PHONEMES

The smallest unit of speech sound that distinguishes one word from another is referred to as a phoneme. For example, p and b, as in pet vs bet, are phonemes. When phonemes are strung together as a unit they in turn comprise morphemes. A morpheme such as "cat" is composed of three phonemes, "k-a-t." Hence, phonemes must be arranged in a particular temporal order to form a morpheme.

Morphemes, in turn, are the smallest meaningful units of sound, including those used to signal relationships, such as "-er" as in "he is older than she." All languages have rules that govern the number of phonemes, their relationships to morphemes, and how morphemes may be combined so as to produce meaningful sounds 18.

Each phoneme is composed of multiple frequencies, which are in turn processed in great detail once they are transmitted to the superior temporal lobe. In fact, the primary auditory area is tonotopically organized, such that similar auditory frequencies are analyzed by cells found in the same location of the brain 19. For example, as one moves across the temporal lobe, the frequencies to which cells respond become progressively higher in one direction and progressively lower in the opposite direction.
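A toy tonotopic map makes the idea concrete: sites along one axis are assigned best frequencies that rise steadily in one direction, so nearby frequencies land on the same or neighboring sites. The logarithmic spacing, the 20 Hz to 20 kHz range, the number of sites, and the function names are assumptions for illustration, not measurements of human cortex.

```python
import math


def tonotopic_map(n_sites: int, f_low: float = 20.0, f_high: float = 20_000.0) -> list[float]:
    """Best frequency (Hz) for each of n_sites positions; assumed to rise logarithmically."""
    ratio = f_high / f_low
    return [f_low * ratio ** (i / (n_sites - 1)) for i in range(n_sites)]


def site_for(freq: float, sites: list[float]) -> int:
    """Index of the site whose best frequency is closest to freq on a log scale."""
    return min(range(len(sites)), key=lambda i: abs(math.log(sites[i] / freq)))


sites = tonotopic_map(4)          # roughly 20, 200, 2000, 20000 Hz
print([round(f) for f in sites])  # [20, 200, 2000, 20000]
print(site_for(440, sites), site_for(450, sites), site_for(5000, sites))  # 1 1 2
```

Two similar tones (440 and 450 Hz) map to the same site, while a much higher tone maps further along the axis, mirroring the "higher in one direction" layout described above.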

TEMPORAL-SEQUENTIAL & LINGUISTIC SENSITIVITY

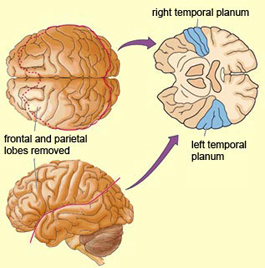

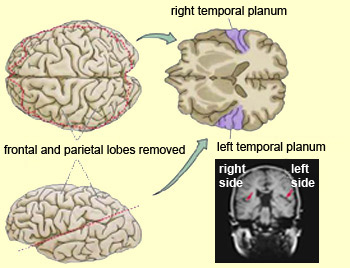

Although the auditory regions of both cerebral hemispheres are capable of discerning and extracting temporal-sequential rhythmic acoustics, the left temporal lobe contains a greater concentration of neurons specialized for this purpose, and the left brain is clearly superior in this capacity 20.

For example, the left hemisphere has been repeatedly shown to be specialized for sorting, separating, and extracting, in a segmented fashion, the phonetic and temporal-sequential or linguistic-articulatory features of incoming auditory information so as to identify speech units. It is also more sensitive than the right hemisphere to rapidly changing acoustic cues, be they verbal or non-verbal. Moreover, via dichotic listening tasks (where sounds can be selectively transmitted to the right vs left ear), the right ear (left temporal lobe) has been shown to be dominant for the perception of real words, word lists, numbers, backwards speech, Morse code, nonsense syllables, the transitional elements of speech, rhymes, single phonemes, consonants, and consonant-vowel syllables 21.

In general, there are two large classes of speech sounds: consonants and vowels. Consonants by nature are brief and transitional and have identification boundaries which are sharply defined. These boundaries enable different consonants to be discerned accurately 22. Vowels are more continuous in nature and in this regard, the right half of the brain plays an important role in their perception.

Consonants are more important in regard to left brain speech perception. This is because they are composed of segments of rapidly changing frequencies, which include the duration, direction, and magnitude of sound segments interspersed with periods of silence. These transitions occur in 50 msec or less, which in turn requires that the left half of the brain take responsibility for perceiving them.

In contrast, vowels consist of slowly changing or steady frequencies, with transitions taking 350 msec or more. In this regard, vowels are more like natural environmental sounds, which are more continuous in nature, even those which are brief, such as the snap of a twig. Vowels are also more likely to be processed and perceived by the right half of the brain, though the left brain also plays a role in their perception 23.

The differential involvement of the right and left halves of the brain in processing consonants and vowels is a function of their neuroanatomical organization and of the fact that the left brain is specialized for dealing with sequential information. Moreover, the left brain is able to make fine temporal discriminations with intervals between sounds as small as 50 msec, whereas the right brain needs 8-10 times longer and has difficulty discriminating the order of sounds if they are separated by less than 350 msec.
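The two thresholds quoted above can be restated as a toy rule: two sounds separated by a given gap can be put in order by the left hemisphere from about 50 msec, and by the right only from about 350 msec. The sketch simply encodes those figures; the constant and function names are hypothetical, and the sharp cutoffs are a simplification.

```python
LEFT_MIN_GAP_MS = 50    # shortest orderable gap quoted for the left hemisphere
RIGHT_MIN_GAP_MS = 350  # shortest orderable gap quoted for the right hemisphere


def hemispheres_able_to_order(gap_ms: float) -> set[str]:
    """Toy rule: which hemispheres could order two sounds separated by gap_ms."""
    able = set()
    if gap_ms >= LEFT_MIN_GAP_MS:
        able.add("left")
    if gap_ms >= RIGHT_MIN_GAP_MS:
        able.add("right")
    return able


for gap in (30, 50, 200, 400):
    print(gap, sorted(hemispheres_able_to_order(gap)))
# 30 []
# 50 ['left']
# 200 ['left']
# 400 ['left', 'right']
```

Under this rule, the rapid (50 msec or shorter) transitions that distinguish consonants fall within the left hemisphere's range only, while slower, vowel-like transitions of 350 msec or more are within reach of both.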

Hence, consonants are perceived in a wholly different manner from vowels. Vowels are subject to "continuous perception" and are processed by the right half of the brain, whereas consonants are more a function of "categorical perception" and are processed in the left half of the cerebrum. In fact, the left temporal lobe acts on both vowels and consonants during perception, sorting these signals into distinct patterns of segments via which these sounds become classified and categorized.

Nevertheless, be they processed by the right or left brain, both vowels and consonants are significant in speech perception. Vowels are particularly important when we consider their significant role in communicating emotional status and intent.

SPATIAL LOCALIZATION, ATTENTION & ENVIRONMENTAL SOUNDS

As noted, auditory neurons located in the neocortex of the temporal lobe receive input from both ears. Hence, the primary auditory area, in conjunction with the inferior auditory tectum, plays a significant role in orienting to and localizing the source of various sounds, for example, by comparing time and intensity differences in the neural input from each ear. A sound arising from one's right will reach the right ear sooner, and sound louder there, than it will at the left ear.
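The timing cue can be estimated with Woodworth's spherical-head approximation, a standard textbook formula that is not part of the source: for a distant source at azimuth θ, the interaural time difference is roughly (r/c)(θ + sin θ), where r is the head radius and c the speed of sound. The head radius below is an assumed typical adult value, and the function name is hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C
HEAD_RADIUS = 0.0875    # m, assumed typical adult head radius


def itd_seconds(azimuth_deg: float, r: float = HEAD_RADIUS, c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference for a distant source (Woodworth approximation).

    azimuth_deg: 0 = straight ahead, +90 = directly right, -90 = directly left.
    The formula is meant for |azimuth_deg| <= 90. A positive result means the
    sound reaches the right ear first; a negative one, the left ear.
    """
    theta = math.radians(azimuth_deg)
    return (r / c) * (theta + math.sin(theta))


for az in (0, 30, 90, -90):
    print(az, round(itd_seconds(az) * 1e6), "microseconds")
```

With these assumed values the largest difference, for a source directly to one side, comes to roughly 0.66 msec; a source straight ahead produces no timing difference, which is why intensity and other cues also matter.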

Among mammals, a considerable number of auditory neurons respond vigorously to sounds from a particular location 24. That is, just as certain parietal and motor neurons are responsible for only select regions of the body, many auditory neurons are selectively sensitive to certain locations, and to certain sounds, including human screams and cries. Moreover, some of these neurons become excited only when the subject looks at the source of the sound, which may explain why some people feel they cannot hear as well when their eyeglasses are off. Hence, these neurons act so that the source, identity, and location of a sound may be ascertained and fixated upon. Studies of humans who have sustained brain injuries indicate that the right temporal lobe is more involved than the left in discerning location 25 and in perceiving non-verbal sounds.

As noted, certain cells in the auditory area are highly specialized and will respond only to certain meaningful vocalizations. In this regard they seem to be tuned to respond only to specific auditory parameters so as to identify and extract certain salient features. These are called feature detector cells, much like those in the inferior temporal lobe which respond only to certain forms. For example, some cells will respond only to cries of alarm and others only to sounds suggestive of fear or indicating danger.

Although the left temporal lobe appears to be more involved in extracting certain linguistic features, as noted, the right temporal region is more adept at identifying and recognizing non-verbal environmental acoustics (e.g. wind, rain, animal noises), the prosodic-melodic nuances of speech, sounds which convey emotional meaning, and most aspects of music. Indeed, tumors in, or electrical stimulation of, the superior temporal gyrus, the right temporal lobe in particular, can result in musical hallucinations. Patients frequently report that they hear the same melody over and over. In some instances patients have reported the sound of singing voices and individual instruments 26. Conversely, strokes or complete surgical destruction of the right temporal lobe significantly impair the ability to name or recognize melodies and musical passages. Such injuries can also disrupt time sense, the perception of timbre and loudness, and tonal memory.

Indeed, the right temporal lobe's ability to perceive and recognize musical and environmental sounds, coupled with its sensitivity to the location of sound, no doubt provided great survival value to our ancient ancestors. That is, in response to a specific sound (e.g. a creeping predator), one is immediately able to identify, locate, and fixate upon the source and thus take appropriate action. Of course, even modern humans rely upon the same mechanisms to escape being run over by cars when walking across streets or riding bicycles, or to ascertain and identify approaching individuals.

CORTICAL DEAFNESS

In some instances, such as after a middle cerebral artery stroke, the primary auditory receiving area of the right or left cerebral hemisphere may be destroyed. This results in a disconnection syndrome such that sounds relayed from the thalamus, amygdala, or colliculi cannot be received or analyzed by the temporal lobe. In some cases, however, the strokes may be bilateral, such that both auditory areas are injured. When this occurs, sounds can no longer be heard in any meaningful sense, and the patient is said to be suffering from cortical deafness 27.

However, sounds continue to be processed by the thalamus, colliculi, and so on. Hence the ability to hear sounds per se is retained. Since the sounds which are heard are not received neocortically and thus cannot be transmitted to the adjacent association areas, they become stripped of meaning. That is, meaning cannot be extracted or assigned by the neocortex, which is the seat of our language-dependent conscious mind. Rather, only differences in intensity are discernible. An individual so affected would lose the ability to recognize not only environmental sounds but also the sounds of speech.

Patients who suffer cortical deafness cannot respond to questions, do not show startle responses to loud sounds, lose the ability to discern the melody of music, cannot recognize speech or environmental sounds, and tend to experience the sounds they do hear as distorted and disagreeable, e.g. buzzing and roaring, or like the banging of tin cans.

Individuals who are cortically deaf are not aphasic. They can read, write, speak, and comprehend pantomime, and they are fully aware of their deficit. Nevertheless, although they are not aphasic per se, their speech is sometimes noted to be hypophonic and contaminated by occasional literal paraphasias, in which speech sounds are substituted or produced in the wrong order.

More commonly, a destructive lesion may be limited to the primary auditory receiving area of just the right or left cerebral hemisphere. Patients with lesions restricted to a single hemisphere are not considered cortically deaf. However, when the auditory receiving area of the left temporal lobe is destroyed, the patient suffers from a condition referred to as pure word deafness. If the lesion is in the right temporal receiving area, the disorder is described as an auditory agnosia 28, i.e. an inability to recognize or hear environmental sounds.

PURE WORD DEAFNESS

With a destructive lesion involving the left primary auditory receiving area, Wernicke's auditory association area becomes disconnected from almost all sources of acoustic input, and patients are unable to recognize or perceive the sounds of language, be it sentences, single words, or even single letters. All other language abilities are preserved, including reading comprehension, writing, and expressive speech, as is the ability to hear music and environmental-emotional sounds. This is because these capacities depend on the functional integrity of other brain areas.

Due to sparing of the right temporal region, the ability to recognize musical and environmental sounds is preserved. If someone were to knock on the door, this would be heard without difficulty. If instead they yelled, "is anybody home?" only an indiscernible noise would be perceived.

However, the ability to name or verbally describe these sounds is impaired, due to disconnection and the inability of the right hemisphere to talk. That is, these sounds, although recognized as sounds, cannot be transmitted to Wernicke's area, which is located in the opposite half of the brain. Patients can nevertheless indicate what produced a sound without words, for example by drawing a picture of it.

Pure word deafness, of course, also occurs with bilateral lesions, in which case environmental sound recognition is also affected. In these instances the patient is considered cortically deaf.

Pure word deafness, when due to a unilateral lesion of the left temporal lobe, is partly a consequence of an inability to extract temporal-sequential features from incoming sounds. Hence, linguistic messages cannot be recognized. Pure word deafness can be partly overcome if the patient is spoken to extremely slowly. The same is true of those with Wernicke's aphasia.

AUDITORY AGNOSIA

Mary was home sitting at her desk when she began feeling dizzy, then queasy, and then a tremendous buzzing filled her left ear. For a brief moment, she thought she heard a weird crackling sound, and then it was gone. Returning to her work, she bent diligently over the papers when the dizzy, sick feeling and the buzzing began again, and then, just as suddenly, they were gone again. She sighed and tried to concentrate but was bothered by the development of a tremendous headache.

Later, glancing at the clock, she furrowed her brow in irritation as she realized that the phone call she had been expecting had not come. However, as she pondered the significance of this, again came the buzzing, and then she noticed that the light on her answering machine was blinking. Somebody had left a message! How could that be? she wondered. She had been sitting by the phone for the last hour and no one had called. Pressing the button, she turned up the volume and listened intently to the message but couldn't make out the voice. Was somebody playing tricks on her? The voice claimed to be Dave, whose call she had been expecting, but it sure didn't sound like him. Even the "I love you" at the end sounded phony, like some kind of robot. Again her thoughts were interrupted by that stupid buzzing sound, and then, to her surprise, she watched as her answering machine switched on. Quickly turning up the volume, she was shocked to hear a recorded voice that did not sound at all like hers, but like a female robot, and then the robot voice of Dave came on the line, claiming to be angry that she wasn't home. But it didn't sound like Dave, and the voice didn't even sound angry, just loud. "What's wrong with me?" she said out loud, feeling ill, and then froze. That wasn't the sound of her voice!

An individual with cortical deafness suffers from a generalized auditory agnosia involving words and non-linguistic sounds. Agnosia in these instances means "not knowing," and someone who suffers from an agnosagnosia does not know that they do not know.

Usually, an auditory agnosia with preserved perception of language occurs with lesions restricted to the right temporal lobe. In these instances, an individual loses the capability to correctly discern or even hear environmental sounds (e.g. birds singing, doors closing, keys jangling, telephones ringing), or to recognize emotional-prosodic speech and music. As such, speech may sound like a monotone, or melody may be distorted and displeasing. However, if the condition is severe, the person may not realize that she no longer hears sounds properly, simply because the part of the brain which would notice abnormalities in environmental and melodic-emotional sounds has been destroyed and is unable to alert her to the problem. This is due to a disconnection, and in such instances she suffers from an agnosagnosia: she doesn't know that she doesn't know, just as a wolf or a chimpanzee would not know that it doesn't know how to read or write, as it lacks the brain areas which would alert it to this deficit.

As such, these problems are not likely to come to the attention of a physician unless accompanied by secondary emotional difficulties or if the stroke damages tissue beyond the right temporal lobe and disrupts motor functioning and the patient suffers a paresis. With a more restricted right temporal stroke, most individuals with this disorder, if they are completely agnosic, might not know that they have a problem and thus would not complain. If they or their families notice (for example, if a patient does not respond to a knock on the door) the likelihood is that the problem will be attributed to faulty hearing or even forgetfulness.

Mary had replayed the tape several times and had finally decided that something was definitely wrong with her machine. However, sometime later as she stood up to retrieve some aspirin for that terrible headache she was rudely shocked to see Dave walking into the room.

"Don't you believe in knocking?" she asked angrily. "What do you mean sneaking up on me like this?"

Dave stared at her with tightly pressed, downturned lips. It was clear from his expression that he was upset. "Sneaking up?" he replied loudly. "I pounded several times on your door and rang the bell."

"You did not!" she interrupted. "And what's wrong with your voice? You sound strange." Suddenly she felt frightened. Even her own voice didn't sound right.

"You're the one ignoring my calls and refusing to answer the door," he replied, giving her a funny look.

Mary stepped back, feeling frightened. First the phone, and now this. She stared at him feeling increasingly upset and confused. Although it looked like Dave, he sure didn't sound like Dave, and Dave would never play tricks on her like this. And what about her own voice and the phone? What was going on? What was he up to? "Get out!" she suddenly demanded, as she reached for the phone and placed it next to her ear. Pulling the phone away, she momentarily forgot about Dave. What happened to the dial tone? What was that weird noise?

Because individuals suffering from auditory agnosia may also have difficulty discerning emotional-melodic nuances, they are likely to misperceive and fail to comprehend a variety of paralinguistic social-emotional messages. This includes difficulty discerning what others may be implying, or appreciating emotional and contextual cues, including variables such as sincerity or mirthful intonation. Hence, a host of behavioral difficulties may arise as patients become upset and fearful, and as they realize that something is wrong. As such, they may become paranoid, fear they are going crazy, or become convinced that loved ones have been replaced by impostors.

For example, a patient suffering from a right temporal lobe injury and auditory agnosia may complain that his wife no longer loves him, and that he knows this from the sound of her voice, which seems to him to be lacking in emotion. In fact, a patient may notice that the voices of friends and family, and even his own voice, sound different in some manner, which, when coupled with difficulty discerning nuances such as humor and friendliness, may lead to the development of paranoia and what appears to be delusional thinking. Unless the condition is appropriately diagnosed, the patient's problem is likely to feed upon and reinforce itself and grow more severe.

It is important to note that rather than being completely agnosic or word deaf, patients may suffer from only partial deficits. In these instances they may seem to be hard of hearing, frequently misinterpret what is said to them, or slowly develop related emotional difficulties.

THE AUDITORY ASSOCIATION AREAS

Following the analysis performed in the primary auditory receiving area, auditory information is transmitted to the middle temporal lobe (which in the left brain maintains neurons which store linguistic-auditory symbols, words, and names) and to the immediately adjacent auditory association area 29. It is in this region that complex auditory associations are performed and that the comprehension of speech or of environmental-melodic, emotional sounds occurs. This region also receives visual associations from the occipital lobe.

This middle temporal auditory and auditory-visual association neocortex is intimately interlinked with Wernicke's area which in turn has become coextensive with the angular gyrus and the inferior parietal lobule. It is in Wernicke's area where the units of sound are strung together in the form of words and sentences and are recognized as having specific meanings and as belonging to certain simple categories 29. Hence, verbal comprehension takes place in Wernicke's and the middle temporal lobe area just as gestural comprehension takes place in the superior-inferior parietal lobe.

The middle temporal and Wernicke's areas, and the corresponding region in the right hemisphere are not merely auditory association areas as they also receive a convergence of fibers from the tactual and visual association cortices. They also receive input from the contralateral auditory association area via the corpus callosum and anterior commissure (large bundles of axonal nerve fibers which interconnect and link the two halves of the brain). Hence, a considerable number of multimodal linkages are made possible within the auditory association areas. Indeed, the richness of language and the ability to utilize a multitude of words and images to describe a single object or its use is very much dependent on these interconnections.

RECEPTIVE APHASIA

Caroline's grown children had at first thought that maybe she was becoming hard of hearing because she so frequently misunderstood what they were saying. Then they worried that she might be losing her memory or even becoming senile, because when they asked her a question or reminded her to keep an appointment or to do some task, she would sometimes just stare at them with a perplexed look on her face. Worse, sometimes she answered questions that they hadn't asked. Once, when they wanted to know if Roger had come by, she answered "fine, fine," as if they had asked how she was instead.

She seemed so reluctant to talk and when she did, it often didn't make any sense either. She had even quit reading the newspaper and had not opened any of the books she had been given on her birthday, which was very unusual since she loved to read.

Finally, since she seemed so depressed and confused and was increasingly acting like she did not understand anything that was being said to her, they brought her to their doctor who ordered an MRI. It was then that they realized a tumor was growing in the superior temporal lobe of the left half of her brain.

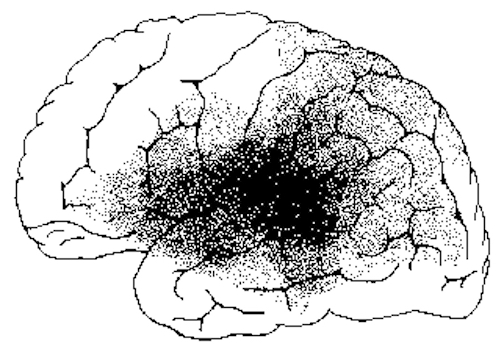

When the left auditory association area is damaged, the result is severe receptive aphasia, i.e., Wernicke's aphasia. Individuals with Wernicke's aphasia, in addition to severe comprehension deficits, usually suffer abnormalities involving expressive speech, reading, writing, repetition, and word finding 30. This is because Wernicke's receptive language area acts to retrieve, associate, and provide words, names, and the auditory equivalents of whatever is perceived, seen, or felt, as well as to assist in their temporal organization.

When a person with this disorder attempts to read a word, she is able to see it but, due to destruction of this tissue, is unable to analyze the visual symbols linguistically, and thus cannot comprehend them. She can no longer read.

Patients with severe forms of Wernicke's aphasia, in addition to other impairments, are necessarily "word deaf." As these individuals recover from their aphasia, word deafness is often the last symptom to disappear. Even in less severe cases, they are unable to perceive spoken words in their correct order, cannot identify the pattern of presentation, become easily overwhelmed by the sounds of speech, and must be spoken to very slowly.

IMPAIRED PERCEPTION OF TEMPORAL ORDER

Frequently, disturbances of linguistic comprehension are due to an impaired capacity to discern the individual units of speech and their temporal order 31. Sounds must be separated into discrete, interrelated, linear units, or they will be perceived as a blur or even as a foreign language. Hence, a patient with Wernicke's aphasia may perceive a spoken phrase such as "the pretty little kitty" as "the lipkitterlitty." Such patients also may have difficulty establishing the boundaries of phonetic (confusing "love" with "glove") and semantic (cigarette for ashtray) auditory information.

Many receptive aphasics, be it due to brain tumors or stroke, can comprehend frequently used words but have increasing difficulty with those less commonly heard. Thus loss of comprehension is not an all-or-none phenomenon. They will usually have the most difficulty understanding relational or syntactical structures, including the use of verb tense, possessives, and prepositions. However, by speaking slowly and by emphasizing the pauses between each individual word, comprehension can be modestly improved. Nevertheless, in severe cases, comprehension is all but completely abolished, though the ability to perceive environmental sounds and emotional nuances will be preserved.

John scrunched his eyes closed for a brief moment to fight off the headache and the sick feeling in his stomach. Opening his eyes, he stared back into the newspaper and tried to read, but something was wrong. Closing his eyes again, he slowly put down the paper, which for some reason sounded awfully loud, so loud in fact that it startled him, causing him to almost knock over his coffee. The clatter of the cup made his headache worse, almost unbearable. Closing then opening his eyes, he stared at the almost empty cup and then looked up and met the eyes of the heavyset waitress, who was staring at him with a bemused expression. She held a coffee pot in her hand and was sliding some pie toward him.

"You did order pie, didn't you?" she asked.

John just stared at her, trying to figure out what she wanted, and then glanced at the pie, frowned, and shook his head. The waitress shrugged and walked away. It was then that Roger, his partner, looked up from the paper and noticed that John looked angry. "You OK?" he asked.

John shook his head, feeling irritable. "Piezgrazing and the waitress koasted black coffing I said to that drinker roze coffin..."

"What?" Roger asked, staring at him incredulously.

John glanced at the pie and then his empty coffee cup. "Coffing over on thuz the cup with pizelong she said but I'll be over if you want to pear peering, no way am I guzin, like the fat pig..."

Although comprehension has been lost, patients with damage to Wernicke's area are usually still capable of talking (due to preservation of Broca's area). However, they are completely unable to understand what they are saying. Moreover, since Wernicke's area has been destroyed, they do not know that what they are saying no longer makes sense, a condition referred to earlier as anosognosia, i.e., not knowing that one does not know.

Indeed, most of what they say is nonsensical and incomprehensible to a listener, who instead may conclude that the person has suffered a nervous breakdown and is schizophrenic and psychotic.

The reason the speech of a person with Wernicke's receptive aphasia no longer makes sense is that Wernicke's area also acts to code linguistic stimuli for expression prior to their transmission to Broca's expressive speech area. Hence, expressive speech becomes severely abnormal: lacking in content, containing neologistic distortions (e.g., "the razgabin"), and/or characterized by non sequiturs and by literal (sound substitution) and verbal paraphasic (word substitution) errors. Their speech is also characterized by a paucity of nouns and verbs and by the omission of pauses and sentence endings. This is because the correct words and linguistic concepts cannot be organized and provided by Wernicke's region, which has been destroyed. As such, what they say is often incomprehensible.

Presumably because the coding mechanisms involved in organizing what they are planning to say are the same mechanisms that decode what they hear, expressive as well as receptive speech becomes equally disrupted. This is also why even those with normal brains are unable to talk and simultaneously comprehend what others are saying.

The spontaneous speech of Wernicke's aphasics is also often characterized by long, seemingly complex, grammatically correct sentences, the grammatical stamp being applied by the inferior parietal lobule and Broca's area. Sometimes their speech is in fact hyperfluent: they speak at an increased rate and seem unable to bring sentences to an end, with words being unintelligibly strung together. Hence, this disorder has also been referred to as fluent aphasia, for although they can talk, they make absolutely no sense whatsoever. The reason a person can still speak is that Broca's area and the fiber pathway linking it to the inferior parietal lobule, the middle temporal lobe, and other areas where word sounds, names, phonemes, and morphemes are stored are still intact. When the disorder is severe, their speech deteriorates into jargon aphasia, such that no meaningful communication can be made.

Among those with Wernicke's receptive aphasia, one gauge of comprehension is how normal their language use remains. That is, if they can say or repeat only a few words normally, it is likely that they can comprehend only a few words as well. In addition, the ability to write may be preserved, although what is written is usually completely unintelligible, consisting of jargon and neologistic distortions. Copying written material is possible, although it is also often contaminated by errors.

ANOSOGNOSIA

As noted, although the speech of a patient with Wernicke's aphasia is often abnormal or bizarre, patients with severe dysfunction do not realize that what they say is meaningless. Moreover, they may fail to comprehend that what they hear is meaningless as well. Nor can you tell them, since they are unable to comprehend. This is because when Wernicke's area is damaged, there is no other region left to analyze the linguistic components of speech and language, and so the rest of the brain cannot be alerted to the patient's disability. They don't know that they don't know or that they don't understand, because these linguistic functions are not ones the remaining brain regions are concerned with. Not being informed otherwise, the rest of the brain assumes that what is being said is normal. Again, this is due to disconnection.

EMOTIONAL AWARENESS

Presumably as a consequence of loss of comprehension, these patients may display euphoria or, in other cases, paranoia, as there remains a non-linguistic or emotional awareness that something is not right 31. Emotional functioning and affective comprehension remain somewhat intact, though they are sometimes disrupted by erroneously processed verbal input. That is, the patient's right hemisphere continues to respond to signals generated by others, as well as by the left half of the patient's own brain, even though those signals are abnormal. Similarly, the ability to read and write emotional words (as compared to non-emotional or abstract words) is also somewhat preserved among aphasics. This is because the right hemisphere is intact and is dominant for all aspects of emotion.

Since these paralinguistic and emotional features of language are analyzed by the intact right cerebral hemisphere, sometimes the aphasic individual is able to grasp in general the meaning or intent of a speaker, although verbal comprehension is reduced. This in turn enables him to react in a somewhat appropriate fashion when spoken to. That is, he may be able to discern not only that a question is being asked, but that concern, anger, fear, and so on are being conveyed. Unfortunately, this also makes him appear to comprehend much more than he is capable of.

"SCHIZOPHRENIA"

John was finally admitted to a psychiatric hospital after both his physician and the psychiatrist he had been referred to decided that he had suffered a "nervous breakdown." Roger still couldn't believe it. John was always the most emotionally stable individual he had ever known and then, out of the blue, he goes crazy and develops what those doctors call "paranoid schizophrenia."

Because these individuals display unusual speech, loss of comprehension, a failure to realize that they no longer comprehend or "make sense" when speaking, as well as paranoia and/or euphoria, they are at risk of being misdiagnosed as psychotic or as suffering from a formal thought disorder, i.e., "schizophrenia." Indeed, individuals with abnormal left temporal lobe functioning sometimes behave and speak in a "schizophrenic-like" manner 32.

According to Benson 33, "those with Wernicke's aphasia often have no apparent physical or elementary neurological disability. Not infrequently, the individual who suddenly fails to comprehend spoken language and whose output is contaminated with jargon is diagnosed as psychotic. Patients with Wernicke's aphasia certainly inhabited some of the old lunatic asylums and probably are still being misplaced."

Conversely, it has frequently been reported that "schizophrenics" often display significant abnormalities of speech processing, such that the semantic, temporal-sequential, and lexical aspects of speech organization and comprehension are disturbed and deviantly constructed. Significant similarities between schizophrenic discourse and aphasic abnormalities have also been reported 34. In this regard, it is often difficult to determine what a schizophrenic individual may be talking about. These same psychotic individuals have sometimes been known to complain that what they say often differs from what they intended to say. Temporal-sequential (i.e., syntactical) abnormalities have also been noted in their reasoning. A classic example is: "I am a virgin; the Virgin Mary was a virgin; therefore I am the Virgin Mary."

Hence, there is some possibility that a significant relationship exists between abnormal left hemisphere and left temporal lobe functioning and schizophrenic language, thought, and behavior.

THE LANGUAGE AXIS

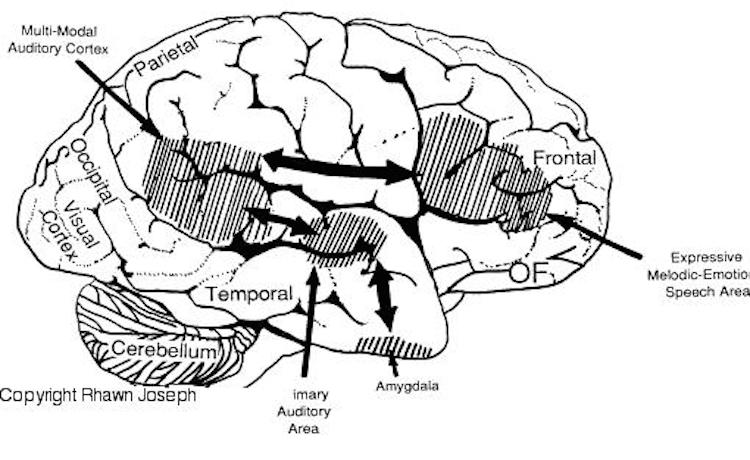

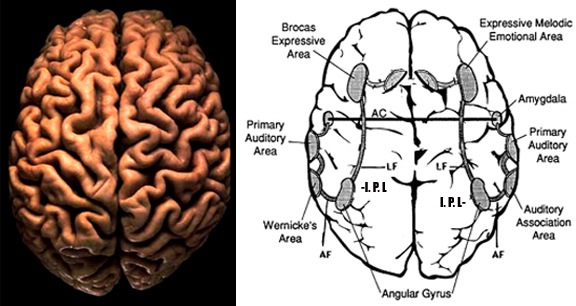

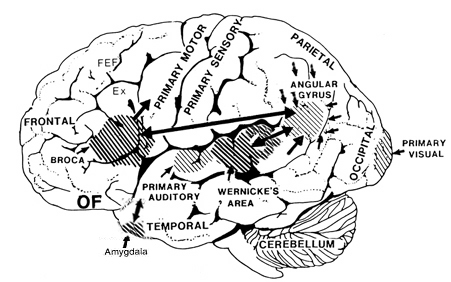

A large expanse of the brain is involved in auditory perception, analysis, and production. This includes the amygdala; the inferior, middle, and superior temporal lobe; the inferior parietal and inferior frontal areas; and the thalamus.

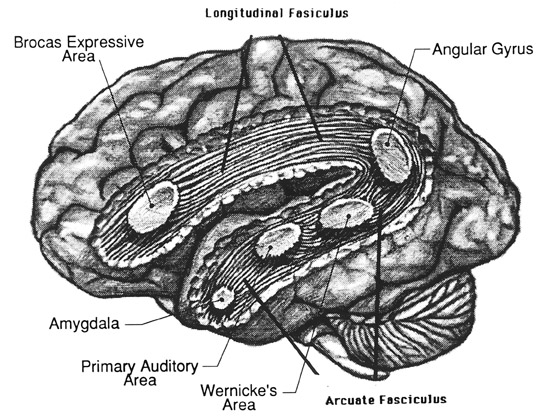

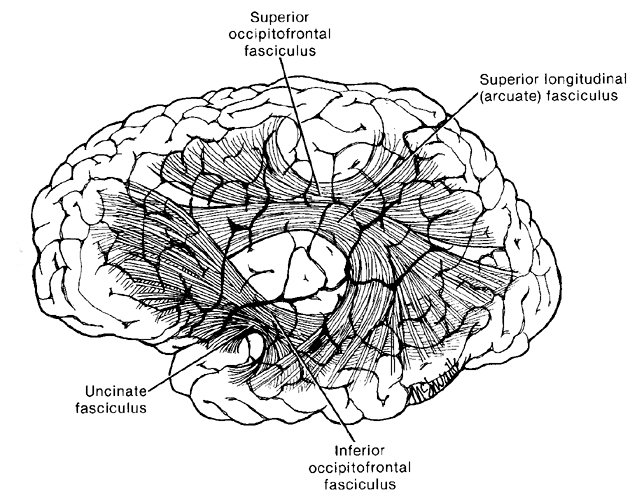

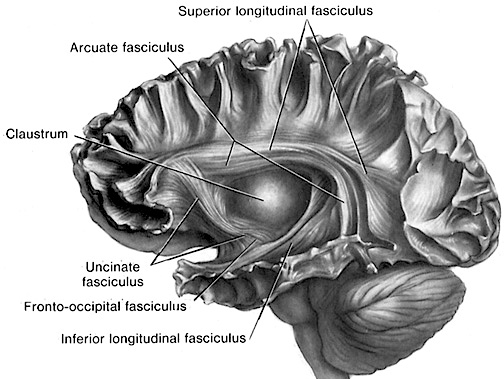

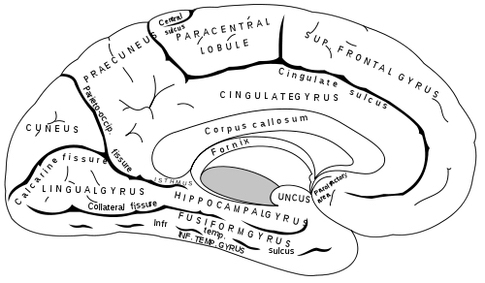

The auditory area extends in a continuous belt-like fashion from the amygdala located deep in the inferior temporal lobe, to the primary and association (e.g. Wernicke's) area in the superior temporal lobe, toward the inferior parietal lobule, and via the arcuate fasciculus (a rope of axons interconnecting these areas) onward toward Broca's area in the frontal lobe.

Indeed, in the left hemisphere, this massive rope of interconnections forms a Language Axis, such that the amygdala, Wernicke's area, the inferior parietal lobule, and Broca's area are linked together and, via these extensive interconnections, are able to mediate the perception and expression of most forms of language and speech 37.

This rope-like bundle of axons, the arcuate fasciculus, is a bidirectional fiber pathway. As noted, it runs not only from Wernicke's through to Broca's area but extends inferiorly deep into the temporal lobe where contact is established with the amygdala. In this manner, auditory input comes to be assigned emotional-motivational significance, whereas verbal output becomes emotionally-melodically enriched. Within the right hemisphere, these interconnections which include the amygdala appear to be more extensively developed 35.

It is via this linkage that, when a sentence is read, the information is transferred to the inferior parietal lobule and to Wernicke's area so that these two regions can in turn access the appropriate verbal labels for each letter, word, and so on. Moreover, through these interconnections, not just words but concepts, categories, associated attributes (e.g., color, form, size, shape, cost, desirability, use), and the emotional significance of whatever is read, heard, or felt can be accessed or determined as well. This information is then integrated within the Language Axis: associated, labeled, named, strung together, comprehended, provided some emotional coloration, and, if necessary, talked about via transmission to Broca's area.

THE MELODIC-INTONATIONAL AXIS

Language is not just a function of the left half of the brain, however, as the right half of the cerebrum makes significant contributions to the understanding and expression of its contextual, emotional and melodic components. For example, it has been consistently demonstrated that the right temporal lobe (left ear) predominates in the perception of timbre, chords, tone, pitch, loudness, melody, intensity, prosody, and non-verbal, environmental, and emotional sounds 36.

Damage to the right temporal lobe disrupts the ability to sing, carry a tune, and perceive, recognize, or recall tones, loudness, timbre, and melody, and one's sense of humor may be abolished as well 37. Similarly, the ability to recognize even familiar melodies and the capacity to obtain pleasure while listening to music is abolished or significantly reduced, a condition referred to as amusia. In addition, lesions involving the right temporal-parietal area have been reported to significantly impair the ability to perceive and identify environmental sounds, comprehend or produce appropriate verbal prosody and emotional speech, or repeat emotional statements. Indeed, when patients are presented with neutral sentences spoken in an emotional manner, right temporal-parietal damage has been reported to disrupt the perception and comprehension of humor, context, and emotional prosody, regardless of whether it is positive or negative in content 38.

Hence, the right temporal-parietal area is involved in the perception, identification, and comprehension of environmental and musical sounds and various forms of melodic and emotional auditory stimuli. This region then probably acts to prepare this information for expression via transfer to the right frontal lobe.

Just as Broca's area in the left frontal region is responsible for the production of words and sentences, a similar region in the right frontal lobe is dominant for the expression of emotional-melodic and even environmental sounds. Hence, it appears that an emotional-melodic-intonational Axis, somewhat similar in anatomical design to the Language Axis of the left half of the brain, is maintained within the right hemisphere 39.

When the posterior portion of the Melodic-Emotional Axis (i.e., the right temporal-parietal area) is damaged, the ability to comprehend or repeat melodic-emotional vocalizations is disrupted. Such patients are thus agnosic for non-linguistic sounds. With right frontal convexity damage, speech becomes bland, atonal, and monotone, or the tones produced become abnormal.

LIMBIC LANGUAGE

MATERNAL BEHAVIOR & INFANT SEPARATION CRIES

Fish and many other creatures that swim the shining sea are capable of producing sounds. Marine mammals, such as dolphins and a variety of whales, in fact produce numerous informative and highly meaningful sounds. However, these, like most other mammals, first developed and evolved on dry land and only later returned to live in the deep. Nevertheless, creatures that roamed the planet or swam beneath the waves some 300 or more million years ago also relied on sound as a means of communication, although the brain structures and external hearing apparatus they possessed were probably quite rudimentary, not much more developed than those seen in modern-day frogs and lizards 40. As such, they were attuned to low-level vibrations and sounds such as croaking, tails being thumped on the ground, and, at least among the amphibia, a few distress calls and sounds of contentedness.

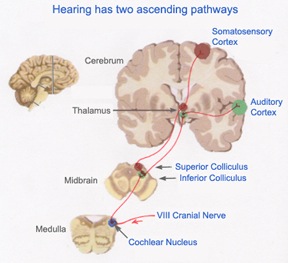

When reptiles began to differentiate and evolve into the repto-mammals, and then, many millions of years later, when reptiles again diverged and the first tiny dinosaurs began to roam the Earth, several major biological alterations occurred. These include changes in skeletal structure, thermo-regulation, reproduction, and skull and brain size and organization, all of which coincided with the development of audio-vocal communication 41.

For example, most reptiles show absolutely no maternal care, do not vocalize, and are capable of hearing, at best, only low-frequency vibrations. Moreover, infants generally must hide from their parents and other reptiles in order to avoid being cannibalized. One might presume that the first reptiles were in no way more advanced than their predecessors in this regard.

In contrast, many of the repto-mammalian therapsids, and later many of the various dinosaurs, lived in packs or social groups and presumably cared for and guarded their young for extended periods lasting up until the juvenile stage 42. Hence, one of the hallmarks of this evolutionary transitional stage, some 250 million years ago, was the first evidence of maternal feelings and of what would become the family.

Among the repto-mammals and the dinosaurs, significant alterations also occurred within the brain, including the development of brain cells capable of producing highly meaningful social and maternally related sounds, and of yet other regions that would inhibit the tendency to consume one's young.

Among social terrestrial vertebrates the production of sound is very important in regard to infant care, for if an infant becomes lost, separated, or in danger, a mother would have no way of quickly knowing this by smell alone. Such information would have to be conveyed via a cry of distress or a sound indicative of separation fear and anxiety. It would be the production of these sounds which would cause a mother to come running to the rescue.

THE CINGULATE GYRUS

As noted, all such creatures, including reptiles and fish, possess a limbic system, consisting of an amygdala, hippocampus, hypothalamus, and septal nuclei. It is these structures, the amygdala in particular, which are important in maternal care and infant bonding, and which are most highly developed in mammals and humans. It is the presence of these particular limbic nuclei which enable a group of fish to congregate together, i.e. to school, or for reptiles to form territories which include an alpha female, several sub-females, and a few juveniles 43.

Such creatures, however, do not care for their young and do not produce complex meaningful sounds, as they lack more recently acquired brain tissue. These more advanced tissues include not only the neocortex but a cortical mass which is intermediate between neocortex and old cortex, and which is the most recently developed region of the limbic system. This new, or rather, transitional limbic-cortical tissue is the cingulate gyrus. It is likely that the expansion that occurred within the repto-mammalian brain some 250 million years ago was due to the evolution of this transitional, four-layered cingulate limbic cortex 44. It is only rather late in evolution, about 100 million years ago, that the first thin sheets of neocortex would begin to spread across and cover the old limbic brain and the cingulate gyrus.

The cingulate cortex is intrinsically linked to the hippocampus and the amygdala; it is absent in reptiles and fully present only in mammals. Moreover, the cingulate in humans maintains direct interconnections with the left and right frontal regions, which, as noted, are responsible for the production of speech and emotional sounds. More importantly, when the cingulate cortex is electrically stimulated, the separation cry, similar if not identical to that produced by an infant, is elicited 45, and it is only this area which produces it. It thus appears that the cingulate, in conjunction with the amygdala and other limbic tissue, may well be responsible for infant care and for the initial production of what would become language. I have referred to this elsewhere as "limbic language" 46.

LIMBIC LANGUAGE: AMYGDALA & CINGULATE CONTRIBUTIONS

Phylogenetically and ontogenetically, the original impetus to vocalize springs forth from roots buried within the depths of the ancient limbic lobes (e.g. amygdala, hypothalamus, septum, cingulate) 47. Although non-humans do not have the capacity to speak, they still vocalize, and these vocalizations are primarily limbic in origin being evoked in situations involving sexual arousal, terror, anger, flight, helplessness, and separation from the primary caretaker when young. The first vocalizations of human infants are similarly emotional in origin and limbically mediated.

Although cries and vocalizations indicative of rage or pleasure have been elicited via hypothalamic stimulation, of all limbic nuclei the amygdala is the most vocally active, followed only by the cingulate 48. In humans and animals a wide range of emotional sounds have been evoked through amygdala activation, including those indicative of pleasure, sadness, happiness, and anger.

Conversely, in humans, destruction limited to the amygdala, the right amygdala in particular, has abolished the ability to sing, convey melodic information or to enunciate properly via vocal inflection. Similar disturbances occur with right hemisphere damage. Indeed, when the right temporal region (including the amygdala) has been grossly damaged or surgically removed, the ability to perceive, process, or even vocally reproduce most aspects of musical and emotional auditory input is significantly curtailed. Moreover, the person loses much of their social interest and ceases to express affection, love, or emotions.

Similarly with destruction of the anterior cingulate, there results a loss of fear or socially appropriate behavior. Humans will often become initially mute and socially unresponsive, and when they speak, their vocal inflectional patterns and the emotional sounds they produce sound abnormal 49. Animals, such as monkeys who have suffered cingulate destruction will also become mute, will cease to groom or show acts of affection and will treat their fellow monkeys as if they were inanimate objects. For example, they may walk upon and over them as if they were part of the floor or some obstacle rather than a fellow being 50. Maternal behavior is also abolished and the majority of infants soon die from lack of care.

The amygdala and the cingulate gyrus, however, appear to influence language in a different manner. As noted, the amygdala is buried within the depths of and maintains rich interconnections with all regions of the temporal lobe, including the neocortex and fiber pathways which link Wernicke's receptive speech area with Broca's expressive speech area, i.e. the arcuate fasciculus.

Via its interconnections with Wernicke's area in the left temporal lobe and the sound-receiving areas in the right temporal lobe, the amygdala is able to discern, as well as apply, emotional coloration to all that is heard. It is also able to add emotional tone to all that might be said. This is why, when the right amygdala has been destroyed or surgically removed, the ability to sing as well as to properly intonate is altered.

In this regard, the amygdala should be considered part of the melodic-intonational axis of the right hemisphere, and part of the language axis of the left hemisphere, as it not only responds to and analyzes environmental sounds and emotional vocalizations but imparts emotional significance to auditory input and output processed and expressed at the level of the neocortex.

The cingulate, via its rich interconnections with the amygdala, adds yet another level of motivational and emotional significance to all that is heard, including possible feelings of maternal concern. Through its connections with the more evolutionarily advanced neocortical regions of the left and right frontal lobes, and thus with Broca's expressive speech area and the melodic-emotional speech area in the right half of the brain, the cingulate is able to directly influence the emotional and melodic tone of all that is said. Hence, the cingulate gyrus, like the amygdala, is an important part of both the Language Axis of the left half of the brain and the Melodic-Emotional Axis of the right. These two limbic nuclei provide the motive source from which spoken language derives its origins.

GRAMMAR & AUDITORY CLOSURE

Despite claims regarding "universal grammars" and "deep structures," it is apparent that many people when conversing together, speak in a decidedly non-grammatical manner with many pauses, repetitions, incomplete sentences, irrelevant words, and so on. However, this does not prevent comprehension since the structure of the nervous system enables us to perceptually alter word orders so they make sense, and even fill in words which are left out.

In one experiment reviewed by Peter Farb in the book Word Play 51, listeners were played a tape of a sentence in which a single syllable ("gis") in the word "legislatures" had been deleted and replaced with static (i.e., "le...latures"). No one detected the gap; instead, listeners filled in the missing sound so that they heard "legislatures."

In another experiment, when the word "tress" was played on a loop of tape 120 times per minute ("tresstresstress..."), subjects reported hearing words such as dress, florists, purse, Joyce, and stress. In other words, they organized these sounds into meaningful speech sounds, which were then coded and perceived as words.

The ability to engage in gap filling and sequencing, and to impose temporal order on incoming (supposedly grammatical) speech, is important because human speech is not always fluent: many words are spoken in fragmentary form, and sentences are usually four words or less. Much of it also consists of pauses and hesitations, "uh" or "err" sounds, stutters, repetitions, and stereotyped utterances such as "you know" and "like."

Hence, a considerable amount of reorganization as well as filling in must occur before comprehension, and this requires that these signals be rearranged in accordance with the temporal-sequential rules imposed by the structure and interaction of Wernicke's area, the inferior parietal lobe, and the nervous system (what Noam Chomsky referred to as "deep structure" 52) so that they may be understood.

Consciously, however, most people fail to realize that this filling in and reorganization has even occurred, unless directly confronted by someone who claims that she said something she believes she didn't. Nevertheless, this filling in and process of reorganization greatly enhances comprehension and communication.

UNIVERSAL GRAMMARS

Regardless of culture, race, environment, geographical location, parental verbal skills or attention, children the world over go through the same steps at the same age in learning language 53. Unlike reading and writing, the ability to talk and understand speech is innate and requires no formal training. One is born with the ability to talk, as well as the ability to see, hear, feel, and so on. However, one must receive training in reading, spelling and mathematics as these abilities are acquired only with some difficulty and much effort. On the other hand, just as one must be exposed to light or he will lose the ability to see, one must be exposed to language or he will lose the ability to talk or understand human speech.

In his book Syntactic Structures, Noam Chomsky argues that all human beings are endowed with an innate ability to acquire language, as they are born able to speak in the same fashion, albeit according to the tongue of their culture, environment, and parents 54. They possess all the rules that govern how language is spoken, and they process and express language in accordance with these innate temporal-sequential motoric rules, which we know as grammar.

Because children possess this structure, which is in turn imposed by the structure of the nervous system, they are able to learn language even when what they hear falls outside this structure and is filled with errors. That is, they tend to produce grammatically correct speech sequences even when those around them fail to do so. They disregard errors because their nervous system does not process them; instead, it either imposes order where there is none or alters or deletes the message altogether. It is also because we all possess the same inferior parietal lobe "deep structures" that speakers can generally recognize when a sentence is spoken in a grammatically incorrect fashion.

Conversely, it is because apes, monkeys, dogs, and cats do not possess these deep structures that they are unable to speak or to produce grammatically complex signs. Hence, their vocalizations are not punctuated by the temporal-sequential gestures that the Language Axis imposes upon auditory input or output.

LANGUAGE ACQUISITION: FINE TUNING THE AUDITORY SYSTEM

By the time we reach adulthood, we have learned to attend to certain sounds and to ignore the rest. Some sounds we almost never hear because we learned long ago that they are irrelevant or meaningless. Others we lose the ability to hear because of actual physical changes within the auditory system, such as deterioration and deafness.

Initially, however, beginning at birth, there is a much broader range of generalized auditory sensitivity, which narrows over the course of life. It is this generalized sensitivity that enables children to learn a foreign tongue more rapidly and efficiently than adults, a capacity that decreases with age. These same changes are also, in part, why generational conflicts over what constitutes "music" so frequently arise.

However, because much of what is heard is irrelevant and is not used in the language the child is exposed to, the neurons that mediate the perception of these sounds drop out and die from disuse, further restricting the range of sensitivity. This loss further aids the fine-tuning process so that, for example, one's native tongue can be learned.

No two languages have the same set of phonemes. For this reason, to speakers of some languages certain English words, such as pet and bet, sound exactly alike, as they are unable to distinguish between or recognize these different sound units. Through this fine-tuning process, only those phonemes essential to one's native tongue are attended to.

Languages differ not only in the number of phonemes but also in how many are devoted to vowels versus consonants, and so on. Some Arabic dialects have 28 consonants and 6 vowels. By contrast, English has 45 phonemes, which include 21 consonants, 9 vowels, 3 semivowels (y, w, r), 4 stresses, 4 pitches, 1 juncture (pauses between words), and 3 terminal contours, which are used to end sentences.

All the sounds that make up the infinite variety of utterances comprising the English language are derived from these 45 phonemes. Learning to attend selectively to these 45 phonemes, as well as to specific consonants and vowels, requires that the nervous system become fine-tuned to perceive them while ignoring others. In consequence, those cells which are unused die.

Children are able to learn their own as well as foreign languages with much greater ease than adults because infants initially maintain a sensitivity to a universal set of phonetic categories. Because of this, they are predisposed to hear and perceive speech, and anything speech-like, regardless of the language employed.

These sensitivities are either enhanced or diminished during the first few years of life, so that the speech sounds the child most commonly hears become accentuated and more closely attended to, sharpening the distinctions between them. This generalized sensitivity in turn declines as a particular language is acquired and as unused nerve cells are lost. The nervous system becomes fine-tuned so that familiar language-like sounds are processed and ordered in the manner it dictates, i.e., according to the universal grammatical rules common to all languages.

Fine-tuning is also a function of experience, which in turn exerts a tremendous influence on nervous system development and cell death. Hence, by the time most people reach adulthood, they have long since learned to sort most new experiences into the categories and channels they have relied upon for decades.

As a consequence of this filtering, however, sounds that arise naturally within one's environment can be altered, rearranged, suppressed, and thus erased. Fine-tuning the auditory system to learn culturally significant sounds and to acquire language comes at a sacrifice: it occurs at the expense of one's natural awareness of the environment and its orchestra of sounds. In other words, the fundamental characteristics of reality are subject to language-based alterations.

LANGUAGE AND REALITY

According to Edward Sapir: "Human beings are very much at the mercy of the particular language which has become the medium of their society... the real world is to a large extent built up on the language habits of the group. No two languages are ever sufficiently similar to be considered as representing the same social reality." 55

According to Benjamin Whorf, "language... is not merely a reproducing instrument for voicing ideas but rather is itself the shaper of ideas... We dissect nature along lines laid down by our native languages." Indeed, Whorf believed that it is not just the words we use but grammar that shapes our perceptions and our thoughts 56.

A grammatically imposed structure forces perceptions to conform to a mold that gives them not only shape but also direction and order. Moreover, distinctions imposed by temporal order, as well as by necessity, result not only in linguistically based categories but also in the labels and verbal associations that enable them to be described. Again, however, they are described in accordance with the rules and vocabulary of language.

Eskimos possess an extensive and detailed vocabulary that enables them to make fine verbal distinctions between different types of snow, ice, and prey, such as seals. To a man born and raised in Kentucky, snow is snow and all seals may look the same. But if that same Kentuckian had been raised around horses all his life, he might in turn employ a rich and detailed vocabulary to describe them, e.g., Appaloosa, paint, pony, stallion, and so on. To the Eskimo, however, a horse may be just a horse; these other names may mean nothing to him, and all horses may look the same.

Moreover, both the Eskimo and the Kentuckian may be completely bewildered by the thousands of Arabic words associated with camels, the twenty or more terms for rice used by different Asian communities, or the seventeen words the Masai of Africa use to describe cattle.

Yet these are not just words and names, for each cultural group is also able to see, feel, taste, or smell these distinctions. An Eskimo sees a horse, but a breeder may see a living work of art whose hair, coloring, markings, stature, tone, height, and so on speak volumes about its character, future, and genetic endowment. Indeed, many ranchers are able to recognize their cows as individuals, even though many of them look almost identical to a city slicker or a Burger King patron.

Through language one can teach another individual to attend to and make the same distinctions, and to create the same categories. In this way, those who share the same language and cultural group learn to see and talk about the world in the same way, whereas those speaking a different dialect may in fact perceive a different reality.

For example, when A.F. Chamberlain visited the Kootenay and Mohawk Indians of British Columbia during the late 1800s, he noted that they even heard animal and bird sounds differently than he did 57. When listening to owls hooting, he heard "tu-whit-tu-whit-tu-whit," whereas the Indians heard "Katskakitl." Once he became accustomed to their language and began to use it, however, he too began to hear these sounds differently, listening with his "Indian ears." When listening to a whip-poor-will, for instance, he noted that instead of saying "whip-poor-will," it was saying "kwa-kor-yeuh."

Observations such as these strongly suggest that if one were to change languages, one might also change one's perceptions and even one's thoughts and attitudes. Consider, for example, an experiment reported by Farb, in which bilingual Japanese-born women married to American servicemen were asked to complete the same sentences in English and in Japanese 58. The following responses were typical.

"When my wishes conflict with my family's..."

"...it is a time of great unhappiness." (Japanese)

"...I do what I want." (English)

"Real friends should..."

"...help each other." (Japanese)

"...be very frank." (English)

Obviously, language does not shape all of our attitudes and perceptions. Moreover, language is often a consequence of differences in perception, which in turn require the invention of new linguistic labels to describe new experiences. Speech, of course, is also filtered through the personality of the speaker and the listener and is influenced by their attitudes, feelings, beliefs, prejudices, and so on, all of which can affect what is said, how it is said, and how it is perceived and interpreted.

On the other hand, regardless of the nature of the experience, be it visual, olfactory or sexual, language can influence our perceptions and experiences and our ability to derive enjoyment from them, for example, by labeling them cool, hip, sexy, bad, or sinful, and by instilling guilt or pride. In this manner, language serves not only to label and filter reality, but affects our ability to enjoy it.

REFERENCES