Rhawn Gabriel Joseph, Ph.D.

BrainMind.com

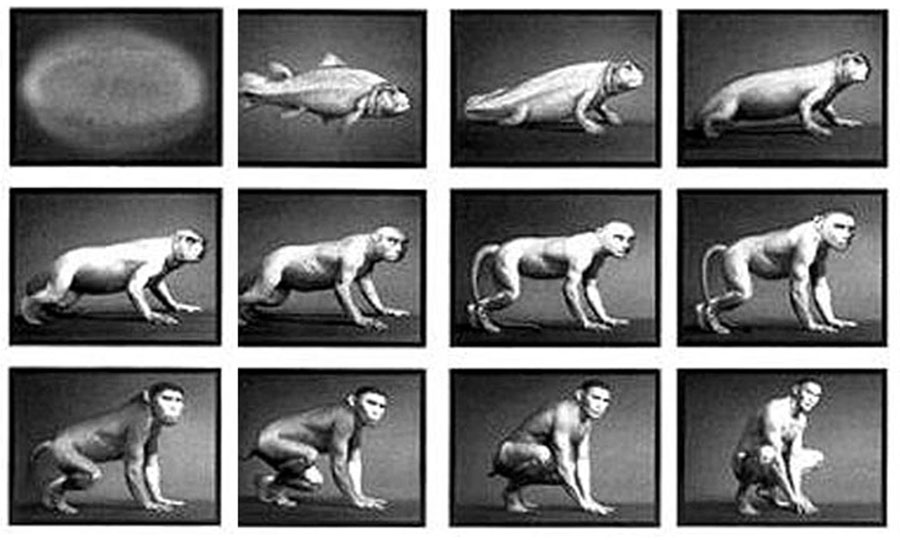

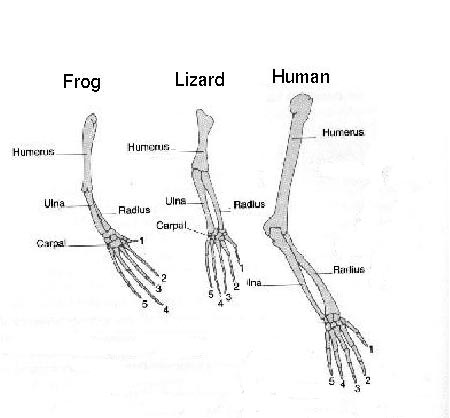

Until around 580 million years ago, the vast majority of life forms sojourning on Earth and beneath the seas were single-celled organisms and simple multi-celled creatures composed of fewer than 11 different cell types (Bottjer et al., 2006; Glaessner et al., 2010; Narbonne 2005; Narbonne and Gehling 2003; Shen et al., 2008). Until sufficient oxygen, silica, and calcium had been released and the oceans had become oxygenated, body and cell size were restricted, and organisms were unable to grow larger or engage in strenuous physical activity. Larger bodies require skeletal support. Internal organs require skeletal protection. Moreover, in the absence of ozone, larger sized bodies would be burnt by UV rays and would pop and explode. Therefore, beginning around 640 mya, once silica, calcium, and oxygen levels had increased and a protective (oxygen-initiated) ozone layer was established, creatures expanded in size, diversified, and grew spines, silica skeletal compartments, then silica-collagen skeletons, collagen-calcium skeletons, armor plates (sclerites) and small shells like those of brachiopods and snail-like molluscs (Matthews and Missarzhevsky, 1975; Mooi and Bruno, 2011; Butterfield 2003; Conway Morris 2003; Lin et al., 2006).

There ensued an explosion of life with all manner of complex creatures appearing in every river, ocean, and stream. This vast explosion of bilateral metazoan diversity appeared multi-regionally throughout the oceans of the Earth within 5 to 10 million years (Levinton, 1992; Kerr, 1993, 1995). Over 32 phyla rapidly evolved, many with the "modern" body plans seen in modern animals (Fortey et al., 1997; Valentine et al., 2011; Conway Morris 2000; Budd and Jensen 2000; Peterson et al. 2005). These included organisms with a hard tube-like outer skeleton consisting of calcium carbonate, and all manner of "small shelly fauna" (Anabarites, Protohertzina), as well as sponges and jellyfish, and later, mollusks, brachiopods, and the first arthropods (e.g. trilobites), which immediately sprouted legs. In fact, with no history of derivative ancestral forms, and over the course of just a few million years, all manner of complex life forms emerged, and many species were equipped with gills, intestines, joints, and modern eyes with retinas and fully modern optic lenses. Indeed, every phylum in existence today, as well as several which have since become extinct, emerged during the Cambrian Explosion, including the phylum Chordata and animals which would possess a rudimentary 2-layered brain consisting of a spinal cord, brainstem, midbrain and limbic forebrain.

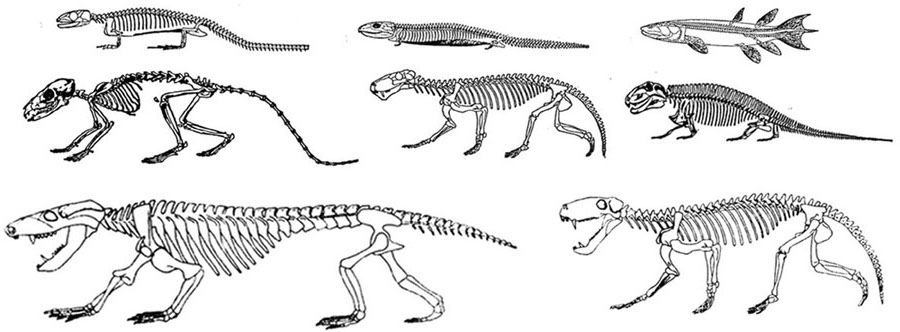

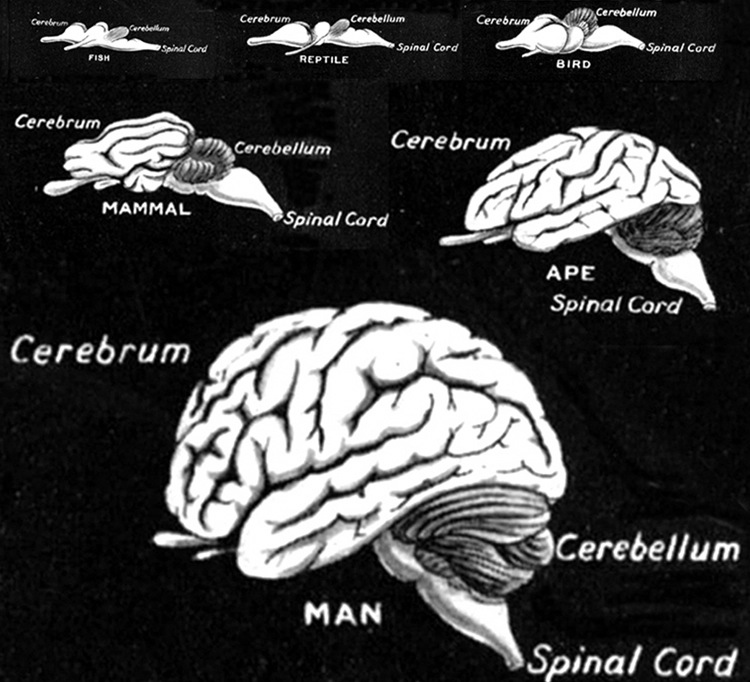

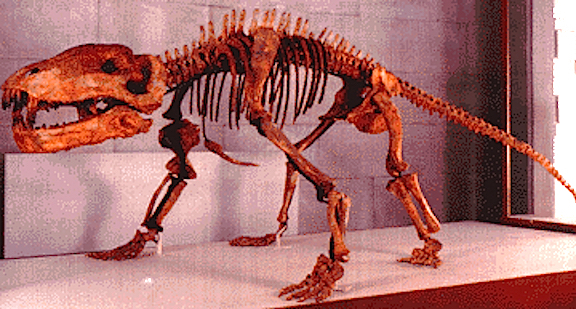

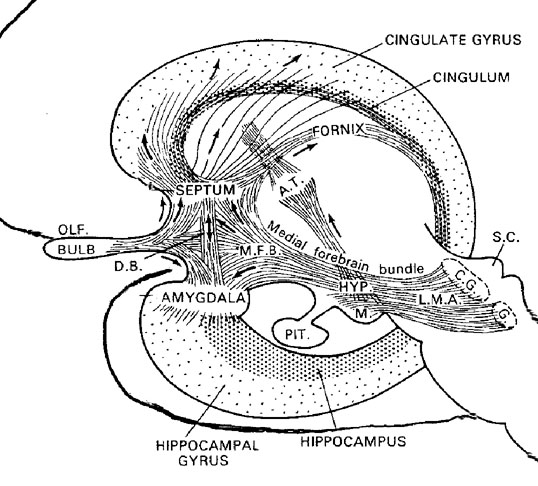

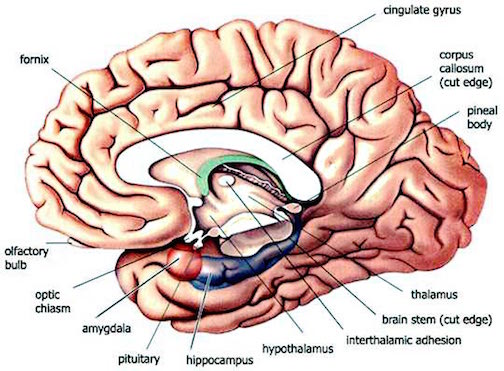

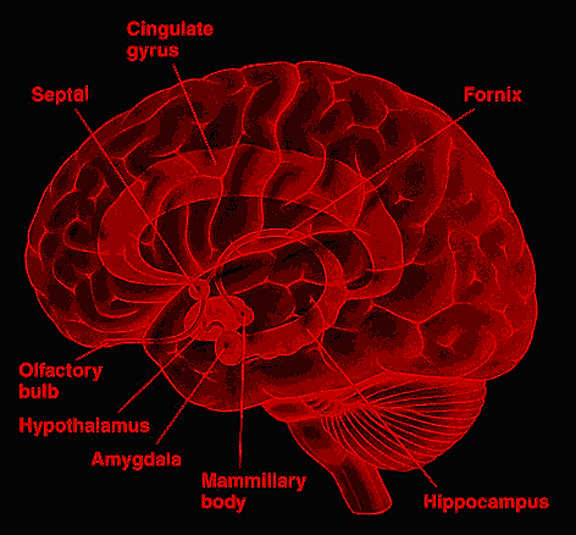

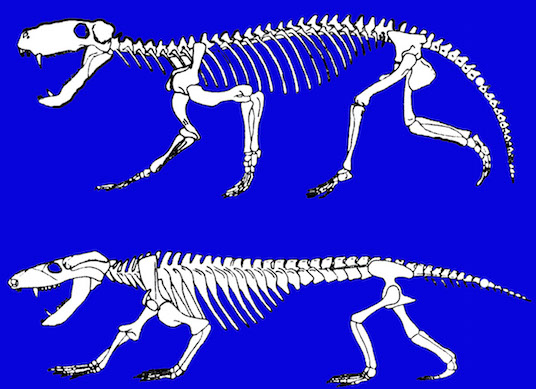

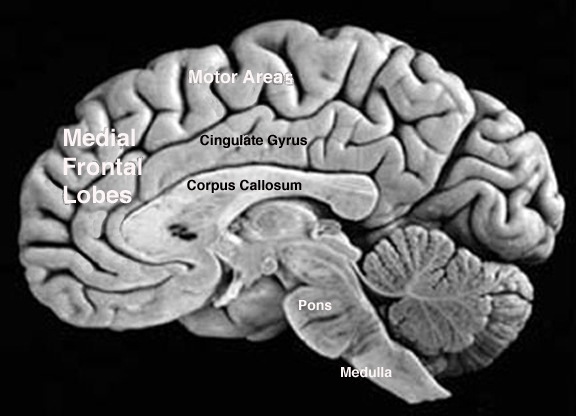

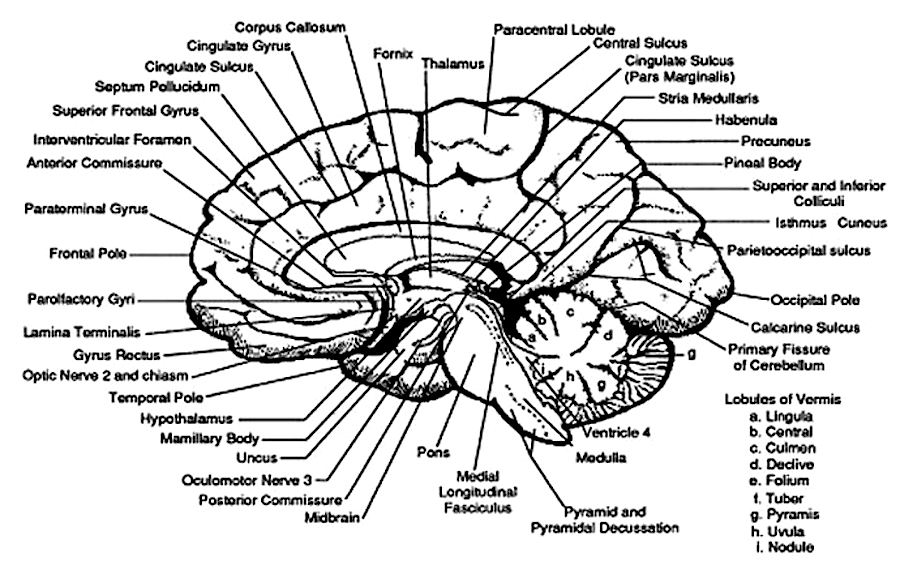

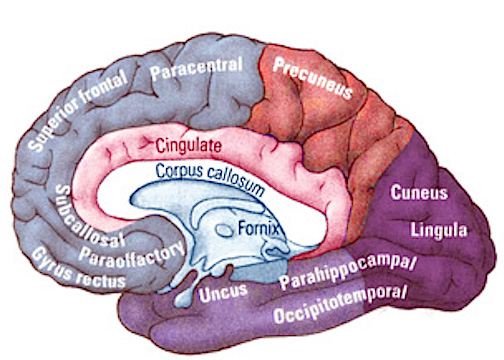

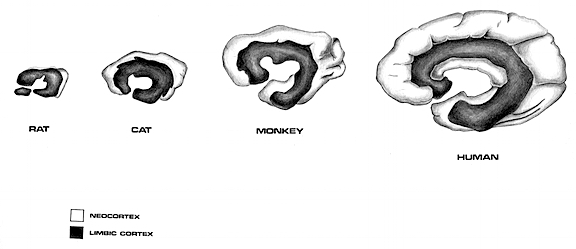

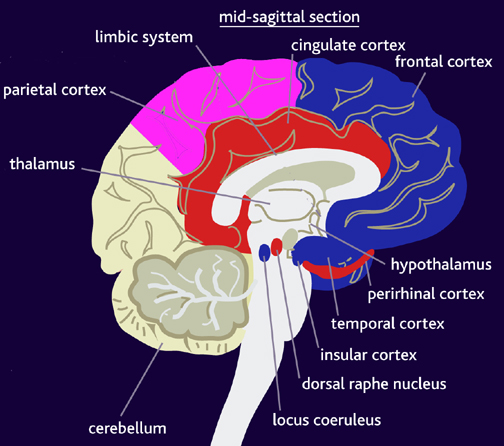

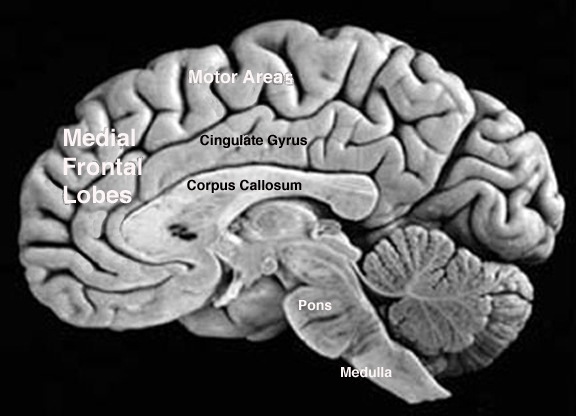

The evolution of fish, amphibians, reptiles, and then repto-mammals ensued over the course of the next 300 million years. The repto-mammals were distinguished by the cerebral metamorphosis of the 4-5 layered cingulate gyrus, which stretched in an arc over the hypothalamus, hippocampus, and amygdala. True 6 layered mammalian neocortex was probably also beginning to form in thin patches.

By 200 million years ago therapsids had already evolved from repto-mammals, whereas by 150 to 100 million years ago, mammals began to evolve from and slowly replace the therapsids, emerging multi-regionally on every continent. The evolution of mammals is distinguished by the six layered neocortical mantle which sits atop and surrounds, like a shroud, the more ancient regions of the brain. It is this six layered neocortex which would give rise to language, thought, and the rational mind.

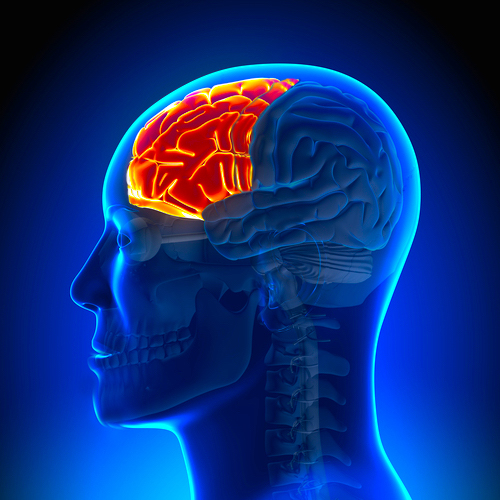

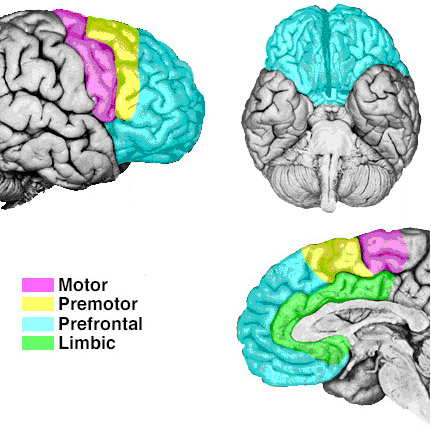

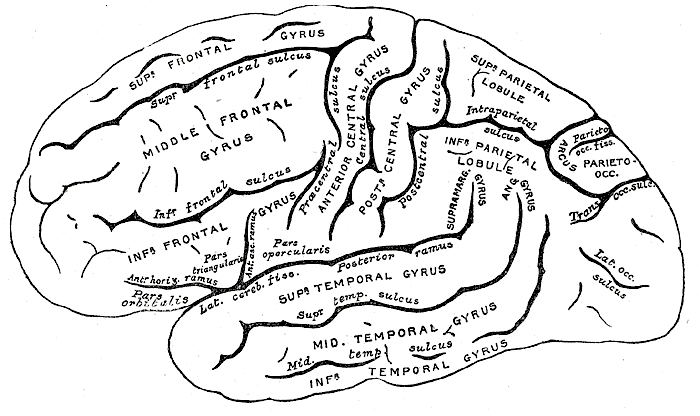

Corresponding with the ascendance of mammals was the continued expansion of the brain and development of the six layered telencephalon. Indeed, much of the new cortex had evolved for the specific purpose of serving the needs of the limbic system, and thus, these additional layers evolved out of the amygdala, hippocampus, and cingulate gyrus, thereby giving rise to the frontal, temporal, occipital, and parietal lobes.

For example, the medial and then the lateral (motor areas) of the frontal lobe were fashioned from the anterior cingulate gyrus, whereas portions of the amygdala and hippocampus had become increasingly cortical and had given rise to the inferior, medial, lateral, and superior temporal lobe (e.g. Sanides 1964). Hence, six to seven layers of cortex, i.e. neocortex, began to enshroud and form the cerebral hemispheres which in turn conferred upon these creatures extraordinary powers of intelligence, foresight, planning, and communication. Being much more intelligent, mammalian predators were becoming a serious competitive threat to the more dim witted dinosaurs.

By 75 million years ago the number of dinosaurs began to decline throughout most parts of the world (cf. Paul, 2012) with the possible exception of North America. However, about 65 million years ago a huge asteroid or meteor struck the planet near the Gulf of Mexico (Alvarez, 1986; Alvarez & Asaro, 2010; Hildebrand, 1991) and a vast number of (large sized) species died out in response to this cataclysm (Raup, 1991), particularly those who lived in North America; i.e. the dinosaurs. Apparently the explosion was so massive that animals for thousands of miles around were instantly incinerated, and so much dust was thrown into the air that the sunlight was blotted out, causing temperatures to plummet, thus killing off larger sized cold blooded animals and reducing the plant-food supply for many other creatures.

Nevertheless, mammals (including small primates), being warm blooded, and other smaller sized creatures were able to recover and apparently take competitive advantage of the situation. Hence, with the evolution of neocortically endowed mammalian Creodonta and related carnivores (Carnivora) the remaining dinosaurs could not compete and were completely eradicated. The Age of Mammals had begun.

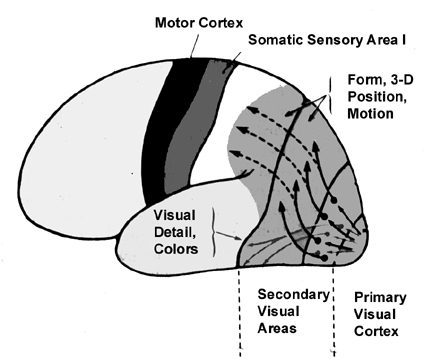

PRIMATE EVOLUTION

With mammals and primates, who apparently diverged from the mammalian line some 70-100 million years ago (Colbert, 1980; Jarvik, 1980; Jerison, 1973; Romer, 1970), in ascendance, and exploring, exploiting and gaining dominion over the Earth, the brain also underwent further adaptive alterations and evolutionary advances in structure and organization. For example, as these creatures now ruled the day as well as the night and as they were advancing into every niche that had formerly been forbidden to them, there were tremendous expansions in visual cortex and thus in the size of the occipital and temporal lobes, especially among the primates some 50 million years ago (Allman, 2010; Jerison, 2010). In fact, 50 million years ago the primate brain was much larger than the brain of similar sized mammals.

It is believed that some of the first proto-primates were possibly little rat-like creatures with long snouts and whiskers who devoured insects. In this regard they were no match for the numerous mammalian predators who lurked everywhere. On the other hand, it is just as likely that the first primates were in fact much larger, perhaps equivalent to a medium sized dog. Nevertheless, it was presumably from one or several of these first primate stocks that monkeys, apes, and humans branched off and allegedly descended.

Some primate lines adapted to life on the ground and these creatures flourished for almost 10 million years before dying out. By contrast, those primates who took to the trees flourished and rapidly adapted to living among the branches of the trees and the forest. They began to grow fingers and their hands and feet became adapted for grasping. As the environment acts on gene selection, tremendous alterations consequently occurred in the occipital and parietal lobes and in the frontal motor system, which was now significantly contributing to the control of the extremities as these creatures became increasingly adapted to a life in the trees. However, what has been referred to as "prefrontal" cortex--that is, the tissue anterior to the frontal motor areas--remained poorly developed (LeGros Clark, 1962).

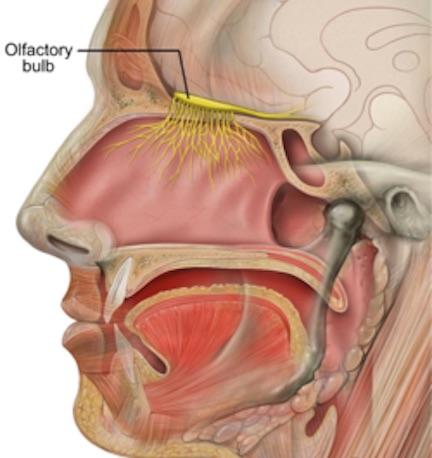

The visual system was also modified in that the eyes had shifted from the sides of the head and became frontally placed which in turn resulted in stereoscopic vision, greater central visual acuity as well as improved hand-eye coordination (Allman, 2010). Like other mammals, these primates also continued to rely on olfactory and pheromonal communication; and as in mammals, the olfactory bulb remained large (Jerison, 1973, 2010).

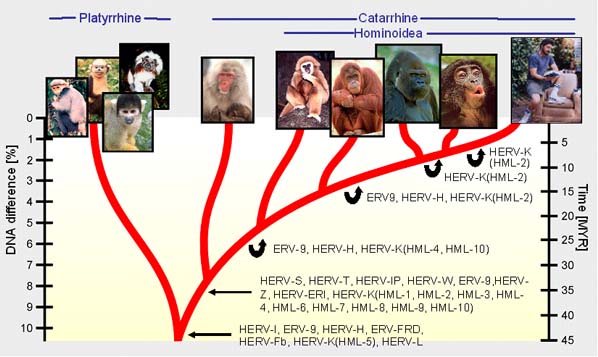

It was presumably these widely dispersed tree loving stocks that gave rise to monkeys in Africa, India, Asia, and the Americas about 40 million years ago (Leakey, 1988; Pfeiffer, 2013). One or several branches of this wide ranging stock of monkeys in turn gave rise to apes (hominoids) about 30 million years ago (Sibley & Ahlquist, 1984), with what would become chimpanzees and gorillas eventually appearing in Africa, and orangutans appearing in India and Asia. Presumably numerous branches from these varied primate-hominoid trunk lines diverged again, and yet again, and gave rise to several possible hominid ancestral lines, one or several of which would eventually lead to the evolutionary metamorphosis of the first hominids.

FROM HOMINOID TO HOMINID

As to the ancestors of the first hominids, there are several candidates. These include Dryopithecus and Sivapithecus, ape-like hominid/hominoids who emerged in Europe and India about 16 million years ago, as well as Ankarapithecus of Turkey, Ouranopithecus of Greece, and especially Ramapithecus, whose remains have been discovered in Africa, India, and Southwest China (Jurmain, et al. 2010; Munthe et al. 2013). Ramapithecus stood about three feet high and had a low forehead, a flat wide nose, and a face shaped like a muzzle. Although he was a seed eater and tended to grind his food, Ramapithecus (or like minded) males probably occasionally hunted, captured and killed by hand small game and possibly other primates, whereas females and their young obtained the brunt of their food by gathering (Leakey & Lewin, 1977; Pfeiffer 2013). Ramapithecus, in fact, appears closely related to Dryopithecus and Sivapithecus, and may have given rise to Gigantopithecus, whose 8 million year old remains have been found in India, China, and Vietnam. Gigantopithecus in turn may have been the ancestor of those Australopithecines which emerged in these lands.

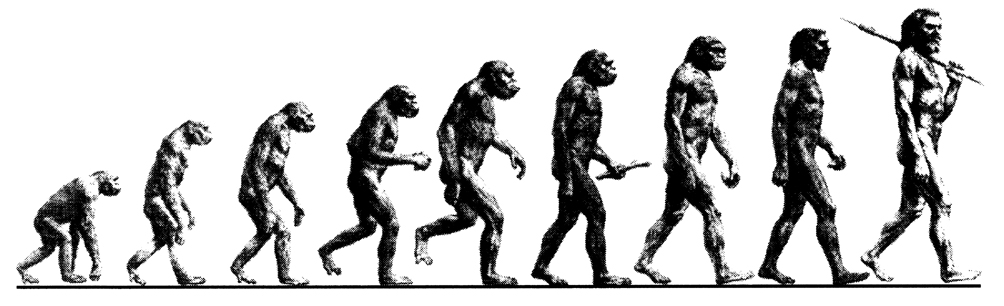

Evolutionary metamorphosis is most likely to occur when an organism is exposed to a multiplicity of changing environments, or where two divergent worlds meet, as the environment acts on gene selection. For the pre-hominid hominoids such as Dryopithecus, Sivapithecus, Ramapithecus, Ankarapithecus, Ouranopithecus, and Gigantopithecus, the netherworld of change occurred during a period in which parts of the planet were bathed in renewed warmth, thereby causing the forests to shrink, thus giving rise to expanding savannas. It was during this period of climatic change, around 5-10 million years ago (Sibley & Ahlquist, 1984; Takahata et al. 1995), that the descendants of Ramapithecus or Gigantopithecus or Ankarapithecus or Ouranopithecus, or some other primate-pre-hominid, underwent further evolutionary metamorphosis and gave rise to a variety of more advanced hominids, the descendants of which would come to include Australopithecus, H. habilis, H. erectus, and eventually Homo sapiens sapiens--an evolutionary advanced being who would soon dominate and then threaten a good part of the planet's multiple life forms with death and extinction.

AUSTRALOPITHECUS & HOMO HABILIS

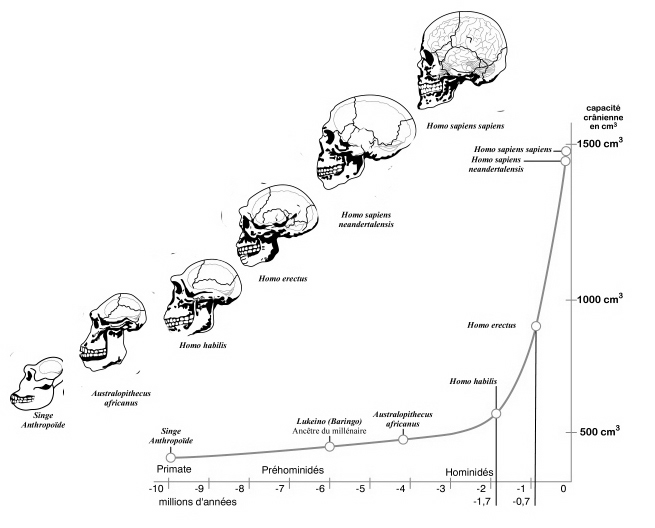

It may have been as recently as 5 million years ago that hominoids and hominids diverged from a common ancestor (Sibley & Ahlquist, 1984; Takahata et al. 1995), with the first pre-humans, comprising over a half dozen species of Australopithecus, emerging soon thereafter.

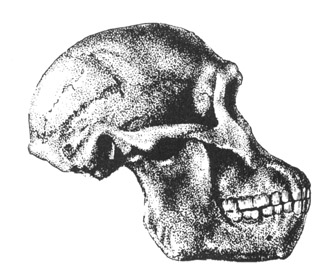

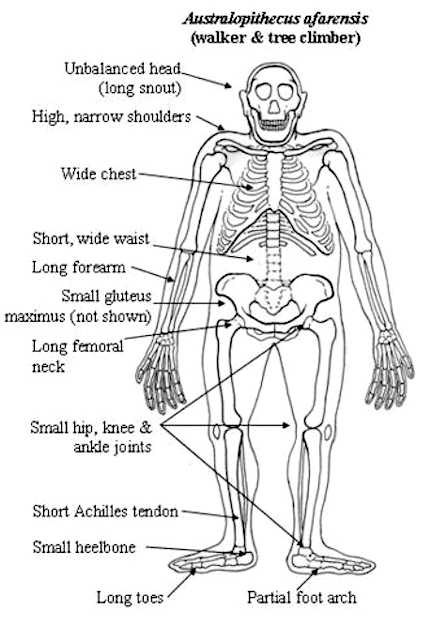

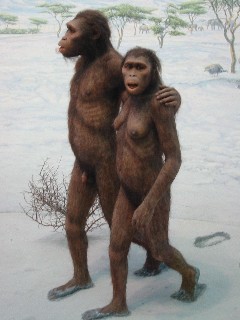

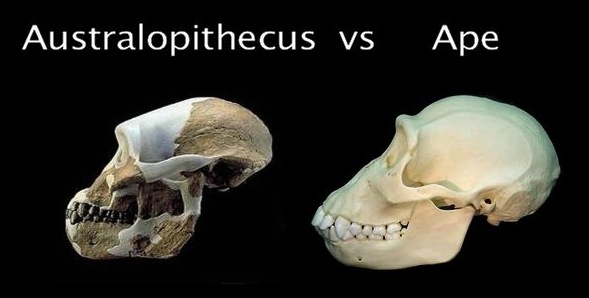

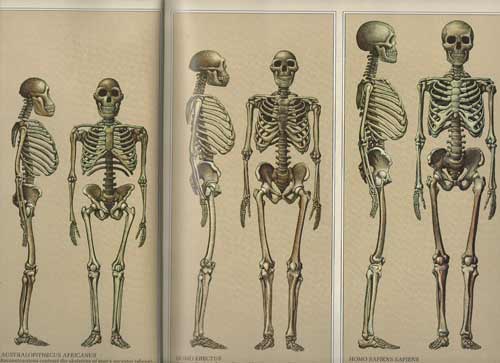

Australopithecus, however, was in many respects more ape than human, especially in regard to head and brain size (Conroy, 1998), their small semi-circular canals (inner ear), robust body build, conical chest, and curved feet. In fact, although Australopithecus (afarensis/africanus) had acquired the ability to walk on two legs and was well on the way to becoming human (e.g. Howells, 1997; Johanson, 1980; Johanson & Shreeve, 1989; Leakey, 1981), this pre-human was built like a chimpanzee, and retained chimp-like capabilities and limitations, including an ape-like maturational growth rate, and a propensity for climbing, eating in, and perhaps sleeping in trees (Fleagle, 1988; Stern & Susman, 2013; Wood, 2004). Similar traits may have been possessed by early H. habilis.

Like chimpanzees, it can also be assumed that Australopithecus (as well as H. habilis) employed elaborate vocalizations and facial, hand, arm, and body gestures to communicate. They likely developed long lasting sibling and mother-infant relationships, and used a variety of strategies for achieving dominance and forming coalitions. Australopithecines (and H. habilis) probably greeted their own kind with hugs, pats, and kisses, would hold and shake hands, engage in long periods of mutual grooming, seek reassurance by embracing, and were probably willing to risk their lives to help family members who were in distress or danger.

As is characteristic of chimpanzees (e.g., de Waal, 1989; Goodall, 1986, 2010; Nishida, 2010; Wrangham et al., 2012), female Australopithecus/H. habilis likely spent considerable time socializing and engaged in prolonged child care, with mother-son and especially mother-daughter bonds lasting a lifetime. Incessant mutual vocalizing and prolonged daily food gathering activities were probably characteristic (Joseph, 2011e).

By contrast male Australopithecines (and H. habilis) were probably more independent, though like chimps, they likely formed coalitions, as well as hunting or raiding parties in which they would kill other animals (White et al., 2011) or hominids from adjacent troops; and, on occasion, each other (Dart, 1949).

Australopithecus (as well as H. habilis) were sexually dimorphic, with the female weighing half as much as the male (Howells, 1997; Johanson & Shreeve, 1989; Leakey, 2004). All larger sized primates are sexually dimorphic and tend to live in multi-male, multi-female groups (Fedigan, 2012), with males competing for access to estrus females who may mate with numerous males. By contrast, smaller and similar sized primates are more likely to be monogamous (Fedigan, 2012). Hence, we can assume that monogamous sexual relations had not yet been established with the emergence of these pre-humans (see chapter 8).

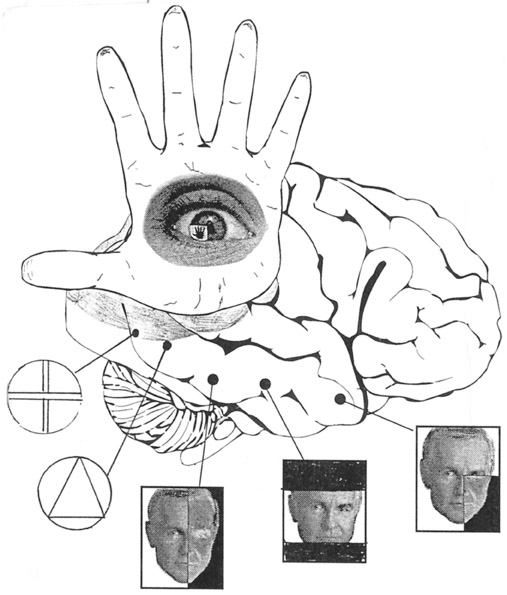

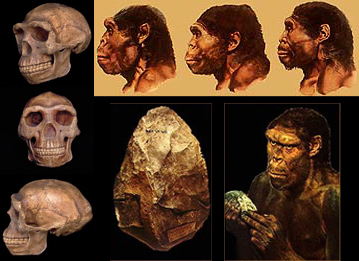

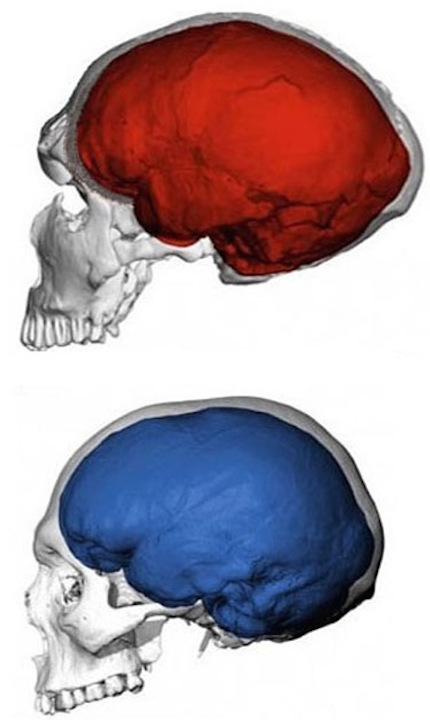

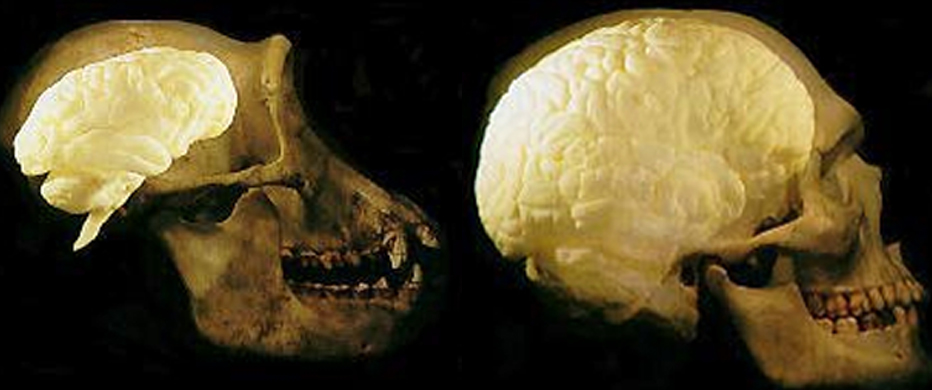

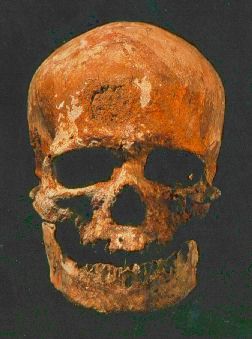

Modern Human vs Australopithecus Brain

It should be stressed, however, that there were numerous species of Australopithecus (as well as H. habilis), who shared the planet simultaneously from around 4 million to 2 million years B.P. In fact, Australopithecus (and H. habilis) appears to have "evolved" multi-regionally, emerging in Africa (see Grine, 1988; Leakey & Walker, 1988; Skelton & McHenry 2012) as well as China and Java (reviewed in Barnes, 1993). However, over the ensuing three million years, some species of Australopithecus died out whereas the descendants of others underwent a step-wise and progressive "evolution" with increasingly human-like species replacing their more primitive ancestors.

Hence, Ardipithecus ramidus, "ground ape" (the remains of which are 4.4 million years old), was joined and/or replaced by A. anamensis (who emerged 4 million years B.P.), and A. anamensis was joined and/or replaced by A. afarensis (3.6 million years B.P.), who in turn was succeeded by A. africanus (3 million years B.P.), who in turn was joined and/or replaced by A. garhi (around 2.5 million years B.P.), who was then joined and then replaced by early H. habilis (2.2 million years B.P.).

However, various subtypes of H. habilis also appeared in Africa, as well as China (Dragon Hill) and Indonesia (reviewed in Barnes, 1993; Howells, 1997). Hence, H. habilis (the handy man) seems to have evolved multi-regionally, perhaps as descendants of various species of Australopithecus who may have also evolved multi-regionally.

It must be emphasized, however, that there is no general or widespread agreement as to the various possible phylogenetic relationships shared by the wide variety of Plio-Pleistocene hominids so far discovered (Leakey, 2004; Skelton & McHenry 2012). Moreover, despite the apparent step-wise progression that appears to have led from A. anamensis to A. africanus to A. garhi (the remains of which were in fact found in the same fossil rich Afar Triangle where skeletons of A. afarensis were discovered), and although Australopithecus was later joined by and then succeeded by H. habilis, there is no general agreement and it is not yet established if present-day humans descended from Australopithecus or H. habilis, or even if H. habilis descended from Australopithecus (Grine, 1988; Howells, 1997; Johanson & Shreeve, 1989; Leakey & Walker, 1988; Leakey, 2004; Skelton & McHenry, 2012).

However, it has also been proposed that many of the supposed different subtypes of Australopithecus and/or H. habilis (e.g. H. rudolfensis, H. ergaster) and/or H. erectus may have evolved from different ancestral species, and that each in turn has given rise to separate branches of the human race which then evolved multi-regionally, including those which long ago became extinct, such as the Neanderthals (see chapter 4). For example, in 1863, Carl Vogt argued that racial differences "leads us back not to a common stem, to a single intermediate form between man and apes, but to manifold lines of succession, which were able to develop, more or less within local limits, from parallel lines of apes."

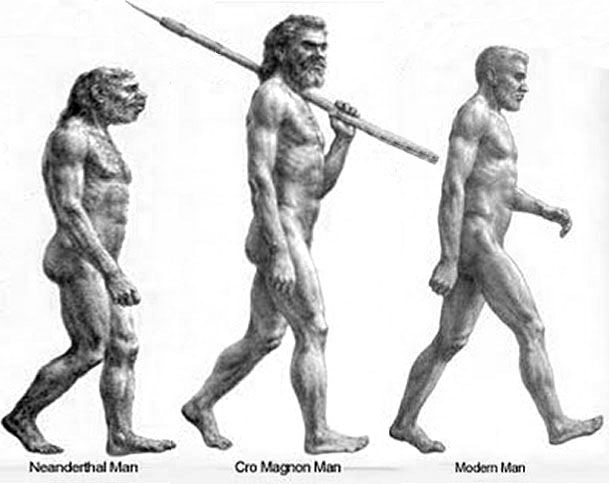

A more neurologically oriented view has been proposed by MacLean (2010). As based on enlargements in the frontal portion of the cranium, MacLean (2010) argues that modern humans (including the Cro-Magnon) may have descended from Homo habilis, who he believes descended from Australopithecus africanus, whereas Neanderthals evolved from a distinct branch of H. erectus who evolved from Australopithecus robustus. Indeed, the existence of the Neanderthal people, and the fact that they evolved separately but then coexisted in Europe and/or the Middle East with "early modern" and anatomically "modern" Upper Paleolithic peoples, can be considered recent proof for multi-regional evolution.

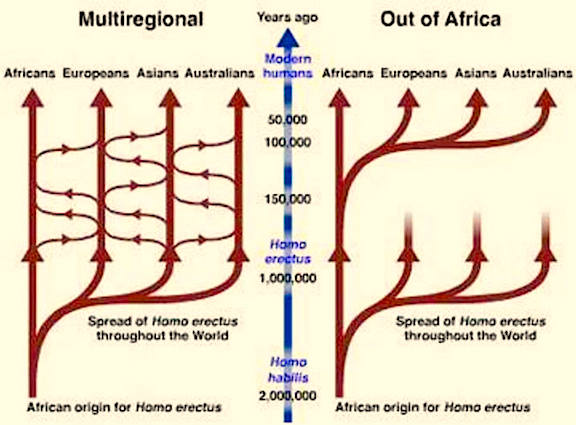

Some scientists, however, propose that it is only the descendants of H. erectus who evolved "multi-regionally," and that commonalities between present day races and previous species of humanity are due to interbreeding. Indeed, interbreeding is a likelihood as so many divergent species coexisted for tens of thousands of years, including Australopithecus, H. habilis, and H. erectus, and then later, H. erectus, archaic, "early modern" and anatomically "modern" Paleolithic H. sapiens sapiens. Yet others argue for a direct line of descent, with all species of humanity originating in Africa from a single ancestor and then radiating outward, displacing and killing off those previous species who immigrated before them. The evidence supporting the "multi-regional" vs the "out-of-Africa" replacement arguments is discussed in chapters 4 & 5 and will be reviewed below.

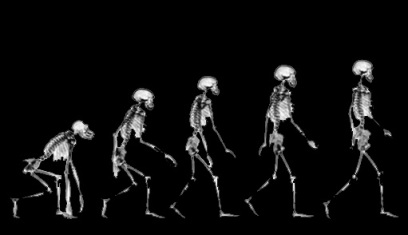

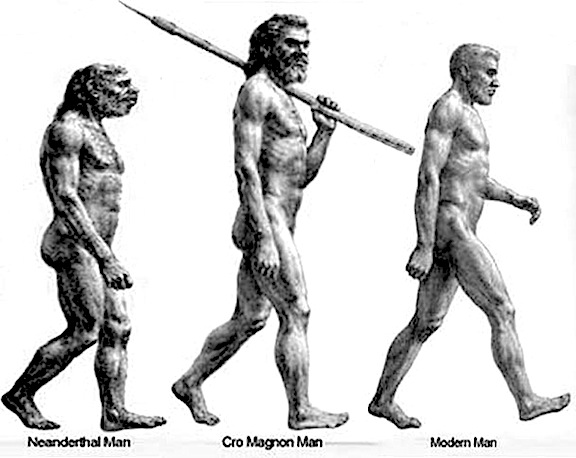

Unlike those who had come before them, Australopithecus and Homo habilis were extremely advanced for they had learned to stand on their hind legs and to walk in an upright manner (Howells, 1997; Johanson & Shreeve, 1989; Leakey, 2004). Similarly, although curved and still used for climbing, their feet evolved so as to better accommodate standing, and they tended to walk and run on two legs rather than on all four as do apes and monkeys. In consequence, the arms and hands ceased to be weight bearers and were freed of the necessity of holding or hanging on to a tree branch in order to move about. In addition, the fingers and thumb underwent further modification and functional elaboration and they gained the ability to not only hold and grasp, but to explore and manipulate objects.

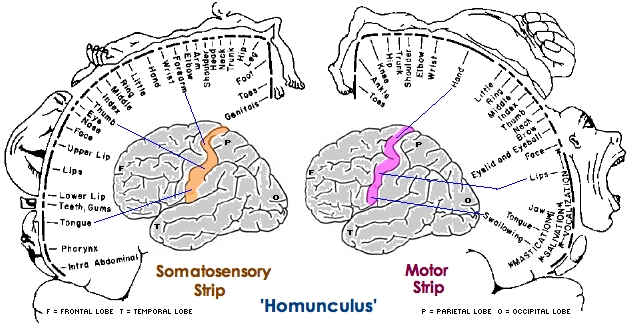

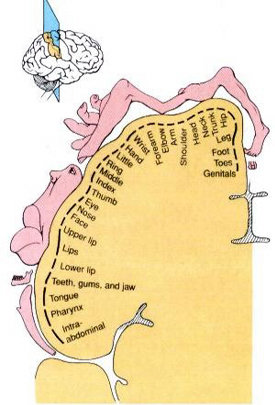

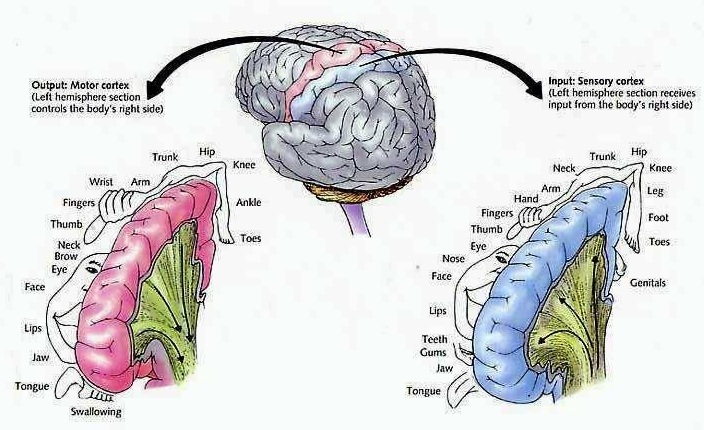

For example, by the time H. habilis had come to wander the Earth, the thumb had become longer and stronger, which enabled them to engage in complex acts involving a refined precision grasp; e.g. the construction and utilization of simple tools (see Hamrick & Inouye, 1995; McGrew, 1995; Susman, 1995 for discussion of supportive and contrary evidence). Correspondingly, the areas of the neocortex devoted to the representation of the hand, and in particular the fingers, increased (Richards, 1986), which also improved versatility in fine motor control and communication.

Because the hands and fingers had become more versatile they could also be used for tool construction and elaborate signaling among other primates so as to convey feelings of anger (such as by making a fist, pounding a tree, angrily swinging or throwing a tree branch), a desire to share (such as begging for a small piece of meat), to indicate friendliness or the desire to form a social bond, or to even soothe an angry neighbor by grooming his coat. In this regard, the neocortex subserving the manipulation of the hand (the superior parietal lobe) became increasingly adapted for social-emotional communication as well as exploration and manipulation.

As based on the fossil evidence, both Australopithecus and H. habilis were capable of creating and manipulating simple stone and wooden tools, just as chimpanzees use rocks, twigs, and leaves as tools. In fact, antelope leg bones which show the signs of cutting and pounding by a sharp and blunt rock were found in association with the remains of A. garhi, which in turn is evidence of deliberate tool use (White et al., 2011).

However, only the remains of H. habilis have been found in association with stone tools, some of which were discovered in the Omo valley of Ethiopia from a site dated to 2.4 million years, whereas yet others were found at Kada Gona and dated to 2.1 million years (Gowlett, 1986; Lewin, 1981). These stone tools, however, were little more than rocks that had been banged together in order to arrive at a desired shape, e.g., pebble choppers and stone flakes used for cutting. This tool making tradition has been referred to as the Oldowan and prevailed for a million years.

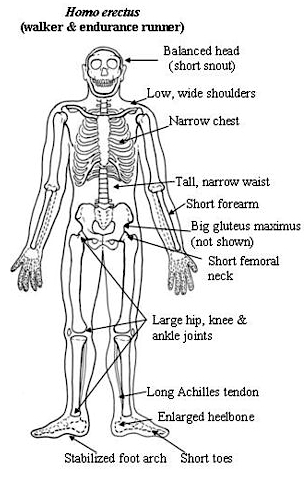

HOMO ERECTUS

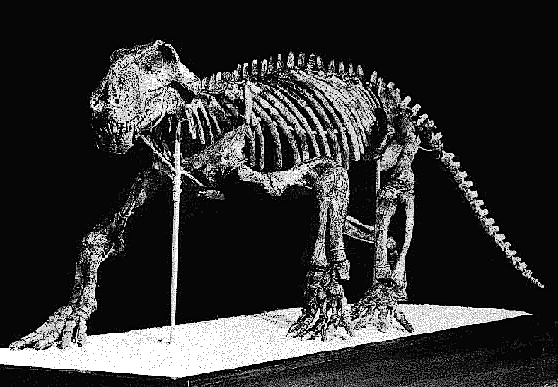

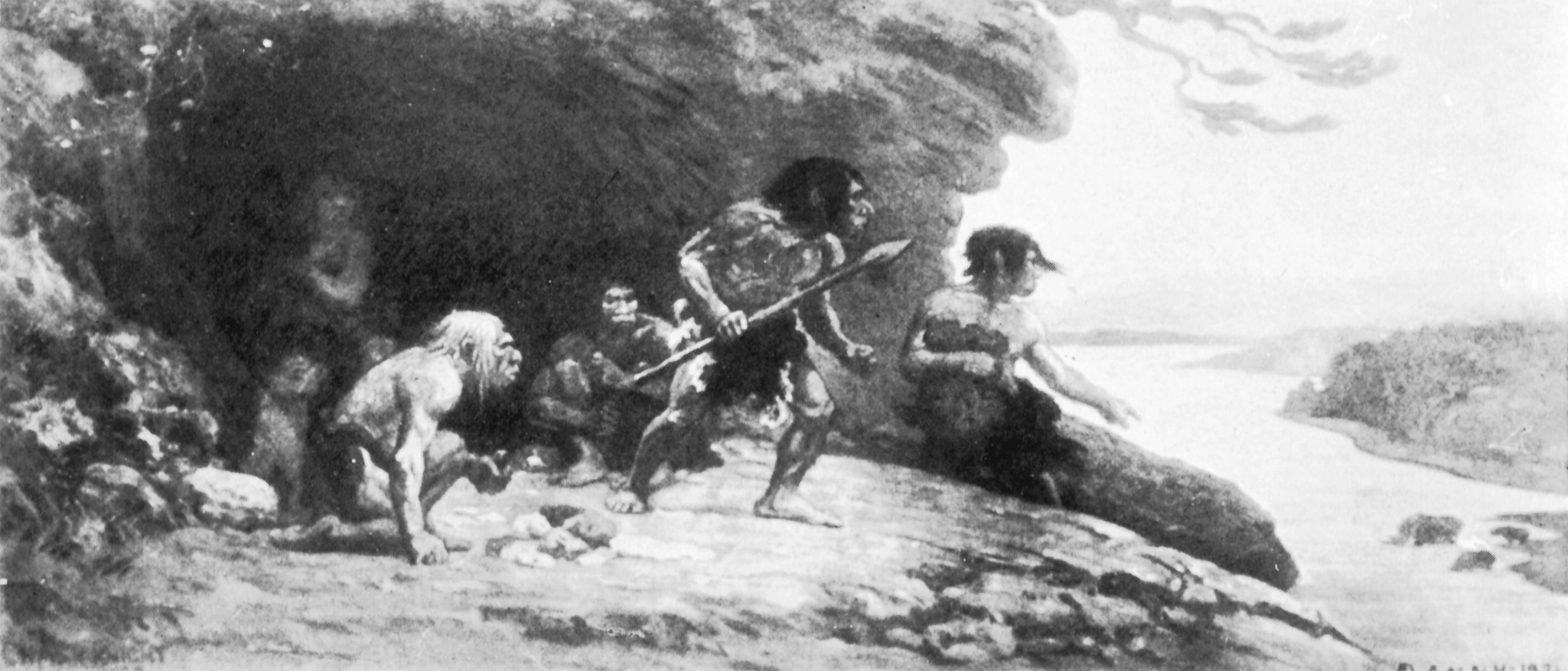

Following on the heels of Australopithecus and briefly sharing the planet with Homo habilis were a wide range of quite different individuals collectively referred to as Homo erectus. Homo erectus were big and robust, with thick browridges, large teeth, and bulging shoulder muscles (Day, 1996; Potts, 1996; Rightmire, 2010), and ranged throughout Africa, Europe, Russia, Indonesia and China from approximately 1.9 million until about 300,000 years ago, with a few isolated populations possibly hanging on on the island of Java until 27,000 years B.P. (Day, 1996; Potts, 1996; Rightmire, 2010; Swisher et al., 1996). Thus, H. erectus emerged almost immediately after H. habilis appeared upon the scene, such that they shared the planet simultaneously. Hence, H. erectus may well have been responsible for the demise of H. habilis and any remaining Australopithecines.

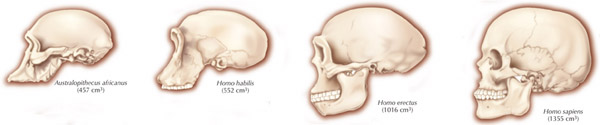

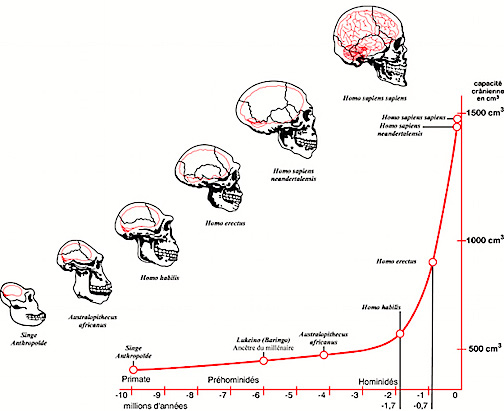

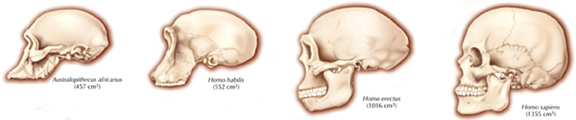

Nevertheless, early H. erectus was rather small brained, that is, as compared to modern H. sapiens sapiens, with a cranial capacity of about 800 to 937 cc (Tobias, 1971). It was not until the latter stages of H. erectus evolution that the brain became significantly enlarged, doubling in size as compared to that of Australopithecus (440 cc) and approaching within 15% of present-day humans (Conroy 1998; Potts, 1996; Rightmire, 2010; Tobias, 1971).

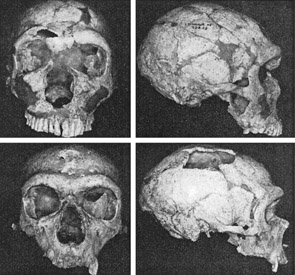

H. habilis vs H. erectus vs Modern Human Brain

Nevertheless, both early and late H. erectus were exceedingly intelligent and resourceful. H. erectus was the inventor of the hand ax and the Acheulean tool making industry, and may well have been constructing camp sites 1.8 million years ago. As with earlier hominids, these camps were established near rivers and lake shores, and served as semi-permanent as well as transitory sites where stone tools could be constructed and animals butchered (e.g., Isaac, 1971, 1981, 1982; Leakey, 1976, 1978).

Hence, in contrast to chimps and other primates who eat as they range and forage, H. erectus was apparently returning to the camp site with food that had been gathered, scavenged, or slaughtered and butchered, so that it could be shared with their compatriots. They also utilized various earth pigments (ochre) for perhaps cosmetic or artistic purposes. In this regard, perhaps such individuals were beginning to experiment with individual creative and artistic expression.

It was during the later stages of Homo erectus development, about half a million years ago, that the human brain appears to have become significantly enlarged (Rightmire 2010; Tobias 1971), with some individuals sporting craniums with a capacity of up to 1100 cc. Likewise, around 500,000 years B.P., they were living in crude shelters and had established semi-permanent home bases (Clark & Harris 2013; Potts 1984). It was also during this time that big game hunting had its onset. Moreover, it may have been during this later period that the H. erectus female finally lost her estrus and became sexually receptive at all times (Joseph, 2011e; see chapter 8). The loss of the estrus cycle and full time sexual receptivity no doubt contributed significantly to the development of long term male-female relationships, which in turn may explain the development of the semi-permanent home base (Joseph, 2011e; see chapters 7, 8).

However, because a bigger brain comes in a bigger head, trends in the division of labor became more pronounced and an additional divergence in the mind and brain of man and woman may have resulted as males and females became increasingly specialized to perform certain tasks (Joseph, 1993, 2011e; chapter 7). Males increasingly engaged in big game hunting which coincided with improved visual-spatial perceptual and motor functions involving predominantly the right hand, whereas females increasingly spent a considerable amount of time engaged in child care, domestic tool making, and food gathering which coincided with improvements in bilateral fine motor control and which contributed to the development of language (Joseph, 1993, 2011e).

MULTI-REGIONAL EVOLUTION OF H. ERECTUS

Presumably, H. erectus is the common ancestor for Neanderthals and modern humans. However, the fossil evidence suggests that H. erectus "evolved" multi-regionally (see chapter 4), and that Neanderthals and different subpopulations of "modern" humans, "evolved" from different subpopulations of H. erectus who were dwelling in Africa, the Caucasus, Indonesia, and China.

For example, the jaw from an H. erectus has been discovered in the Caucasus (Georgian Republic) dated to 1.9 million years (Howells, 1997). The skeletal remains of H. erectus (and associated stone tools) have also been discovered in Java, Indonesia (Pithecanthropus erectus) and near the Solo River (e.g. Solo Man, Java Man)--sites dated from 1.8 million to 700,000 B.P. (respectively). In addition, the remains of H. erectus (H. erectus pekinensis) have been discovered in Northern China (e.g. Peking Man) and in Zhoukoudian, Yuanmou and Xihoudu, China--sites dated from 1.5 million years B.P., to 800,000 to 750,000, to 500,000 B.P. (Jia, 1975, 1980; Jurmain et al. 2010; Stanley, 1979, 1981; Wu & Wang, 2013).

Given that the earliest evidence of H. erectus (Homo ergaster) in Africa is dated to around 1.6 million years B.P. (Leakey & Walker, 1988), it is not likely that "Georgia Man," "Solo/Java Man" and "Peking Man" migrated out of Africa. In fact, ancestral forms which include the remains of Gigantopithecus as well as an H. habilis (Jia, 1980; Munthe et al. 2013) have been discovered in Java and China (Dragon Hill). Of equal importance, stone tools dated to 2 million years were discovered at Dragon Hill, China. However, it was not until almost a million years later that H. erectus finally invaded Europe, as the remains of H. erectus have been found in Italy from a site dated to 800,000 B.P., whereas others have been found in Southern England (Howells, 1997).

Hence, contrary to the out-of-Africa scenario, it appears that H. erectus evolved multi-regionally, in Java, Asia, the Caucasus, and Africa, and/or immigrated from these distant lands to Africa (and then lastly, Europe) where H. habilis was still the dominant hominid. Indeed, if H. erectus did emerge first in China, Java, Georgia, and so on, and then spread to Africa, this may explain why the African H. habilis managed to hang on for almost half a million years before becoming extinct around 1.6 million years ago; i.e. at about the same time that H. ergaster appeared upon the scene.

Of course, the above scenario, although supported by the fossil evidence, is completely contrary to the Darwinian notion of a single line of descent. Moreover, it should be stressed that many of those who support the "multi-regional" view of evolution hold a much more conservative view. That is, although arguing that "modern" humans evolved multi-regionally, many of these scientists accept the notion that H. erectus first emerged in and migrated out of Africa.

MULTI-REGIONAL EVOLUTION

About 500 thousand years ago the first primitive and archaic Homo sapiens began to appear in increasing numbers in North Africa, the near Middle East, and even southern England. Wherever they wandered they proved to be far more intelligent and resourceful as compared to the last remnants of Homo erectus who over the next two hundred thousand years were eradicated or simply died out as a species.

It has been logically surmised that H. sapiens ("the wise man") evolved from H. erectus. However, whether archaic humans first "evolved" in Africa, Asia or Indonesia is subject to considerable debate. As noted above, there is considerable evidence which suggests Australopithecus, H. habilis, and H. erectus "evolved" multi-regionally.

Moreover, there is evidence which also indicates that not all H. sapiens evolved at the same time, in the same place, or from the same stock of H. erectus (Frayer et al. 1993; Moritz et al. 1987; Wolpoff, 1989); that is, it appears that H. sapiens evolved "multi-regionally."

As argued by Frayer, Wolpoff, Thorne, Smith, and Pope (1993, p. 17) "the best evidence of the fossil record indicates that modern humans did not have a single origin, or for that matter a number of independent origins, but rather it was modern human features that originated--at different times and in different places. This view also implies that certain features that distinguish some modern groups were developed very early in our history, after the exodus from Africa. It is also important to recognize that not all features common to modern humans emerged at precisely the same time."

Hence, just as there is evidence which suggests that different subspecies of Australopithecus, H. habilis, and H. erectus may have "evolved" from separate stocks of antecedent species, there is fossil evidence that "archaic" Homo sapiens may have "evolved" from at least three different branches of H. erectus as recently as 400,000 years ago, each of which in turn gave rise to at least three branches of human beings who first appeared and ranged throughout Africa, Europe (e.g., Petralona, Greece; Hungary; Germany), China (Hupei Province), the Middle East, and India. Moreover, there is evidence of evolutionary progression in each of these distant lands, for the remains of more advanced and modern appearing archaic ("early modern") H. sapiens have been discovered in China as well as in East Africa from sites dated to 130,000 B.P. and 120,000 B.P., respectively (Barnes, 1993; Butzer, 1982; Grun et al. 2010; Howells, 1997; Rightmire, 1984). Hence, there appears to be evidence of multi-regional descent.

OUT-OF-AFRICA, "EVE" AND REPLACEMENT

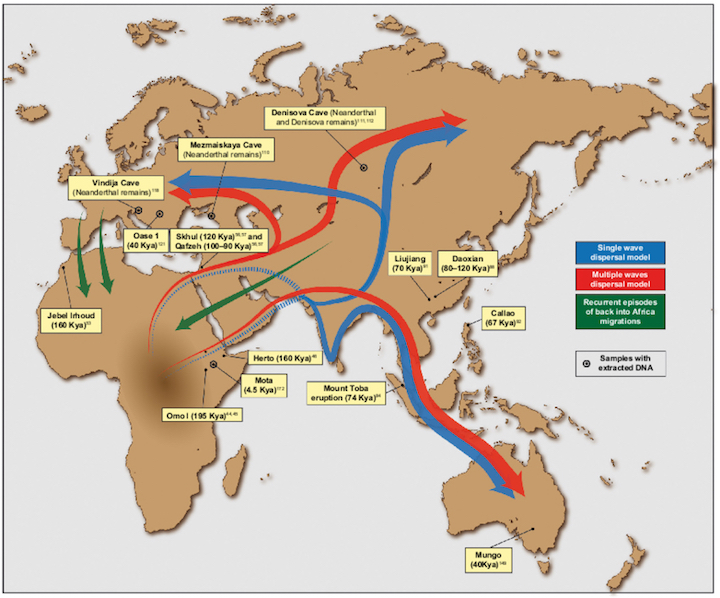

The "multi-regional" view is rejected by many scientists who hold to the Darwinian notion of a single line of descent. Although most also admit that European archaics (Neanderthals) evolved separately (which supports the multi-regional view), these scientists argue that modern humans originated in Africa, perhaps about 250,000 years ago, and then radiated outward, replacing those who came before them, including the European Neanderthals. That is, according to the out-of-Africa scenario, successive waves of hominids originated in Africa and then spread to China, Indonesia, etc., only to be followed by more advanced species who also radiated outward, killing off those who came before them. Presumably this out-of-Africa pattern of dispersal and invasion was initiated by and then followed by successive species of Australopithecus, who were then followed (and killed off) by H. habilis, who were then followed (and killed off) by H. erectus, who were then followed (and killed off) by archaic H. sapiens, all of whom originated in Africa and all of whom must have followed the same well traveled route out of Africa to parts unknown.

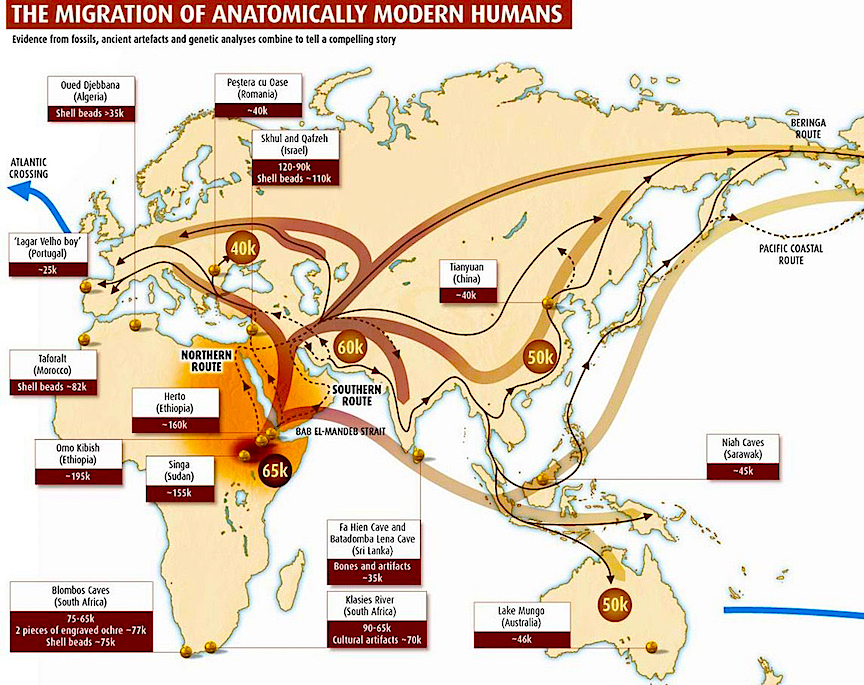

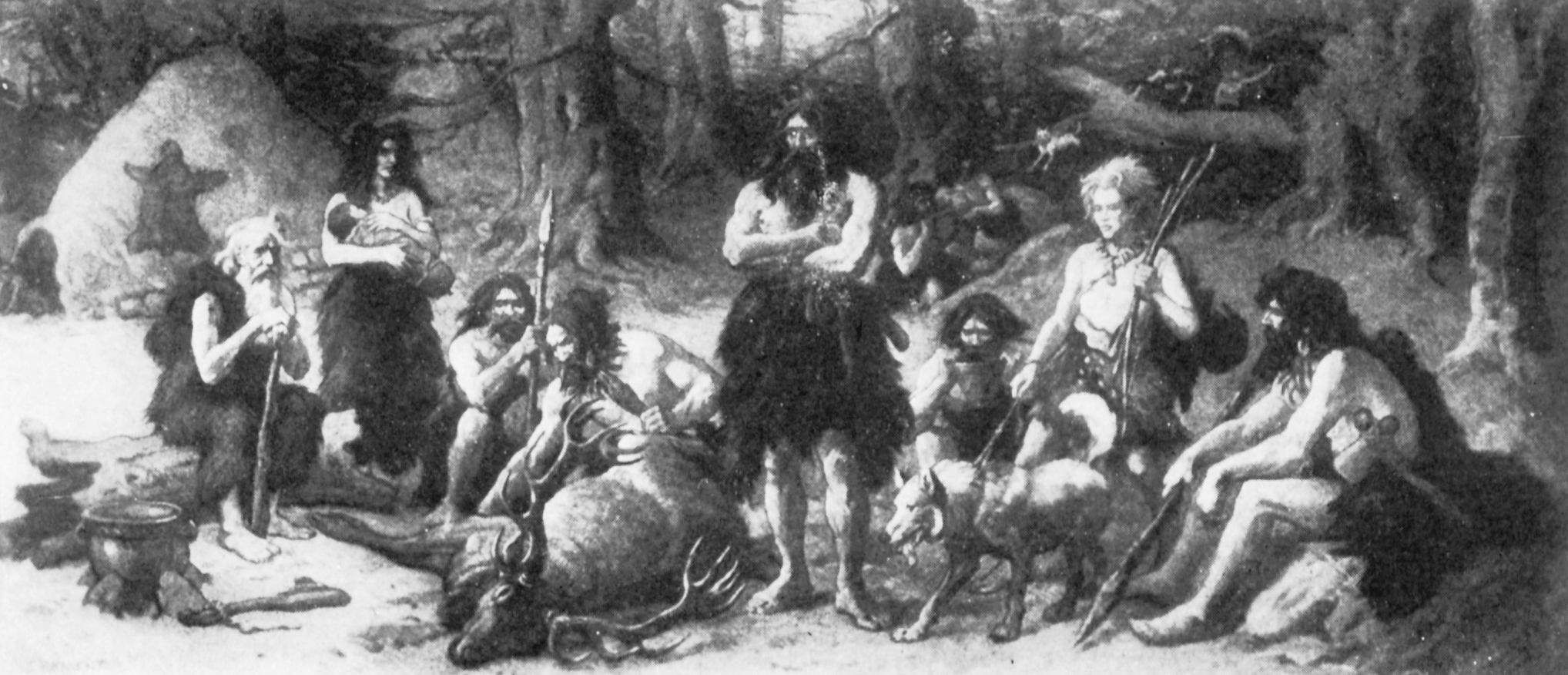

Presumably, this same pattern of dispersal and invasion was followed by "early modern" and "modern" Paleolithic H. sapiens sapiens ("the wise man who knows he is wise"). According to the "replacement" theory, in venturing out of Africa and invading the Middle East and Europe, "early modern" and "modern" Paleolithic Homo sapiens sapiens "replaced," perhaps by killing off or out-competing, the more archaic H. sapiens (e.g. Neanderthals) who already dwelled in these coveted lands. Hence, presumably, due to population growth and other factors perhaps related to changes in culture, tool making, climate or availability of game animals, "early modern" and then anatomically "modern" H. sapiens sapiens eventually pushed their way up through Northeastern Africa, and then into the Middle East, and then into Europe, China, Indonesia, Australia, and the Northeastern and Southwestern Americas, and so on, eradicating "inferior" species as they went, such that by 50,000 to 40,000 B.P. the Cro-Magnon and "modern" H. sapiens had come to occupy a considerable part of the Earth.

Hence (and broadly speaking), whereas the "multi-regional" view presents humans as evolving in multiple places over multiple time periods from multiple ancestral species, the "replacement" theorists argue that all hominids including "modern" humans evolved in Africa and, in proliferating and invading other lands, eradicated those who had come before them. Moreover, consistent with the belief in a single line of descent, the "replacement" theorists have argued, as based on mitochondrial DNA, that all modern humans have descended from a single female ancestor in Africa about 250,000 years ago, i.e. the "Eve" hypothesis. Specifically, based on an analysis of a small fragment (610 base pairs) of the mitochondrial genome (which consists of about 16,500 base pairs), taken from 189 individuals, Stoneking and Cann (1989; Vigilant et al. 1991) have argued, based on the varying patterns in sequencing ("types"), that there is greater diversity within Africa than outside Africa. From this data they concluded that all humans must have descended from African ancestors.
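
For a sense of scale, the size of the analyzed fragment can be expressed as a fraction of the whole mitochondrial genome. This is a rough calculation that uses only the two figures quoted in the text (610 base pairs and approximately 16,500 base pairs); the rounding is approximate:

$$
\frac{610}{16{,}500} \approx 0.037
$$

That is, the sequenced fragment corresponds to roughly 3.7% of the mitochondrial genome.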

However, the DNA evidence and "mutation rates" upon which this theory has been based have been discredited and shown to be statistically invalid (Templeton, 2012; Wolpoff & Thorne, 1991). Indeed, human mitochondrial DNA consists of only 37 genes, circulates independently within the cell's cytoplasm, is inherited only from the maternal line, and there is no evidence of evolution in the mitochondrial genome, although deleterious mutations are not uncommon. In fact, others have found, using the same data, that all humans could have also descended from ancestors who lived in New Guinea (Ruvolo & Swafford, 1993).

As per more recent data provided by Chu et al. (1998) regarding the origins of modern Chinese, it is noteworthy that although these researchers concluded that "genetic evidence does not support an independent origin of Homo sapiens in China," they also found that the peoples of Northern and Southern China clustered into distinct regional genetic populations that could be divided into even smaller separate genetic groups. Moreover, the East Asian populations they studied were genetically most closely related to "Native American" Indians, followed by Australian aborigines and New Guineans, and most distantly related to Africans. Hence, this data could be interpreted to mean that anatomically "modern" humans originated in Australia or New Guinea, then migrated to southern Asia, and then the Americas, whereas Africans remained in Africa.

The out-of-Africa model demands a single line of descent, which in turn is based on the single seed theory of the origin and evolution of life on Earth. However, as based on genetics alone, a single line of descent appears to be unlikely (see chapters 3, 4). For example, if a new species were to emerge in a single line of descent (due to a chance mutation), who would that individual breed with so as to perpetuate the "new" species?

In order to breed, and in order to prevent the "mutation" from being eliminated from the genome, and/or so as to ensure that an intermediate (i.e. regressed) version of the new species would not be produced following breeding, an unrelated male and female, that is, a potential breeding pair, would both have to experience this "random" "mutation" (that unlike all other mutations just happens to be adaptive) so as to pass on this mutation to their children, who in turn would have to find nearby, unrelated mates who also possess the very same randomly acquired mutation. A chance scenario such as this, of course, borders on the ridiculous and is statistically improbable; that is, unless this change in the genome was not random or a mutation, but was in fact programmed into the DNA of this species. Indeed, if an unrelated male and female both evolved this "mutation" so as to breed, then this would be evidence for multi-individual and thus multi-regional evolution, for the same exact genetic events would then be expected to occur throughout the species almost regardless of where they lived (that is, depending on the environment, as the environment acts on gene selection--genes which exist prior to their selection). It has been argued (see chapters 3, 4, Joseph, 1997) that it is precisely because of a genetic commonality, that is, the existence of shared DNA-based genetic instructions, that new members of the species "evolve" multi-regionally, and thus come to possess almost identical physical and biological attributes.

HOMO SAPIENS SAPIENS AND MULTI-REGIONAL EVOLUTION

Although the "Eve" hypothesis requires it, there is no evidence to suggest that "modern" or even "early modern" appearing Paleolithic humans had evolved by 250,000 years ago. Moreover, the first evidence for the evolution of "early modern" H. sapiens appears not in Africa but in China around 130,000 B.P., with similarly advanced species appearing in East Africa 10,000 years later. Moreover, the first evidence for the emergence of "modern" H. sapiens sapiens is not found in Africa, but in Australia. As based on thermoluminescence and optical dating techniques, "modern" H. sapiens had arrived in Australia at least 60,000 years ago and maybe as long ago as 75,000 years B.P. (Rhys Jones, cited by Morell 1995), 25,000 to 40,000 years before "modern" H. sapiens sapiens had emerged in Africa.

In fact, although separated from the mainland by over 100 miles of water, "modern" H. sapiens sapiens not only appeared in Australia, perhaps as long ago as 75,000 years B.P., but had established numerous settlements, were using ocher, and were fashioning complex tools as early as 60,000 B.P. These included grooved "waisted blades" which could be bound to a handle.

Given that these early Australians would have also had to sail almost 100 kilometers across open ocean in order to reach Australia (southern Asia being the closest land mass), considerable intellectual prowess and thus modernness is evident. In fact, these early Australians were engaging in widespread agriculture 60,000 to 50,000 years ago; clearing forests with fire so that fruit-bearing trees could be planted (Miller et al., 2011). Because they were destroying these forests and given their advanced hunting capabilities, more than 85% of Australia's land-dwelling megafauna became extinct almost simultaneously, 50,000 years ago (Miller et al., 2011). It would take 40,000 or more years before their African/Middle Eastern counterparts began to demonstrate similar capabilities.

Moreover, Asian "moderns" also appeared tens of thousands of years before their counterparts in Africa. In fact, after Australia, there is evidence that "modern" Paleolithic humans appeared in China by 67,000 B.P., and then Israel, Romania, and Bulgaria by 43,000 B.P., and then Iraq and Siberia by 40,000 B.P., and then Spain, France, and North East Africa by 35,000 B.P. (reviewed in Howell, 1997). In addition, there is evidence for modern human occupation in Brazil, Peru, Chile and North America as long ago as 50,000 to 30,000 B.P. (Bahn, 1993). In fact, there is evidence to suggest that humans appeared in the Americas perhaps 250,000 years ago--though this date is rejected by almost all anthropologists. Rather, what the evidence strongly indicates is that modern humans arrived in the Americas by 50,000 to 40,000 years ago, and this is based on linguistic analysis (e.g. Johanna Nichols of U.C. Berkeley), the analysis of "Native American" DNA (e.g. Theodore Schurr of Emory University) and the discovery of complex tools and human bones in Monte Verde, Chile.

Obviously, these and other findings are not consistent with the out-of-Africa scenario, as "modern" humans were emerging outside of Africa in advance of "modern" humans inside of Africa. Rather, the data instead support the multi-regional view and even the out-of-Asia view of evolutionary metamorphosis.

Hence, similar to the step-wise, world-wide pattern of multi-regional, multi-phyletic metamorphosis which has characterized the progressive emergence and increased complexity of plants and animals (see chapters 4 and 5), and as based on the available evidence, it appears that human "evolution" has unfolded multi-regionally in a step-wise, progressive fashion, with some lagging far behind and others being left behind altogether and becoming extinct.

Nevertheless, it must be stressed that those adhering to the "out-of-Africa" and the mainstream Darwinian position of a single line of descent, dispute, challenge and reject the evidence for multi-regional evolution, and have offered up numerous plausible explanations so as to discount these findings. Moreover, many also vehemently reject the notion of "progress" and dispute any and all evidence which indicates that there has been a step-wise progression in complexity over the course of evolution. As argued by Harvard paleontologist and popular science writer, Stephen J. Gould (1988, p. 319), "progress is a noxious, culturally embedded, untestable, nonoperational, intractable idea that we must replace if we wish to understand the patterns of history."

"MULTI-REGIONAL" EVOLUTION AND "REPLACEMENT"

Although the "replacement" vs the "multi-regional" theories appear to be at odds, as will be detailed below, these theories are (at least somewhat) mutually supportive, particularly if one considers that the "replacement" theory may accurately depict events that occurred "multi-regionally". For example, in Africa and Asia, pockets of each successive and more advanced species of Australopithecus may well have radiated outward and eradicated any and all contemporary ancestral species. And this same multi-regional pattern of evolution and "replacement" may have characterized the brief reign of H. habilis, who in turn was eradicated (multi-regionally) over the course of just a few million years by H. erectus, who also may have emerged multi-regionally and then radiated outward to cleanse the surrounding lands of any and all inferior ancestral species.

Likewise, based on the fossil evidence reviewed above, pockets of archaic, "early modern" and anatomically "modern" H. sapiens sapiens who "evolved" in the Far East, Asia and Africa, likely radiated and ventured outward from these pockets, in all directions; each successive species in turn "replacing" by killing off or out-competing, any and all inferior ancestral species of humanity who already dwelled in surrounding lands. In this regard, the "replacement" and "multi-regional" views of evolutionary development are complementary in that both views entail competition and the replacement of "inferior" species.

Indeed, it is exactly the above scenario which likely accounts for the demise of Neanderthals--a people whose very existence strongly supports if not verifies the multi-regional theory. However, as will be detailed below, "modern" Paleolithic H. sapiens sapiens (be they of African, Asian, Georgian, or Australian origin) were provided a competitive advantage over archaics including Neanderthals due to the anatomical and functional expansion of the frontal and inferior parietal lobe and the evolution of the angular gyrus. That is, although Neanderthals and other archaic humans may have possessed the DNA and thus the genetic potential to someday evolve into modern humans, and although there was likely some interbreeding, they were eradicated by a neurologically superior race of people, the Cro-Magnon.

THE EVOLUTION OF THE FRONTAL LOBE AND ANGULAR GYRUS

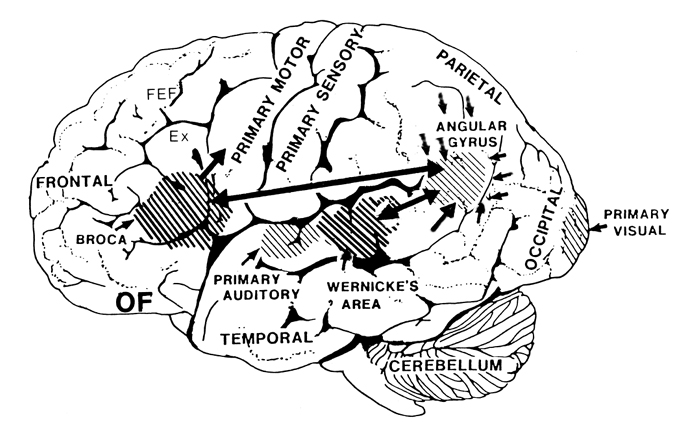

As will be detailed below, the frontal lobes and inferior parietal lobes have obviously expanded over the course of hominoid and human evolution. Hence, it has been theorized, based on cognitive, cerebral, and endocast comparisons, that the evolution and expansion of the frontal lobe and inferior parietal lobe, the angular gyrus in particular, have significantly contributed to the evolution of language, artistry, and tool and hunting technology, as well as the Middle to Upper Paleolithic transition and the eradication of the Neanderthals (Joseph 1993, 2011e).

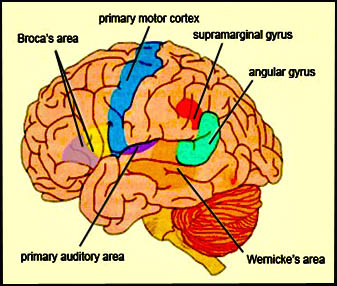

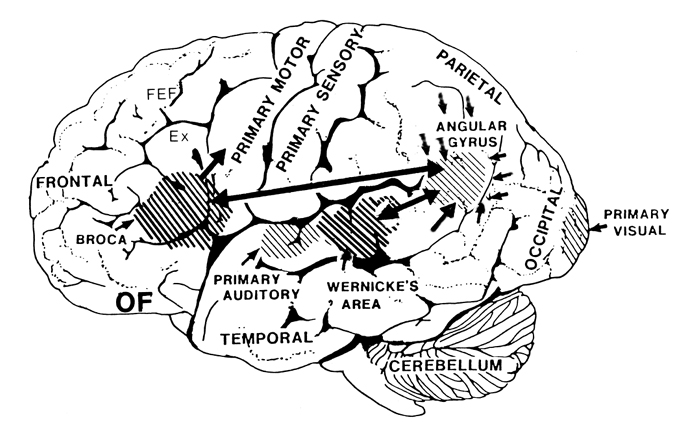

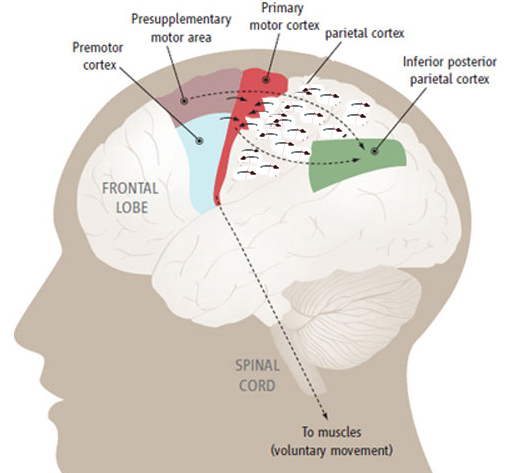

As is well known, the frontal lobes serve as the "senior executive" of the brain, cognition, and personality, regulating information processing throughout the cerebrum (Fuster 1997; Joseph 1986a, 2011a; Passingham 1993; Selemon et al. 1995; Shallice & Burgess 1991; Stuss & Benson 1986), including, via Broca's area, the expression of speech. Hence, the evolution and expansion of the anterior portion of the brain would necessarily confer greater cognitive, linguistic and intellectual capabilities upon those so endowed.

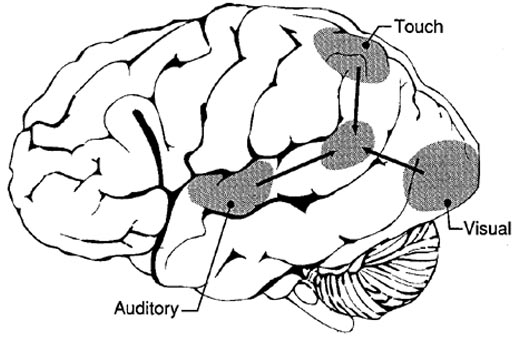

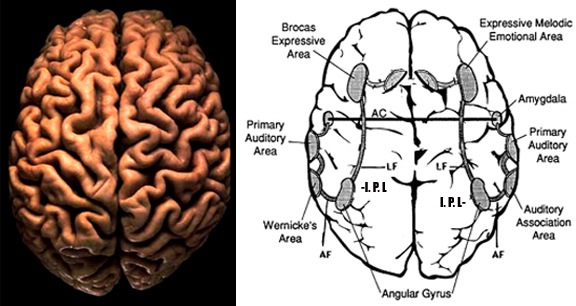

Likewise, the angular gyrus of the inferior parietal lobe (IPL) plays an important role in language, as well as artistry, tool use and manipulation (Joseph 1982, 1993, 2011e). Hominoids (and other non-human mammals) lack an angular gyrus (Geschwind 1965) and their tool-making capabilities are limited to hammering with rocks, and throwing or manipulating leaves, sticks, and twigs.

Likewise, the tool making tradition of H. habilis was exceedingly primitive, consisting of rocks that had been banged together in order to arrive at a desired shape. Hence, it can be concluded that H. habilis had not yet evolved an angular gyrus. Moreover, as the IPL/angular gyrus sits at the junction of the tactile, visual, and auditory association areas, and assimilates, sequentially organizes, and injects this material into the stream of language and thought, it can also be concluded (see below) that H. habilis had not yet evolved the ability to speak in a manner even remotely resembling the speech of modern humans--an impression that is also bolstered by their poorly developed (pre-) frontal lobe, a region that contains Broca's expressive speech area.

THE HOMINOID AND HOMINID FRONTAL LOBE

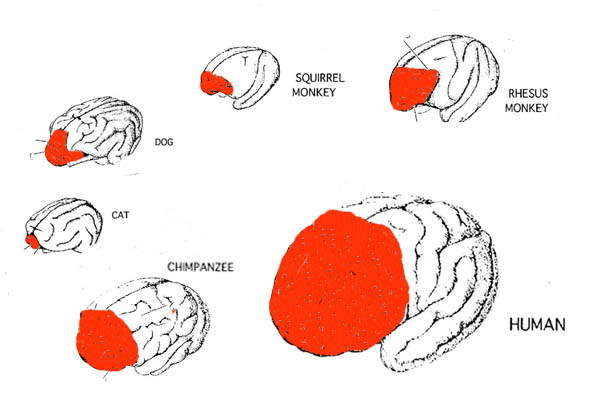

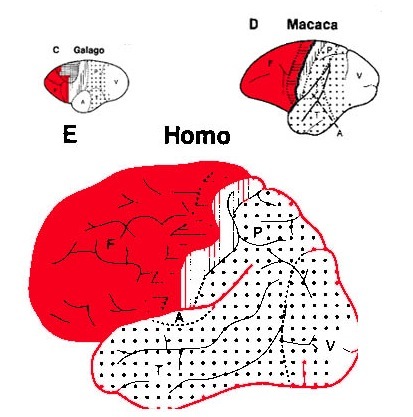

Over the course of mammalian evolution, the frontal lobes have significantly expanded in size and complexity, reaching their greatest degree of development in humans (Fuster, 1997). Moreover, it is apparent that in the course of human evolution, beginning with Australopithecus, the frontal lobes have continued to expand.

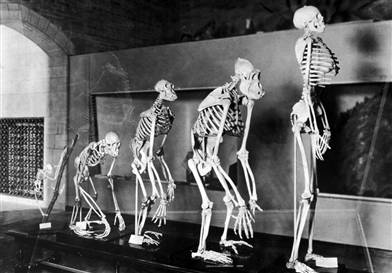

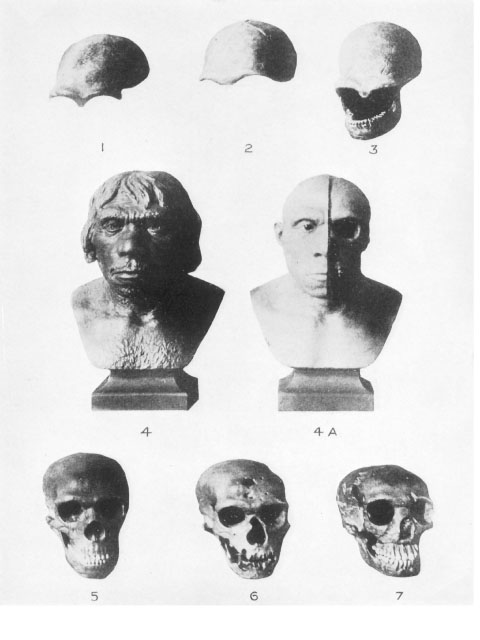

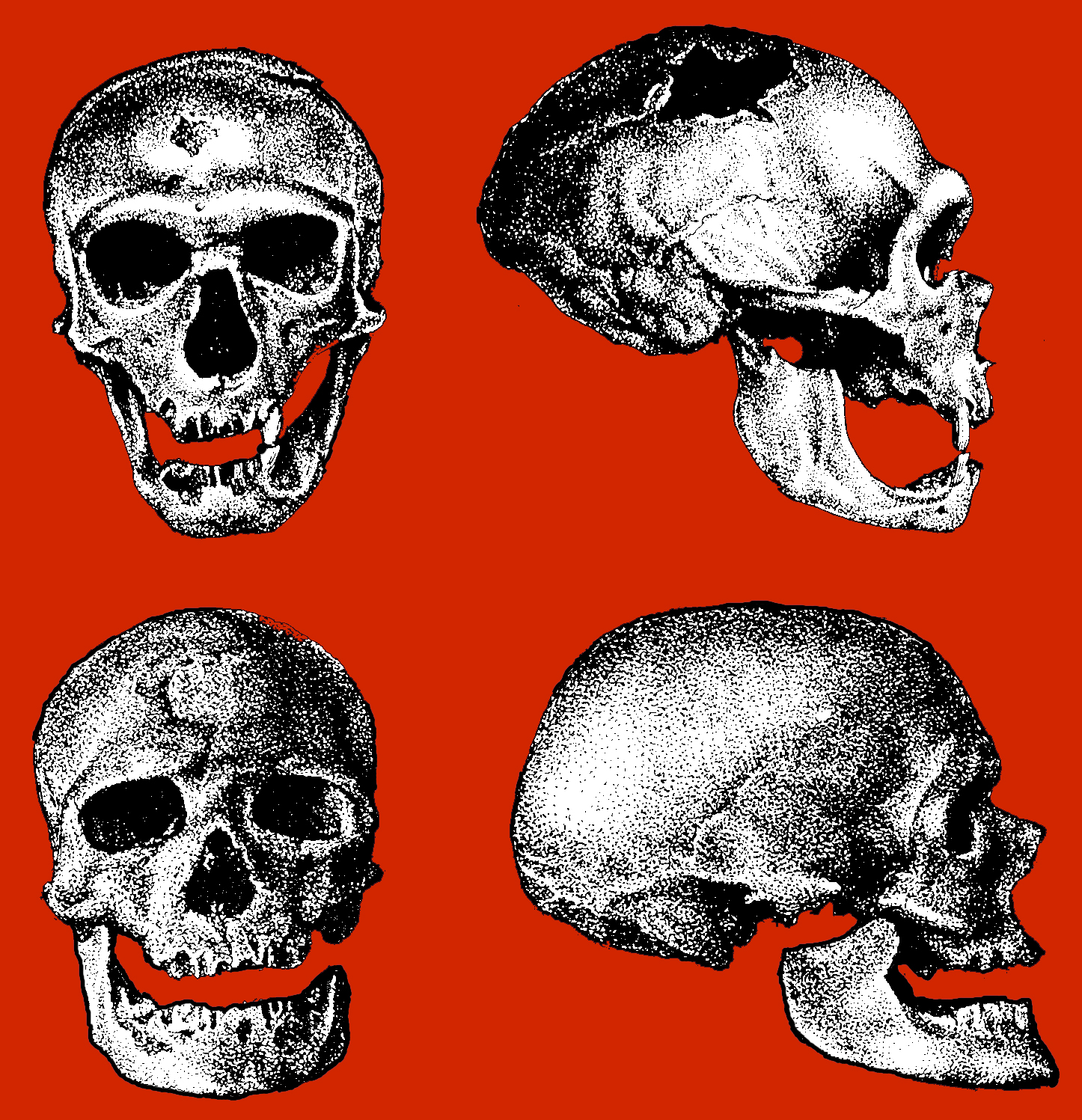

As noted above, hominids and hominoids (i.e. chimpanzees) apparently diverged from a common ancestral stock around 5 million years ago, with Australopithecus appearing soon thereafter. However, not only was Australopithecus more ape-like than human-like, but as based on cranial comparisons, it appears that the brain of the chimpanzee is similar in size and volume (390 cc on average, range=282-500 cc, male/female mean of 410/380 cc) to that of Australopithecus afarensis, with a range=385-450 cc (Ashton & Spence, 1958; Tobias, 1971; see also Holloway 1988). These similarities have important implications in regard to the evolution of the frontal lobe.

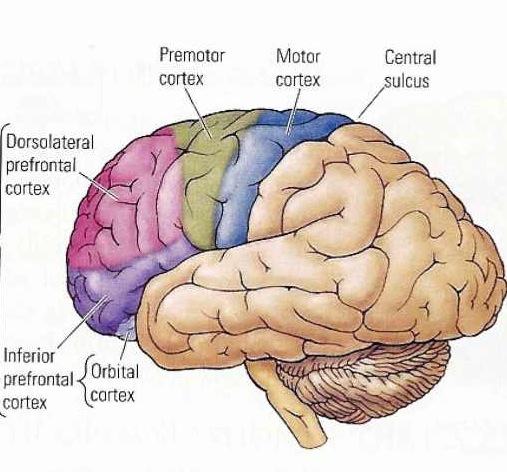

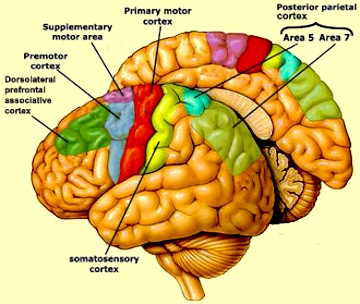

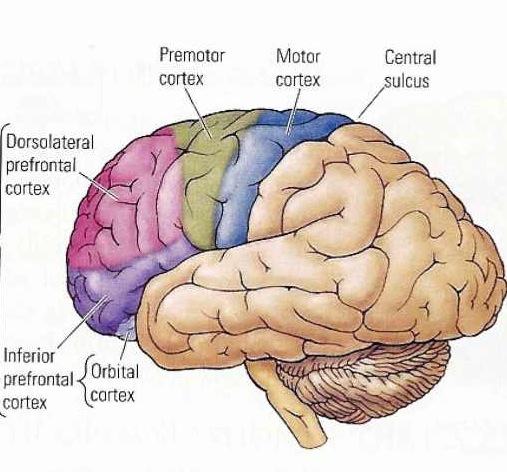

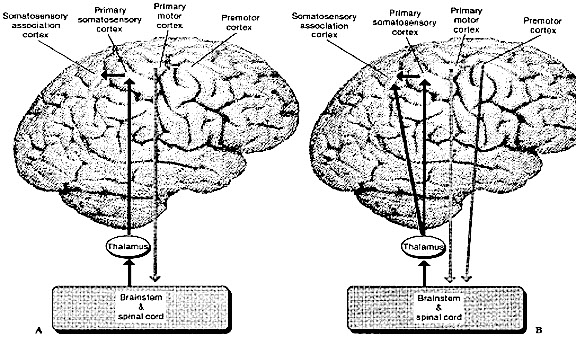

The human versus chimpanzee frontal lobe has been reported by Brodmann (1912) to comprise 36.3% versus 30.5% of the overall neocortical surface, whereas Tilney (1928) provides estimates of 47% versus 33% (see Figures 1 & 3). Blinkov and Glezer (1968) have estimated that the prefrontal and precentral regions comprise 32.8% of the human versus 22.1% of the chimpanzee cerebral hemispheres. As the chimpanzee frontal lobe comprises from 30.5% to 33% of the neocortical surface (whereas the human frontal lobe ranges from 36.3% to 47%), and as there is no evidence of a functional Broca's area in primates (see above), the same may be assumed of Australopithecus afarensis.

As noted, A. afarensis is the likely ancestor of A. africanus, who had become marginally more human-like--as reflected by slight physical and neurological advances. For example, Conroy (1998) and others (see Day 1986; Gibbons 1998; Tobias, 1971) have determined, as based on volumetric measurements, that the cranial capacity of Australopithecus africanus is slightly larger than that of the chimpanzee, ranging from 413 to 515 cc with a mean volume of 440 cc. Hence, it is apparent that the brain has become larger in the transition from A. afarensis to A. africanus and it can be assumed that some of this expansion took place within the frontal lobe.

Nevertheless, the frontal (and parietal and temporal) lobes of A. africanus (like those of A. afarensis) were probably more ape-like than human-like (Figures 1 & 2; see also Falk 1982, 2013a) and there is no evidence to suggest that they had "evolved" a functional Broca's area or an angular gyrus. In fact, it was not until the emergence of H. habilis that the frontal (and temporal and parietal) lobes began to enlarge and become more human-like (for related discussion see Holloway 1981, 2013a; Tobias 1971), though again, there is absolutely no evidence (other than that based on phrenology, see below) to suggest they had evolved a functional Broca's area.

ENDOCAST CAVEATS: BROCA'S AREA AND THE PHRENOLOGY OF H. HABILIS

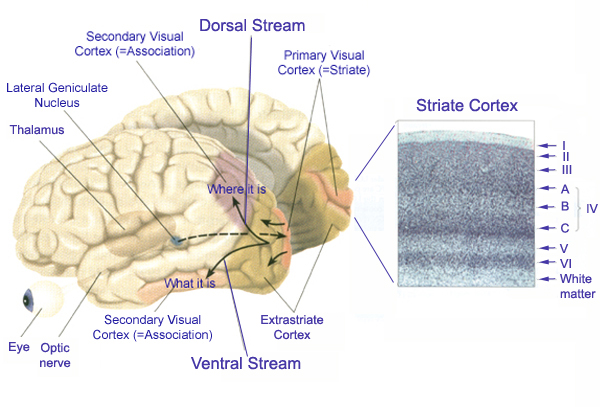

Hominoids lack an angular gyrus (Geschwind 1965) and the neocortical tissue homologous to Broca's area does not subserve speech or vocalization. Although damage to Broca's area in humans results in a profound expressive aphasia, similar destruction in non-human primates has no effect on vocalization rate, the acoustical structure of primate calls, or social emotional communication (Jurgens et al. 1982; Myers 1976).

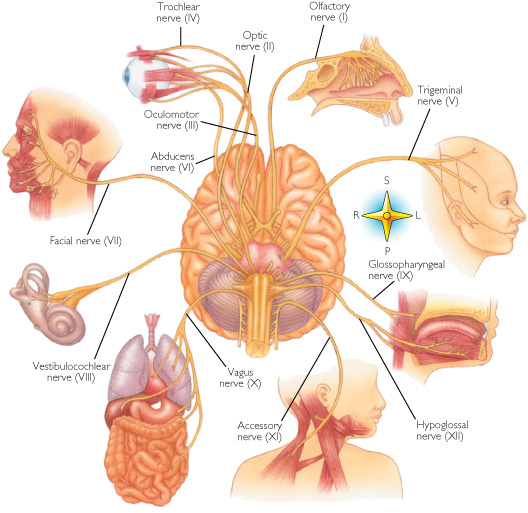

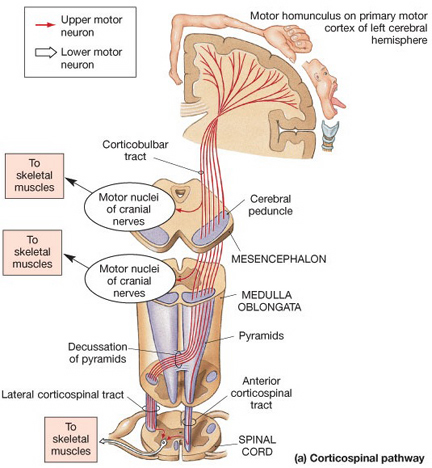

Vocalization in non-human primates is unaffected following lesions to the left frontal lobe, as sound production is mediated by limbic and subcortical nuclei such as the cingulate gyrus and amygdala (Jurgens 1979, 1987, 2010, 2012; MacLean 2010; Ploog 2012; Robinson 1967, 1972) which act on the brainstem vocalization centers including the periaqueductal gray (Jurgens 2004), which in turn controls the oral musculature via, for example, the hypoglossal nerves. In fact, the hypoglossal canal, which enables the hypoglossal nerve and thus the cerebrum to control the tongue, is so tiny in these species that, as also confirmed by behavioral indices, they simply do not have the neurological capability to control the oral musculature sufficiently so as to produce speech.

Thus, among non-human primates, there is no functional, and thus no anatomical equivalent to the human Broca's area and there is absolutely no evidence to indicate they can produce human speech. Likewise, as early hominids possessed a chimpanzee-like brain and/or a poorly developed frontal lobe, and a tiny hypoglossal canal, and as there is no functional evidence that the angular gyrus had evolved, it can be concluded that they too probably lacked a functional Broca's area as well as an angular gyrus and were completely incapable of producing human-like speech.

In fact, whereas the brain of Australopithecus was similar to that of a chimpanzee, the brain of H. habilis was only somewhat larger than that of a male ape. Indeed, the brain of H. habilis is about half the size of the modern human brain (see Conroy 1998), i.e., 640 cc compared to 1350 cc. Again, given the simplistic and unchanging nature of their stone-flake "Oldowan" tool-making tradition, it is also unlikely that these hominids evolved an angular gyrus or a functional Broca's area.
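
The "half the size" comparison can be checked directly against the two volumes cited from Conroy (1998). This is a simple ratio of the figures given in the text, not an independent measurement:

$$
\frac{640\ \text{cc}}{1350\ \text{cc}} \approx 0.47
$$

On these figures the H. habilis brain is slightly less than half the volume of the modern human brain.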

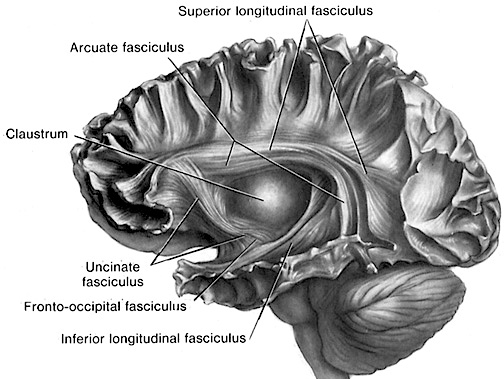

Indeed, H. habilis possessed an exceedingly primitive vocal tract that could not have subserved modern expressive speech (Lieberman 1984). Rather, like the angular gyrus (see below), a functional Broca's area must have evolved in parallel with handedness and advances in tool technology and artistry, and in conjunction with increases in the size and volume of the temporal-parietal neocortex and the frontal lobe. Broca's expressive speech area, like other frontal lobe capacities, was acquired much later in human evolution (Aboitiz & Garcia 1997; Joseph 1993, 2011e).

Some authors, however, have claimed to have discovered an impression of Broca's area on the inside of the H. habilis skull (Falk 2013b; Tobias 1987). These claims and counterclaims are not only exceedingly controversial (see Falk 2013a; Holloway 2013; Jerison 2010), but the phrenological methodology of determining functional landmarks based on an examination of the skull was rightly denounced as a pseudoscience over 100 years ago.

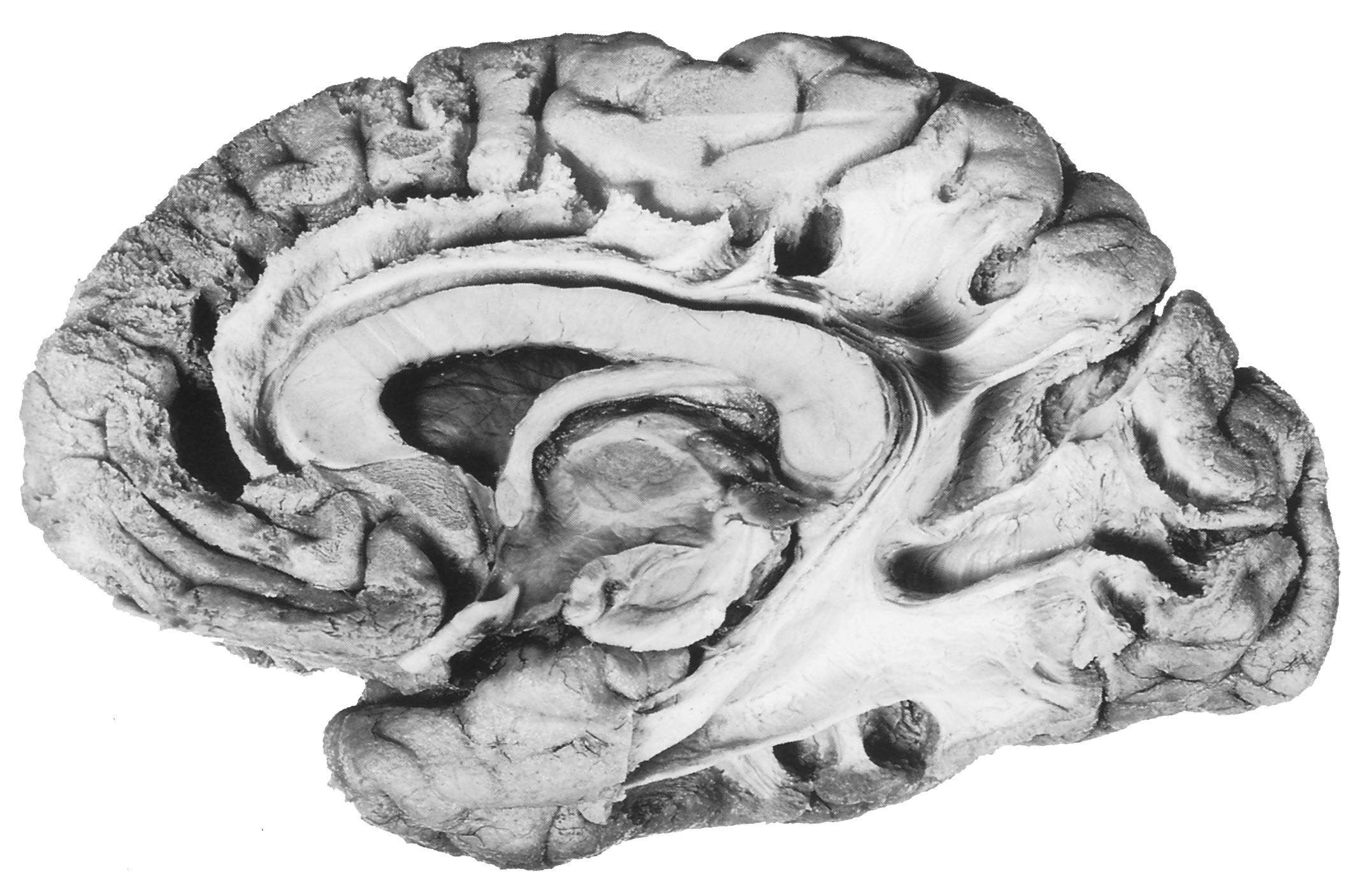

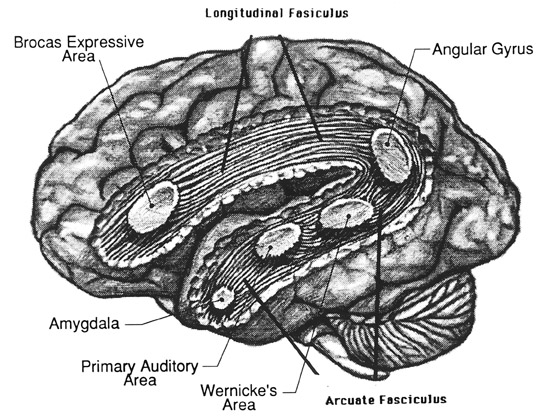

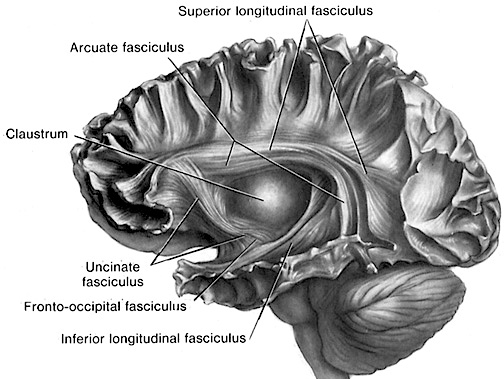

Claims to have discovered functional landmarks are in fact based on the bumps, grooves, and marks supposedly engraved on the hard inner surface of the skull by an exceedingly soft and gelatinous cerebral mass covered by a thick dural membrane. Although an endocast of the inner skull provides impressions regarding the location of major blood vessels and the gross size and position of the lobes of the brain (see Figures ), it is exceedingly difficult to discern or identify primary sulci and gyri, much less a "Wernicke's" or "Broca's" area (see Jerison 2010 for related discussion).

In fact, it's impossible as neurosurgeons can't perform this feat even when palpating a living brain, and must employ an electrode to identify functional landmarks such as Broca's area. In fact, although some people suffer strokes or other injuries which are precisely localized to "Broca's" or "Wernicke's" areas, they do not become aphasic, whereas those with lesions which spare the classic language areas may become profoundly aphasic (Dronkers 1993; Kertesz, Lesk & McCabe 1977). Expressive and receptive speech is sometimes represented in the right hemisphere (Joseph 1986b).

However, in the transition from H. habilis, to H. erectus, to archaic H. sapiens, to anatomically modern Upper Paleolithic H. sapiens sapiens (Blinkov and Glezer 1968; Joseph 1993; MacLean 2010; Tilney 1928; Weil 1929; Wolpoff 1980) the frontal lobes continued to expand in length and height, reaching their greatest degree of development with the evolution of the Cro-Magnon peoples. In fact, it is with the emergence of the Cro-Magnon that there is finally evidence that the angular gyrus and a functional Broca's area had evolved.

Broca's Area, Limbic Language, and the Upper Paleolithic Angular Gyrus

Given that the emergence of "modern" Upper Paleolithic humans is characterized by a cognitive "revolution" in tool, artistic, and hunting technology (Chauvet, Deschamps & Hillaire 1996; Gilman 1984; Leroi-Gourhan 1964), it appears that these cultural, technological, and cognitive achievements paralleled and were made possible, in part, via the emergence of the angular gyrus and the expansion of the frontal lobes--the evolution of which also resulted in the development of Broca's speech area and modern language (Joseph, 1993, 2011e).

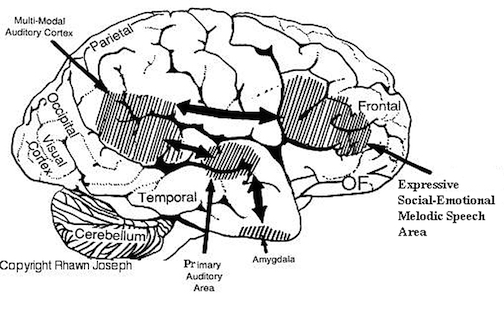

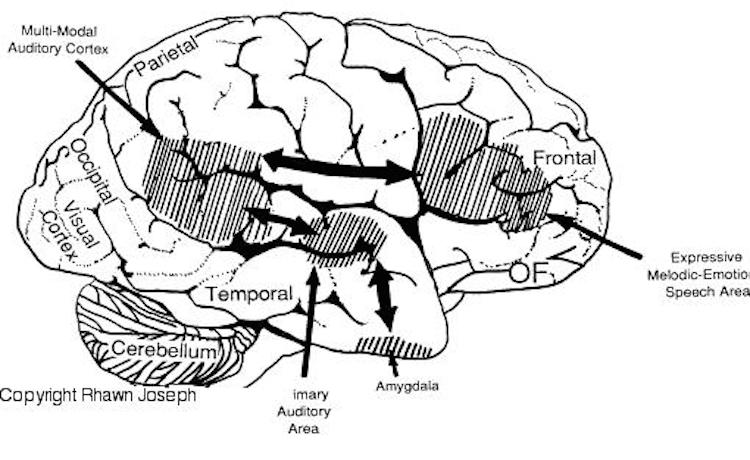

Specifically, as vocalization in non-humans is mediated by limbic and brainstem structures, it has been argued that what has been referred to as "limbic language" (Joseph 1982; Jurgens 2010) became hierarchically represented, punctuated, sequenced, and reorganized by these neocortical speech areas, thereby giving rise to modern, grammatical, vocabulary-rich speech (Joseph 1982, 1988a, 2012a, 1993, 2011e,d). That is, due to the interconnections maintained with the frontal speech areas and the anterior cingulate, and Wernicke's area and the amygdala, with the evolution of the angular gyrus and a functional Broca's area (and the pathways between them) auditory input and limbic output were hierarchically and neocortically subsumed and subject to temporal sequencing, thus giving rise to grammatical, vocabulary-rich language (see below).

Specifically, coinciding with or at least following these transformations in the posterior speech areas, and due to its reception of auditory-linguistic signals, over the course of human evolution Broca's area was transformed from a hand area (as is the case in hominoids) to a speech area and thus began programming the primary motor areas and thus the oral laryngeal musculature for expressive speech. Hence, via connections with the cingulate and amygdala, what had been limbic language was thus hierarchically subsumed by the neocortex, thus giving rise to modern human language and conferring a tremendous intellectual advantage to those so endowed, i.e. the Cro-Magnon (Joseph, 2011e).

As will be detailed below, the evolution of the frontal lobes and angular gyrus parallels and accounts for the cultural, social, technological, and linguistic adaptations which characterize the Middle to Upper Paleolithic transition, including the evolution of language. Moreover, the expansion and evolution of these structures likely contributed to the demise of Neanderthals, who were not as well endowed as their Upper Paleolithic, "Cro-Magnon" counterparts.

THE UPPER VERSUS THE MIDDLE PALEOLITHIC FRONTAL LOBE

The human frontal lobes serve as the "Senior Executive" of the brain and personality, and are "interlocked" via converging and reciprocal connections with the limbic system, striatum, thalamus, and the primary, secondary, and multi-modality associational areas including Wernicke's area and the IPL. Through these interactional pathways, the frontal lobes are able to coordinate and regulate attention, memory, personality, and information processing throughout the neocortex so as to direct intellectual and cognitive processes.

As based on human (and animal) experimental and case studies, it is well established that the frontal lobes enable humans to plan for the future and to consider the consequences of certain acts, to formulate secondary goals, and to keep one goal in mind even while engaging in other tasks, so that one may remember and act on those goals at a later time. Selective attention, planning skills, and the ability to marshal one's intellectual resources so as to not only remember but achieve those goals, and the capacity to anticipate the future rather than living in the past, are capacities clearly associated with the frontal lobes (Fuster 1997; Joseph 1986a, 2011a; Stuss & Benson 1986).

In addition, the right and left frontal lobes respectively subserve the expression of emotional-melodic, and vocabulary-rich grammatical speech. Specifically, upon receiving converging impulses from the IPL and the language and auditory areas in the temporal lobes, Broca's area (and its emotional speech producing counterpart in the right frontal lobe) act on the immediately adjacent secondary and primary motor areas which control, regulate and program the oral laryngeal musculature (Foerster 1936; Fox 1995; Joseph 1982, 1988a, 2011e,f). Therefore, the ability to express one's thoughts, ideas, and emotions through complex speech is made possible by the frontal lobes.

Although endocasts should not be employed to localize functional landmarks such as Broca's area, they are useful for making gross determinations as to the overall size and configuration of the cerebrum and the lobes of the brain. In this regard, and as based on cranial comparisons, or endocasts using the temporal and frontal poles as reference points, it has been demonstrated that the brain has tripled in size, and that the frontal lobes have significantly expanded in length and height over the course of human evolution and during the Middle to Upper Paleolithic transition (Blinkov and Glezer 1968; Joseph 1993; MacLean 2010; Tilney 1928; Weil 1929; Wolpoff 1980) such that Cro-Magnon people were obviously superiorly endowed as compared to Neanderthals.

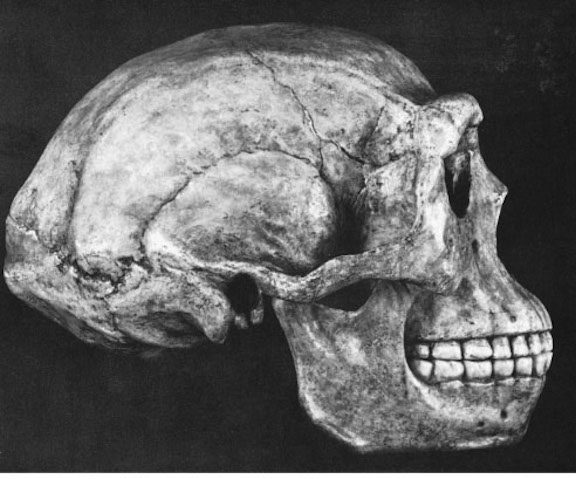

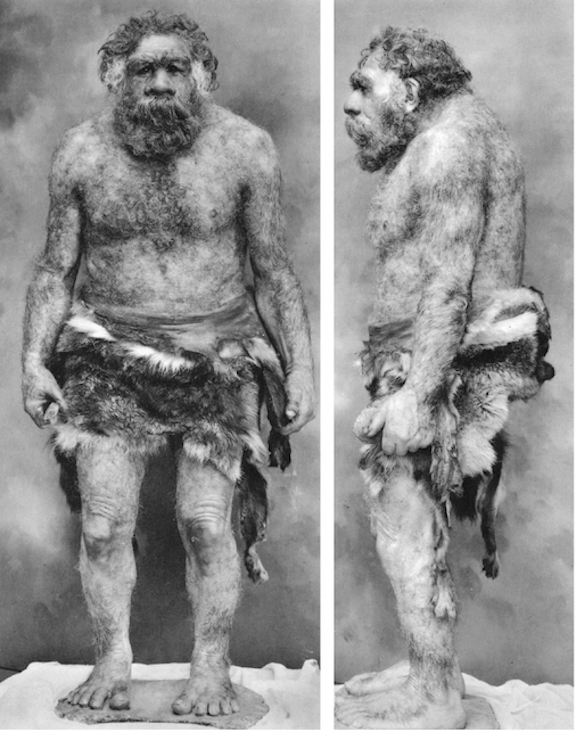

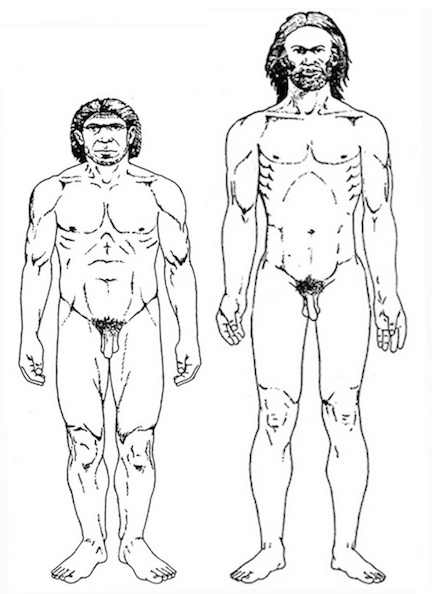

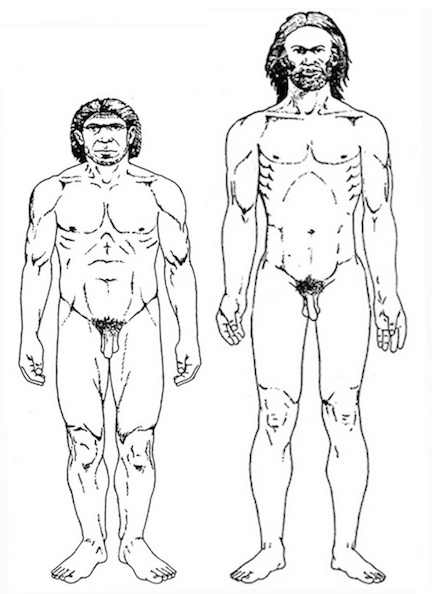

For example, it is apparent (see Figures 27, 28, 29) that the height of the frontal portion of the skull is greater in the six foot tall, anatomically modern Upper Paleolithic H. sapiens sapiens (Cro-Magnon) versus Neanderthal/archaic H. sapiens (see also Wolpoff 1980, Table 12.1; and Tilney, 1928). Hence, impoverished Neanderthal frontal lobe development and expanded Cro-Magnon frontal lobe capacity is indicated. Indeed, the characteristic "sloping forehead" was an obvious limiting factor in archaic and Neanderthal frontal lobe development. In fact, the Cro-Magnon brain was significantly larger than the Neanderthal brain, with volumes ranging from around 1,600 to 1,880 cc on average compared with 1,033 to 1,681 cc for Neanderthals.

The differential evolution of the Cro-Magnon vs the Neanderthal frontal lobe (and angular gyrus) is also apparent as based on paleo-neurological and neuropsychological analysis of tool and hunting technology, artistic and symbolic development, and social organization in the Upper vs the Middle Paleolithic (Joseph 1993). As will be detailed below, the angular gyrus probably emerged and Broca's area probably became fully functional during the Middle to Upper Paleolithic transition; evolutionary developments which likely contributed to the demise of the Neanderthals.

Since Cro-Magnons shared the planet with Neanderthals during overlapping time periods (and coupled with evidence reviewed below), it certainly seems reasonable to assume that the expansion and evolution of the frontal lobe and angular gyrus provided these people with an obvious competitive advantage, as they clearly dwarfed the Neanderthals in all aspects of cultural, intellectual, social, linguistic and technological achievement. Hence, endowed with a bigger brain and expanded frontal and IPL/angular gyral capacity, the cognitively, linguistically, technologically and intellectually superior "Cro-Magnons" and other "modern" Upper Paleolithians probably engaged in widespread ethnic cleansing and exterminated the rather short (5 ft 4 in.), sloped-headed, heavily muscled Neanderthals, eradicating all but hybrids from the face of the Earth some 35,000 to 28,000 years ago.

"REPLACEMENT" AND THE NEANDERTHAL FRONTAL LOBE

It is important to note that there continues to be considerable debate as to Neanderthal cognitive and linguistic capability as well as their ultimate fate. From a "replacement" standpoint, it has been argued that Neanderthals died out and/or were killed off and thus replaced (see Nitecki & Nitecki, 2004). Others have argued that, despite the considerable differences between these peoples and the Cro-Magnon in height, stature, cranial development, and so on, Neanderthals suddenly "evolved" into anatomically modern humans over the course of approximately 5,000 years.

However, when considered not just from a cultural but from a neurological perspective, the evidence supports a "replacement" scenario. Although there was likely some interbreeding, as intermediate forms have been discovered, the Neanderthals were probably subject to widespread genocide and were exterminated as a species; a consequence of an inability to successfully compete due to inferior cultural, cognitive, and frontal and parietal lobe evolutionary development (Joseph, 1993).

Unfortunately, as there are no Neanderthal brains in existence, this competitive disadvantage must be inferred or deduced neuropsychologically from the archaeological record and from an examination of cranial configuration. As noted, it is apparent that the height and length of the frontal portion of the skull is greater in moderns and "early moderns" than in Neanderthals. Similarly, a gross photographic examination of various Neanderthal vs "modern" endocasts (taken from Smith, 1982: 673; and from Tilney, 1928: 919, and Figures 389-400, and 417-422), and the data provided by Tilney (1928: 923), strongly suggest that the Cro-Magnon (superior and inferior-orbital) frontal lobe has in fact expanded as contrasted with that of the Neanderthal (Joseph, 1993; MacLean, 2010).

By contrast, whereas the Neanderthal frontal lobe is not as well developed, the occipital and superior parietal areas are larger in length and breadth (Wolpoff, 2011). However, these posterior regions of the brain are concerned with visual analysis and positioning the body in space (see chapters 20, 22). As male and female Neanderthals spent a considerable amount of their time engaged in hunting activities (see below), scanning the environment for prey and running and throwing in visual space were more or less ongoing concerns. A large occipital and superior parietal lobe would reflect these activities.

Nevertheless, this is not to suggest that the "modern" occipital and superior parietal lobes have shrunk. Rather, it appears that the visual cortex was displaced into the medial regions of the brain (which is typical of the modern human cerebrum; see chapter 22), whereas the superior parietal lobe became reorganized, as the environment acts on gene selection, thereby giving rise to the angular gyrus of the inferior parietal lobe.

Given the functional significance of the anterior (non-motor) frontal lobe (chapter 19), and its role in planning, foresight, and creative and intellectual activities, and the contribution of the IPL to tool making and language (see chapter 11 and below), the Neanderthals, with their inferior cognitive and cultural capabilities and poorly developed frontal and inferior parietal lobule, would have been no match for the superiorly endowed Cro-Magnon. Hence, they were likely "replaced" by another species of humanity which evolved in another region of the world, yet another example of multi-regional evolution. However, if considered from a "multi-regional" perspective, it could also be surmised that Neanderthals might have possessed the necessary genes that would have enabled them to someday "evolve" into modern humans; that is, if they had not been exterminated by the Cro-Magnon.

NEANDERTHAL BRAIN DEVELOPMENT

Brain size alone tells us little about function, whereas action and behavioral accomplishment speak volumes as to cerebral, cognitive, and intellectual functional integrity and capability. Moreover, it could certainly be argued that what appears to be impoverished Neanderthal frontal lobe development is in fact an illusion created by their massive brow ridge. But this does not appear to be the case.

Even if we forgo the temptation to make dubious claims based on the size and shape of various endocasts, and ignore the somewhat flattened, sloping Neanderthal forehead and the evolutionary record which indicates a comparative and considerable impoverishment in Middle Paleolithic cultural, tool making, and symbolic accomplishment, there is physical evidence which suggests Neanderthal brain development was characterized by significant limitations in plasticity and learning capability (see chapter 28 for related discussion).

For example, based on Neanderthal pubic morphology and speculations on gestation length, it appears that their infants were born at an advanced stage of maturity and development (Rosenberg, 1988; Wolpoff, 1980). This would not be unusual, as our closest living primate relative, the chimpanzee, is born with a brain that is almost 50% of the size of an adult's, whereas newborn human brain volume is less than 25% of an adult's (Passingham, 1982; Sacher & Staffeldt, 1974).

If Neanderthals were born at an advanced state of development, then like other rapidly maturing creatures, their brains would have been more "hard wired". Although this might have enabled them to respond to environmental emergencies without being hindered by prolonged helplessness, their ability to modify behavior patterns over the course of development would have been significantly hampered, at least as compared to modern humans.

Moreover, given that the frontal and inferior parietal lobes take well over seven to twenty years to functionally mature in present day humans, rapid brain development and maturity would preclude functional growth and elaboration in these regions. Consider, for example, that the more rapidly developing chimpanzee brain is completely lacking an angular gyrus (see Geschwind, 1966), whereas the majority of its frontal lobe is devoted to motor functions.

In fact, from an educational (enriched vs deprived) perspective alone, given that Neanderthals lived on average to age 40, compared with age 60 for Cro-Magnon, and given the amount of time males and females spent engaged in hunting (see below), the Neanderthal young would not have had aged relatives or grandparents to care for and train them, and their mothers would not have had the time to educate them. As detailed in chapter 28, these culturally deprived conditions would have also exerted pronounced deleterious influences on the developing brain.

However, even if the Neanderthal brain did not mature more rapidly than modern humans, and we ignore early death rates and time spent hunting, it is still clear that they were not as well endowed neurologically and thus cognitively and intellectually, and were thus destined to lose the human race.

EVOLUTION OF "MODERN" & NEANDERTHAL HOMO SAPIENS: THE FRONTAL LOBES

According to the interpretation of the data that will be presented here, variant populations of H. erectus evolved multi-regionally and occupied parts of Asia, the Middle East, Europe, and Africa. From some of these separate branches of humanity, archaic humans also evolved multi-regionally. However, as the environment acts on gene selection, differences in these varied environments in turn affected the pace and nature of evolutionary development, such that European archaics (i.e. Neanderthals) and those H. erectus living in Java failed to "evolve." In the case of the Neanderthals, this was due to the extreme arctic cold and unique environmental conditions that characterized Middle Paleolithic glacial Europe and the Middle East. Neanderthals became geographically, genetically, and socially isolated and appear to have evolved differently and more slowly than those archaic Homo sapiens who were living in Africa and Asia under different environmental and climatic conditions.

As has now been well established, the environment acts on gene selection, and environmental influences, particularly during infancy and childhood, can exert drastic and significant influences on neurochemistry, neural development, and functional and structural interconnections throughout the brain, including the neocortex and limbic system (see chapters 28, 29). Behavior, emotional expression, perception, intelligence, neuronal growth and size, dendritic arborization, and neurochemistry are correspondingly affected.

If generation after generation is exposed to similar adverse and intellectually limiting environmental influences, such as characterized the Neanderthal occupation of glacial Europe during the Middle Paleolithic, the impact would have been even more profound, affecting not just gene selection but cerebral functional development across generations. Given that the racial-cultural groups who "evolved" in Africa, Asia, etc., over the last two million years were exposed to different environmental experiences and challenges for tens of thousands of years, it might be expected that their brains and thus their cultural and cognitive capabilities would have also "evolved", or at least developed, somewhat differently.

FRONTAL CRANIAL EVOLUTION

These "racial" cerebral/cognitive evolutionary differences are evident by 100,000 years B.P. and include post-cranial anatomy, tool construction, hunting techniques, dental wear, the size and functional capacity of the frontal lobe, and the evolution of the IPL and angular gyrus. For example, in Africa "archaic" H. sapiens with their characteristic sloping frontal cranium have been discovered buried in caves and rock shelters on the Klasies River of South Africa, and at Laetoli in Tanzania, at sites dated around 120,000 B.P. (Butzer, 1982; Grun et al. 2010; Rightmire, 1984). Over the next 30,000 years, the frontal cranium increases in size and "early modern" Middle Paleolithic H. sapiens begin to appear in Africa and the Middle East. In Asia, however, these evolutionary events appear to have been accelerated and to have taken place 10,000 years earlier.

Nevertheless, by 90,000 B.P. these "early modern" peoples begin to appear in the Middle East, as Homo sapiens with much more advanced features, including individuals with a rounded frontal cranium, have been unearthed from Qafzeh in the Middle East (Schwarcz et al. 1988). As these peoples apparently evolved from archaic H. sapiens, it is reasonable to assume the frontal lobes increased in size, as based on an examination of the cultural record as well as the frontal cranium.

However, these "early modern" H. sapiens were not only more advanced than "archaics" but were also superior to the Neanderthals who were living in Europe as well as those occupying nearby Middle Eastern sites (Mellars, 1989; Stringer, 1988), albeit at a much later date, which again is evidence for multi-regional evolution. Indeed, classic Neanderthal skulls with their large brows, flattened foreheads and thus reduced frontal lobes have been found in the Middle East (i.e. Kebara cave), at sites dated as recently as 60,000 B.P. (Bar-Yosef, 1989). Therefore the 90,000 year old "early modern" Qafzeh skulls are more advanced and show a greater degree of frontal development than the 60,000 year old Neanderthal skulls located nearby. Moreover, a Neanderthal skull from Saint-Cesaire in Western France, dated from between 33,000 and 35,000 B.P., also displays the characteristically reduced frontal lobe cranial features.

However, beginning as early as 75,000 B.P. (as is evident in Australia), "early modern" Paleolithic H. sapiens apparently had evolved into "modern" H. sapiens: individuals who sported fully modern cranial and post-cranial features, who began emerging throughout Asia, Africa, and the Middle East, and who began invading Europe. For example, also unearthed in Western France are the 30,000 to 34,000 year old skeletal remains of a Cro-Magnon displaying the bulging frontal cranium that is characteristic of "modern" humans (see Mellars, 1989). This strongly suggests that Cro-Magnon and Neanderthals occupied adjacent (or even the same) territories at about the same time, or that a tremendous expansion suddenly occurred in frontal lobe capacity such that Neanderthals "evolved" into modern humans within 5,000 years.

THE FRONTAL LOBES

As noted, the frontal lobes serve as the "Senior Executive" of the brain and personality, and together with the motor areas make up almost half of the cerebrum (see chapter 19). In contrast, the "archaic" H. sapiens and Neanderthal frontal lobe makes up about a third, as a larger proportion of the brain appears to be occipital lobe and visual cortex.

Because the modern frontal lobe is so extensive and highly developed, and as different frontal regions have evolved at different time periods, are organized differently, and have different neuroanatomical connections, they are concerned with different functions (chapter 19; see also Fuster 1997; Joseph, 2011a). For example, about one third of the frontal lobe, i.e. the motor areas, is concerned with initiating, planning, and controlling the movement of the body and fine motor functioning. It is this part of the "archaic" and Neanderthal frontal lobe that appears to be most extensively developed.

The "orbital frontal lobes" act to inhibit and control motivational and emotional impulses arising from within the limbic system (chapter 19). Via orbital frontal interconnections with the limbic system it is possible for emotions to be represented as ideas, and for ideas to trigger emotions. An examination of the "archaic" H. sapiens and Neanderthal orbital area (i.e. endocasts) suggests a relative paucity of development.

The more recently evolved anterior (pre-) frontal lobe and the lateral frontal convexity are highly important in regulating the transfer of information to the neocortex, and are involved in perceptual filtering and in exerting steering influences on the neocortex so as to direct attention and intellectual processes. That is, the anterior half of the frontal lobes acts to mediate and coordinate information processing throughout the brain by continually sampling, monitoring, inhibiting and thus controlling and regulating perceptual, cognitive, and neocortical activity.

Moreover, social skills, planning skills, the formation of long range goals, the ability to marshal one's resources so as to achieve those goals, and the capacity to consider and anticipate the future rather than living in the past, as well as to develop alternative problem solving strategies and consider a range of ideas simultaneously, are capacities clearly associated with frontal lobe functional integrity.

Hence, an individual is able to not only anticipate the future and the consequences of certain acts, but can formulate and plan secondary goals which depend on the completion of one's initially planned actions. Indeed, the capacity to decide to do something later, to remember and do it later, and to dream and fantasize and to visualize the future as pure possibility are made possible via the frontal lobes (chapter 19).

Conversely, when the frontal lobes have been damaged, or when the "prefrontal" lobes have been disconnected from the rest of the brain (such as following pre-frontal lobotomy), status seeking, social concern, foresight, and emotional, motivational, intellectual, conceptual, initiative, problem solving and organization skills are negatively impacted. Frontal lobe damage or surgical disconnection of the pre-frontal lobe reduces one's ability to profit from experience, to anticipate consequences, or to learn from errors by modifying future behavior.