Rhawn Gabriel Joseph, Ph.D.

Brain Research Laboratory

BrainMind.com

COMPREHENSION AND EXPRESSION OF EMOTIONAL SPEECH

Although language is often discussed in terms of grammar and vocabulary, there is a third major aspect to linguistic expression and comprehension by which a speaker may convey and a listener discern intent, attitude, feeling, mood, context, and meaning. Language is both emotional and grammatically descriptive. A listener comprehends not only the content and grammar of what is said, but the emotion and melody of how it is said: what a speaker feels.

Feeling, be it anger, happiness, sadness, sarcasm, empathy, etc., often is communicated by varying the rate, amplitude, pitch, inflection, timbre, melody and stress contours of the voice. When devoid of intonational contours, language becomes monotone and bland and a listener experiences difficulty discerning attitude, context, intent, and feeling. Conditions such as these arise after damage to select areas of the right hemisphere or when the entire right half of the brain is anesthetized (e.g., during sodium amytal procedures).

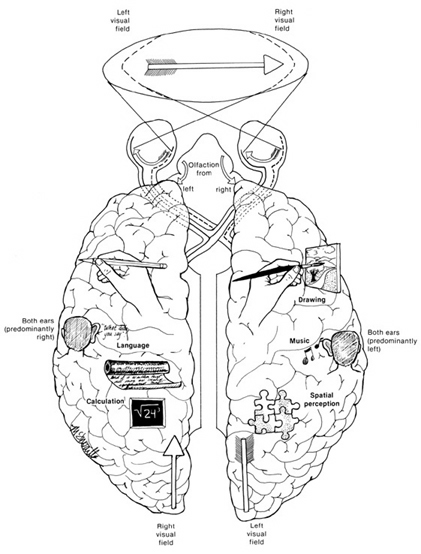

Hence, if I were to say, "Do you want to go outside?" both hemispheres are able to determine whether a question or a statement has been made (Heilman et al. 1984; Weintraub et al. 2011), but it is the right cerebrum that analyzes the paralinguistic emotional features of the voice to determine whether "going outside" will be fun or whether I am going to punch you in the nose. In fact, even without the aid of the actual words, based merely on melody and tone, the right cerebrum can determine context and the feelings of the speaker (Blumstein & Cooper, 2004; DeUrso et al. 1986; Dwyer & Rinn, 1981). This may well explain why even preverbal infants are able to make these same determinations, even when spoken to in a foreign language (Fernald, 2015; Haviland & Lelwica, 1987). The left hemisphere has great difficulty with such tasks.

For example, in experiments in which verbal information was filtered and the individual was to determine the context in which a person was speaking (e.g. talking about the death of a friend, speaking to a lost child), the right hemisphere was found to be dominant (Dwyer & Rinn, 1987). It is for these and other reasons that the right half of the brain sometimes is thought to be the more intuitive half of the cerebrum.

Correspondingly, when the right hemisphere is damaged, the ability to process, recall, or even recognize these nonverbal nuances is greatly attenuated. For example, although able to comprehend individual sentences and paragraphs, such patients have difficulty understanding context and emotional connotation, drawing inferences, relating what is heard to its proper context, determining the overall gist or theme, and recognizing discrepancies. As a result, they are likely to miss the point, respond to inappropriate details, and fail to fully appreciate when they are being presented with information that is sarcastic, incongruent, or even implausible (Beeman 2018; Brownell et al. 1986; Foldi et al. 2013; Gardner et al. 1983; Kaplan et al. 1990; Rehak et al. 1992; Wapner et al. 2011).

Such patients frequently tend to be very concrete and literal. For example, when presented with the statement "He had a heavy heart" and asked to choose among several pictured interpretations, right-brain-damaged (vs. aphasic) patients are more likely to choose a picture of an individual staggering under a large heart than a picture of a crying person. They also have difficulty describing morals, motives, emotions, or overall main points (i.e., they lose the gestalt), although the ability to recall isolated facts and details is preserved (Delis et al. 1986; Hough 1990; Wapner et al. 2011); details are the province of the left hemisphere.

Although they are not aphasic, individuals with right hemisphere damage sometimes have difficulty comprehending complex spoken and written statements, particularly those involving spatial transformations or incongruencies. For example, when presented with the question "Bob is taller than George. Who is shorter?", those with right-brain damage have difficulty, presumably due to a deficit in nonlinguistic imaginal processing or an inability to search a spatial representation of what they hear (Caramazza et al. 1976).

In contrast, when presented with "Bob is taller than George. Who is taller?", patients with right-hemisphere damage perform similarly to normals (Caramazza et al. 1976). Because this question requires no spatial transformation, the intact left cerebrum is presumably able to provide the solution despite the right hemisphere injury. Moreover, because the question "Who is shorter?" does not follow directly from the form of the premise (i.e., it is incongruent), whereas "Who is taller?" does, these differential findings further suggest that the right hemisphere is more involved than the left in the analysis of incongruencies.

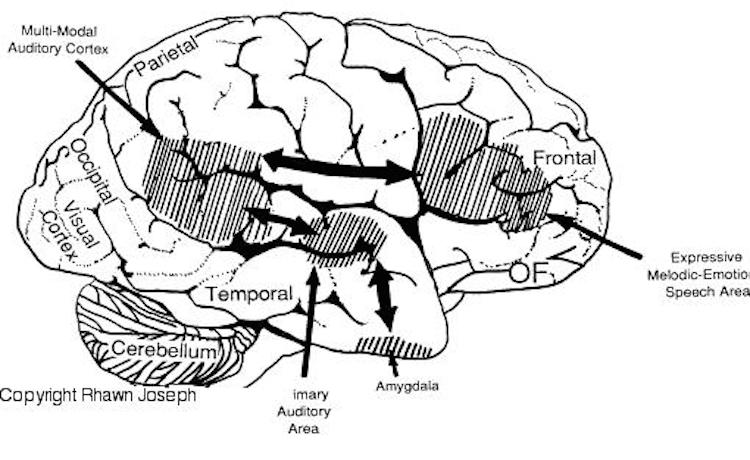

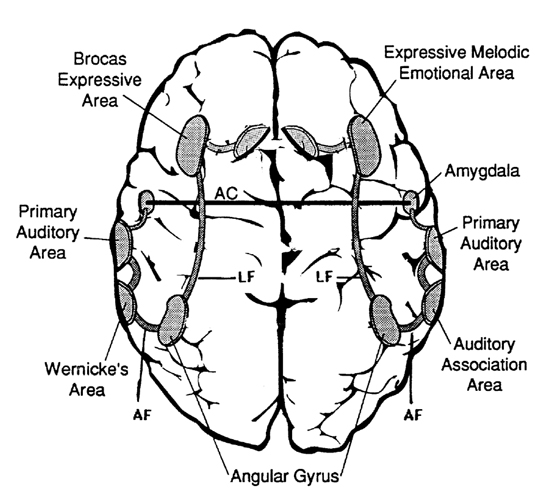

RIGHT HEMISPHERE EMOTIONAL-MELODIC LANGUAGE AXIS

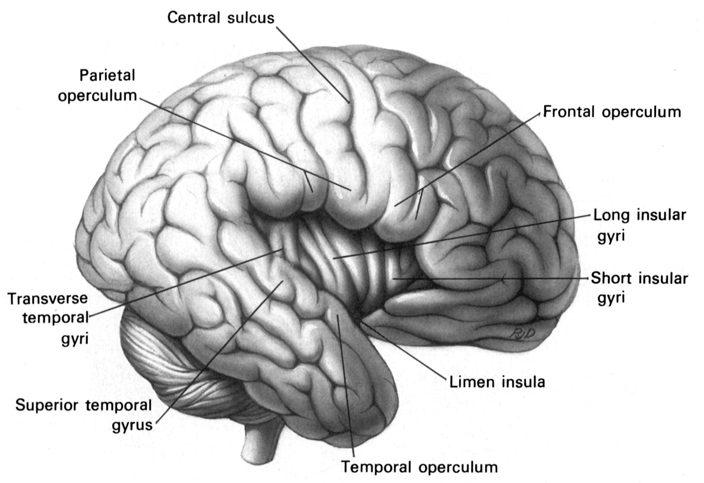

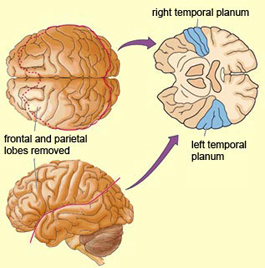

Just as there are areas in the left frontal and temporal-parietal lobes which mediate the expression and comprehension of the denotative, temporal-sequential, grammatical-syntactical aspects of language, there are similar regions within the right hemisphere that mediate emotional speech and comprehension (Gorelick & Ross, 1987; Heilman et al. 1975; Joseph, 1982, 1988a, 2015; Lalande et al. 1992; Ross, 2011; Shapiro & Danly, 1985; Tucker et al., 2014); these regions become highly active when presented with complex nonverbal auditory stimuli (Roland et al. 2011) and when engaged in interpreting the figurative aspects of language (Bottini et al., 2014).

Evoked potential studies indicate, however, that the pattern of neurological activity during the performance of language tasks does not begin to resemble the adult pattern until the onset of puberty (Holcomb et al., 1992).

Moreover, even as the left hemisphere gradually acquires language, the right hemisphere continues to participate in non-emotional language processing, including reading, as demonstrated by functional imaging studies (Bottini et al., 2014; Cuenod et al., 1995; Price et al., 1996).

For example, the right temporal and parietal areas are activated when reading (Bottini et al., 2014; Price et al., 1996), and the right temporal lobe becomes highly active when engaged in interpreting the figurative aspects of language (Bottini et al., 2014). Moreover, bilateral frontal activation is seen when speaking, though this activity is greater on the left (Passingham, 2009; Petersen et al., 1988). In part, however, these latter findings may well reflect those aspects of right hemisphere language processing (temporal-parietal) and expression (frontal-parietal) which are concerned with extracting and vocalizing emotional, motivational, personal, and contextual details.

With lesions that involve the right temporal-parietal area, the ability to comprehend or produce appropriate verbal prosody and emotional speech, or to repeat emotional statements, is significantly reduced (Gorelick & Ross, 1987; Heilman et al. 1975; Lalande et al. 2012; Ross, 2011; Starkstein et al. 2014; Tucker et al. 2014). Indeed, when patients are presented with neutral sentences spoken in an emotional manner, right hemisphere damage disrupts perception and discrimination (Heilman et al. 1975; Lalande et al. 1992) and the comprehension of emotional prosody (Heilman et al. 1984; Starkstein et al. 2014), regardless of whether it is positive or negative in content. Moreover, the ability to differentiate between different and even opposing emotional qualities (e.g., "sarcasm" vs. "irony" or "love" vs. "hate") can become distorted (Cicone et al. 1980; Kaplan et al. 1990), and the capacity to appreciate and comprehend humor or mirth may be attenuated (Gardner et al. 1975).

The semantic-contextual ability of the right hemisphere is not limited to prosodic and paralinguistic features, however, but includes the ability to process and recognize familiar, concrete, highly imageable words (J. Day, 2014; Deloch et al. 1987; Ellis & Shephard, 1975; Hines, 1976; Joseph 1988b; Landis et al., 1982; Mannhaupt, 1983), as well as emotional language in general.

The disconnected right hemisphere also can read printed words (Gazzaniga, 1970; Joseph, 1986b, 1988b; Levy, 1983; Sperry, 1982; Zaidel, 1983), retrieve objects with the left hand in response to direct and indirect verbal commands, e.g. "a container for liquids" (Joseph, 1988b; Sperry, 1982), and spell simple three- and four-letter words with cut-out letters (Sperry, 1982). However, it cannot comprehend complex, non-emotional, written or spoken language.

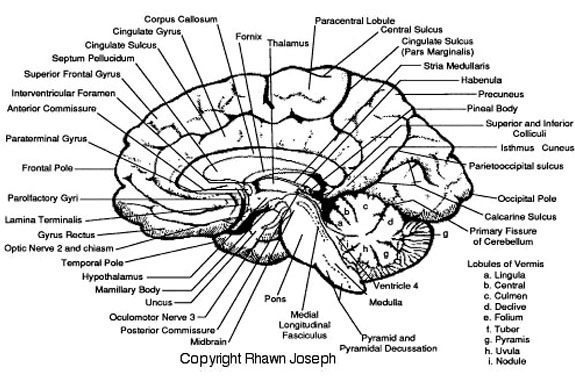

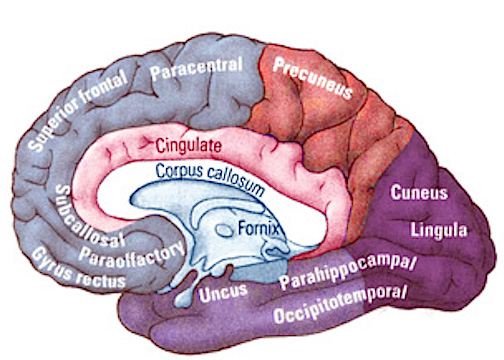

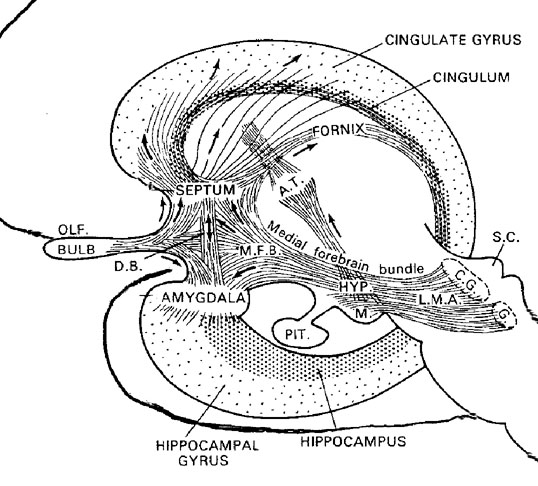

As noted, right hemisphere dominance for vocal (and non-verbal) emotional expression and comprehension is believed to be secondary to hierarchical neocortical representation of limbic system functions. It may well be dominance by default, however. That is, at one time both hemispheres may well have contributed more or less equally to emotional expression, but with the evolution of language and right-handedness, the left hemisphere gradually lost this capacity, whereas it was retained in the right cerebrum (chapter 6). Even so, without the participation of the limbic system, the amygdala and cingulate gyrus in particular, emotional language capabilities would for the most part be nonexistent.

Limbic Language: The Amygdala & Cingulate.

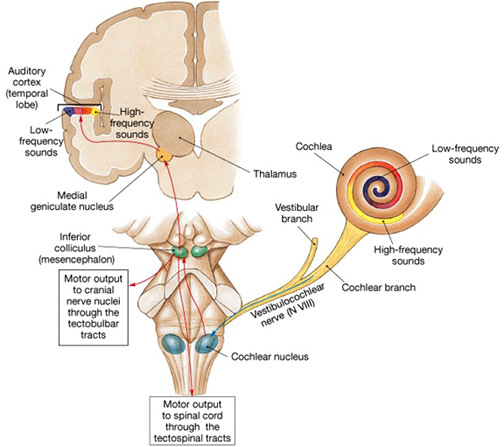

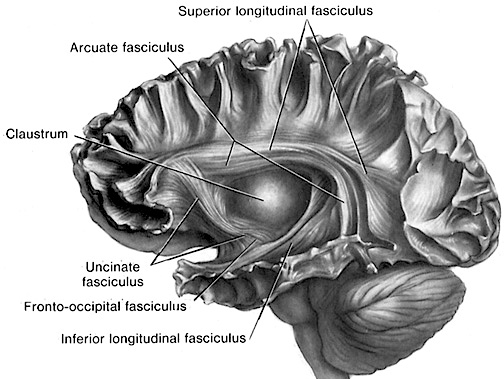

The amygdala appears to contribute to the perception and comprehension of emotional vocalizations, which it extracts and imparts to the neocortical language centers via the arcuate fasciculus, the axonal pathway that links the frontal convexity, the inferior parietal lobule, Wernicke's area, the primary auditory area, and the lateral amygdala (Joseph, 2015). That is, perceived sounds are shunted back and forth between the primary and secondary auditory receiving areas and the amygdala, which samples and analyzes them for motivational significance (see chapter 13). Indeed, the amygdala becomes activated when listening to emotional words and sentences (Halgren, 1992; Heith et al., 1989), and if it is damaged, the ability to vocalize emotional nuances can be disrupted (see chapter 13).

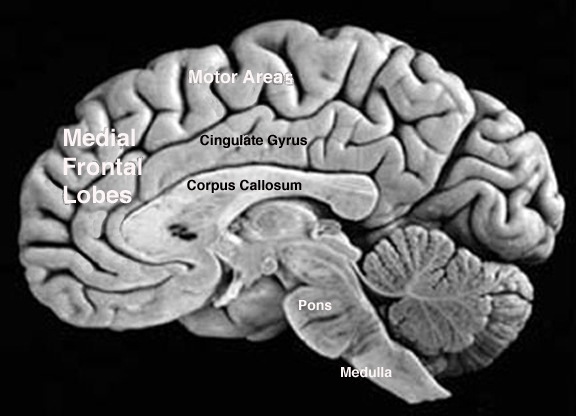

In addition, the anterior cingulate becomes activated when speaking (Dolan et al., 2009; Passingham, 2009), processes and expresses emotional vocalizations (Jurgens, 1990; MacLean, 1990), and contributes to emotional sound production via axonal interconnections with the right and left frontal convexity (Broca's area). Indeed, functional imaging studies have repeatedly demonstrated that the anterior cingulate, the right cingulate in particular, becomes highly active during vocalization (Frith & Dolan, 2009; Passingham, 2009; Paulesu et al., 2009; Petersen et al., 1988).

As noted in chapters 5, 13, and 15, over the course of evolution the anterior cingulate appears to have given rise to large portions of the medial frontal lobe and the supplementary motor areas, which in turn continued to evolve, thus forming the lateral convexity, including Broca's area. Via these interconnections, emotional nuances may be imparted directly into the stream of vocal utterances.

Conversely, subcortical right cerebral lesions involving the anterior cingulate, the amygdala, or these fiber interconnections can also result in emotional expressive and receptive disorders (chapter 15). Similarly, lesions to the left temporal or frontal lobe may result in disconnection, which in turn may lead to distortions in the vocal expression or perception of emotional nuances within the left hemisphere.

MUSIC AND NON-VERBAL ENVIRONMENTAL AND ANIMAL SOUNDS

Individuals with extensive left-hemisphere damage and/or severe forms of expressive aphasia, although unable to discourse fluently, may be capable of swearing, singing, praying, or making statements of self-pity (Gardner, 1975; Goldstein, 1942; Smith, 1966; Smith & Burklund, 1966; Yamadori et al., 2014). Even when the entire left hemisphere has been removed, the ability to sing familiar songs or even learn new ones may be preserved (Smith, 1966; Smith & Burklund, 1966), although in the absence of music the patient would be unable to say the very words that he or she had just sung (Goldstein, 1942). The preserved ability to sing has, in fact, been used to promote linguistic recovery in aphasic patients, as in melodic intonation therapy (Albert et al. 2003; Helm-Estabrooks, 1983).

Similarly, there have been reports that some musicians and composers suffering from aphasia and/or significant left hemisphere impairment were able to continue their work (Alajouanine, 1948; Critchley, 1953; Luria, 2003). In some cases, despite severe receptive aphasia and/or loss of the ability to read written language (alexia), the ability to read music or to continue composing was preserved (Gates & Bradshaw, 2014; Luria, 1973).

One famous example is that of Maurice Ravel, who suffered an injury to the left half of his brain in an auto accident. This resulted in ideomotor apraxia, dysgraphia, and moderate disturbances in comprehending speech (i.e., Wernicke's aphasia). Nevertheless, he had no difficulty recognizing various musical compositions, could detect even minor errors when compositions were played, and could correct those errors by playing the passages correctly on the piano (Alajouanine, 1948).

Conversely, it has been reported that musicians suffering from right hemisphere damage (e.g., right temporal-parietal stroke) have major difficulties recognizing familiar melodies and suffer from expressive instrumental amusia (Luria, 1973; McFarland & Fortin, 1982). Even among nonmusicians, right hemisphere damage (e.g., right temporal lobectomy) disrupts time sense, rhythm, and the ability to perceive, recognize, or recall tones, loudness, timbre, and melody (Chase, 1967; Gates & Bradshaw, 2014; Milner, 1962; Samson & Zatorre, 1988; Yamadori et al., 2014). In fact, right temporal injuries can disrupt the ability to remember musical tunes or to create musical imagery (Zatorre & Halpern, 2015).

Right hemisphere damage also can disrupt the ability to sing or carry a tune, can cause toneless, monotonous speech, and can abolish the capacity to obtain pleasure while listening to music (Reese, 1948; Ross, 2011; Shapiro & Danly, 1985), a condition also referred to as amusia. For example, Freeman and Williams (1953) report that removal of the right amygdala in one patient resulted in a great change in the pitch and timbre of speech, and that the ability to sing was also severely affected. Similarly, when the right hemisphere is anesthetized, the melodic aspects of speech and singing become significantly impaired (Gordon & Bogen, 2004).

It also has been demonstrated consistently in normals (such as in dichotic listening studies) and in brain-injured individuals that the right hemisphere predominates in the perception (and/or expression) of timbre, chords, tone, pitch, loudness, melody, meter, tempo, and intensity (Breitling et al. 1987; Curry, 1967; R. Day et al. 1971; Gates & Bradshaw, 2014; Gordon, 1970; Gordon & Bogen, 2004; Kester et al. 1991; Kimura, 1964; Knox & Kimura, 1970; McFarland & Fortin, 1982; Milner, 1962; Molfese et al. 1975; Piazza, 1980; Reese, 1948; Segalowitz & Plantery, 1985; Spellacy, 1970; Swisher et al. 1969; Tsunoda, 1975; Zurif, 2004). These are the major components (in conjunction with harmony) of a musical stimulus.

For example, a functional imaging study found that when professional pianists played Bach (the third movement of the Italian Concerto), activity increased in the right but not the left temporal lobe, whereas when they played scales, activity increased in the left but not the right temporal lobe (Parsons & Fox, 2009). Likewise, Evers and colleagues (1999), measuring cerebral blood flow velocity, found a right hemisphere increase in blood flow when listening to harmony (but not rhythm) among non-musicians in general, and especially among females.

In addition, Penfield and Perot (1963) report that musical hallucinations most frequently result from electrical stimulation of the superior and lateral surface of the right temporal lobe. Berrios (1990) also concluded from a review of lesion studies that musical hallucinations were far more likely following right cerebral dysfunction, whereas, conversely, destruction of this tissue disrupts the ability to conjure up musical imagery (Zatorre & Halpern, 2015). Findings such as these have added greatly to the conviction that the right cerebral hemisphere is dominant for the non-temporal-sequential aspects of musical perception and expression.

However, this does not appear to be the case for professional musicians, who in some respects tend to treat music as a mathematical language subject to rhythmic analysis. As noted, when professional pianists played scales, activity increased in the left but not the right temporal lobe (Parsons & Fox, 2009). Moreover, Evers and colleagues (1999) found that musicians displayed an increase in left hemisphere blood flow when listening to both harmony and rhythm.

ENVIRONMENTAL AND HUMAN/ANIMAL SOUNDS

In addition to music, the right hemisphere has been shown to be superior to the left in discerning and recognizing nonverbal and environmental sounds (Curry, 1967; Joseph, 1988b; Kimura, 1963; King & Kimura, 1972; Knox & Kimura, 1970; Nielsen, 1946; Piazza, 1980; Roland et al. 2011; Schnider et al. 2014; Spreen et al. 1965; Tsunoda, 1975). Similarly, damage that involves select areas within the right hemisphere not only disturbs the capacity to discern musical and social-emotional vocal nuances, but may disrupt the ability to perceive, recognize, or discriminate between a diverse number of sounds that occur naturally within the environment (Fujii et al., 1990; Joseph, 2015; Nielsen, 1946; Schnider et al. 2014; Spreen et al. 1965), such as water splashing, a door banging, applause, or even a typewriter; this condition also plagues the disconnected left hemisphere (Joseph, 1988b).

A 47-year-old woman I examined, who was subsequently found to have a calcium cyst growing from the skull into the right superior temporal lobe, was able to name pictures of animals, tools, and household objects. However, she was almost completely unable to recognize and correctly name briefly presented animal and human sounds (e.g., a baby crying, a crowd cheering, a lion roaring). She was better able, though still impaired, to recognize non-living sounds such as a creaking door or a hammer hammering. Coupled with other findings to be reviewed below, this raises the possibility that the right temporal lobe is better able to recognize living and true environmental sounds, whereas the left may be better able to recognize and name non-living sounds.

The possibility has been raised that music, verbal emotion, and nonverbal environmental-living sounds are, in some manner, phylogenetically linked (Joseph, 1982, 1988a, 2015). For example, it is possible that right hemisphere dominance for music is a limbic outgrowth and/or strongly related to the right hemisphere's capacity to discern and recognize environmental acoustics, as well as its ability to mimic these and other nonverbal and emotional nuances. That is, music may have been invented as a form of mimicry and/or as a natural modification of what has been described as "limbic language" (a term coined by Joseph, 1982).

For example, it is somewhat probable that primitive man and woman's first exposure to the sounds of music was environmentally embedded, for musical sounds are heard frequently throughout nature (e.g., birds singing, the whistling of the wind, the humming of bees or insects). Bird songs, for instance, can encompass sounds that are "flute-like, truly chime- or bell-like, violin- or guitar-like" and "some are almost as tender as a boy soprano" (Hartshorne, 2003, p. 36).

Hence, perhaps our musical nature is related to our original relationship with nature and resulted from the human tendency to mimic sounds that arise from the environment, such as those that conveyed certain feeling states and emotions. Perhaps this is also why certain acoustical nuances, such as those employed in classical music, can affect us emotionally and make us visualize scenes from nature (e.g., an early spring morning, a raging storm, bees in flight).

MUSIC AND EMOTION

Music is strongly related to emotion and is not only "pleasing to the ear" but also invested with emotional significance. For example, when played in a major key, music sounds happy or joyful; when played in a minor key, it often is perceived as sad or melancholic. We are all familiar with the "blues" and perhaps at one time or another have felt like "singing for joy," or have told someone, "You make my heart sing!"

Interestingly, it has been reported that music can accelerate pulse rate (Reese, 1948), raise or lower blood pressure, and alter the rhythm of the heartbeat. Rhythm, of course, is a major component of music.

Music and vocal emotional nuances also share certain features, such as melody and intonation, all of which are predominantly processed and mediated by the right cerebrum. Thus, the right hemisphere has been found to be superior to the left in identifying the emotional tone of musical passages and, in fact, judges music to be more emotional than does the left cerebrum (Bryden et al. 1982).

Left Hemisphere Musical Contributions.

There is some evidence that certain aspects of pitch, time sense, and rhythm are mediated to a similar degree by both cerebral hemispheres (Milner, 1962), with rhythm associated with increased left cerebral activity (Evers et al., 1999). Time sense and rhythm are, of course, also highly important in speech perception. These findings support the possibility of a left hemisphere contribution to music. In fact, some authors have argued that receptive amusia is due to left hemisphere damage and that expressive amusia is due to right hemisphere dysfunction (Wertheim, 1969).

It also has been reported that some musicians tend to show left hemisphere dominance in the perception of certain aspects of music (Gates & Bradshaw, 2014), particularly rhythm (Evers et al., 1999). Indeed, when the sequential and rhythmical aspects of music are emphasized, the left hemisphere becomes increasingly involved (Breitling et al. 1987; Halperin et al. 2003). It seems that when music is treated as a language to be acquired, or when its mathematical and temporal-sequential features are emphasized (Breitling et al., 1987), the left cerebral hemisphere becomes heavily involved in its production and perception, especially in professional musicians (Evers et al., 1999).

However, even in non-musicians the left hemisphere typically displays, if not dominance, then at least equal capability in the production of rhythm. Thus, just as the right hemisphere makes important contributions to the perception and expression of language, it takes both halves of the brain to make music.