Rhawn Gabriel Joseph, Ph.D.

BrainMind.com

COMPREHENSION AND EXPRESSION OF EMOTIONAL SPEECH

Although language is often discussed in terms of grammar and vocabulary, there is a third major aspect to linguistic expression and comprehension by which a speaker may convey, and a listener discern, intent, attitude, feeling, mood, context, and meaning. Language is both emotional and grammatically descriptive. A listener comprehends not only the content and grammar of what is said, but also the emotion and melody of how it is said: what a speaker feels.

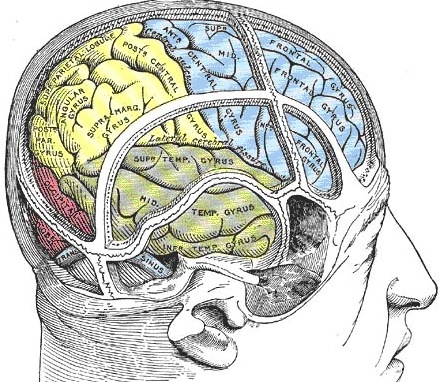

Feeling, be it anger, happiness, sadness, sarcasm, empathy, etc., often is communicated by varying the rate, amplitude, pitch, inflection, timbre, melody and stress contours of the voice. When devoid of intonational contours, language becomes monotone and bland, and a listener has difficulty discerning attitude, context, intent, and feeling. Conditions such as these arise after damage to select areas of the right hemisphere or when the entire right half of the brain is anesthetized (e.g., during sodium amytal procedures).

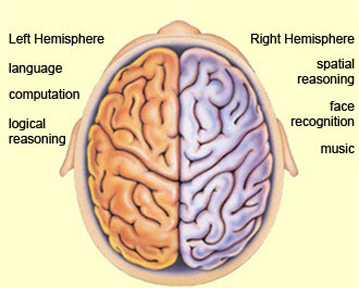

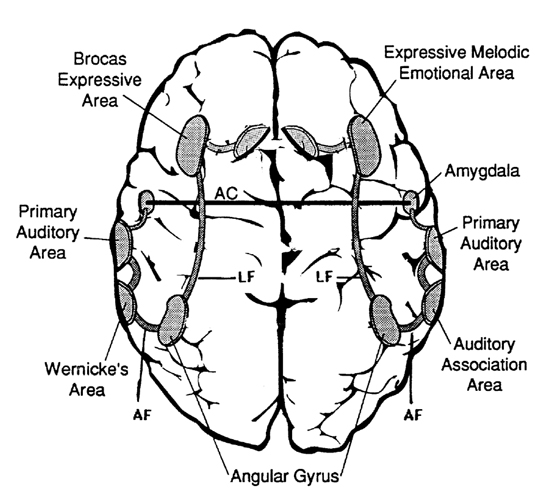

It is now well established (based on studies of normal and brain-damaged subjects) that the right hemisphere is superior to the left in distinguishing, interpreting, and processing vocal inflectional nuances, including intensity, stress and melodic pitch contours, timbre, cadence, emotional tone, frequency, amplitude, melody, duration, and intonation (Blumstein & Cooper, 1974; Bowers et al. 1987; Carmon & Nachshon, 1973; Heilman et al. 2005; Ley & Bryden, 1979; Mahoney & Sainsbury, 1987; Ross, 2011; Safer & Leventhal, 2017; Samson & Zatorre, 2018, 1992; Shapiro & Danly, 1985; Tucker et al. 2017). The right hemisphere, therefore, is fully capable of determining and deducing not only what a person feels about what he or she is saying, but why and in what context he or she is saying it, even in the absence of vocabulary and other denotative linguistic features (Blumstein & Cooper, 1974; DeUrso et al. 1986; Dwyer & Rinn, 2011). This occurs through the analysis of tone and melody.

Hence, if I were to say, "Do you want to go outside?" although both hemispheres are able to determine whether a question or a statement has been made (Heilman et al. 2014; Weintraub et al. 2011), it is the right cerebrum that analyzes the paralinguistic emotional features of the voice to determine whether "going outside" will be fun or whether I am going to punch you in the nose. In fact, even without the aid of the actual words, based merely on melody and tone, the right cerebrum can determine context and the feelings of the speaker (Blumstein & Cooper, 1974; DeUrso et al. 1986; Dwyer & Rinn, 2011). This may well explain why even preverbal infants are able to make these same determinations, even when spoken to in a foreign language (Fernald, 1993; Haviland & Lelwica, 1987). The left hemisphere has great difficulty with such tasks.

For example, in experiments in which verbal information was filtered and the individual was to determine the context in which a person was speaking (e.g. talking about the death of a friend, speaking to a lost child), the right hemisphere was found to be dominant (Dwyer & Rinn, 2011). It is for these and other reasons that the right half of the brain sometimes is thought to be the more intuitive half of the cerebrum.

Correspondingly, when the right hemisphere is damaged, the ability to process, recall, or even recognize these nonverbal nuances is greatly attenuated. For example, although able to comprehend individual sentences and paragraphs, such patients have difficulty understanding context and emotional connotation, drawing inferences, relating what is heard to its proper context, determining the overall gist or theme, and recognizing discrepancies, such that they are likely to miss the point, respond to inappropriate details, and fail to appreciate fully when they are being presented with information that is sarcastic, incongruent or even implausible (Beeman 1993; Brownell et al. 1986; Foldi et al. 2013; Gardner et al. 2013; Kaplan et al. 2017; Rehak et al. 1992; Wapner et al. 2011).

Such patients frequently tend to be very concrete and literal. For example, when presented with the statement "He had a heavy heart" and asked to choose among several pictured interpretations, right-brain-damaged (vs. aphasic) patients are more likely to choose a picture of an individual staggering under a large heart than one of a crying person. They also have difficulty describing morals, motives, emotions, or overall main points (i.e., they lose the gestalt), although the ability to recall isolated facts and details is preserved (Delis et al. 1986; Hough 2017; Wapner et al. 2011), details being the province of the left hemisphere.

Although they are not aphasic, individuals with right hemisphere damage sometimes have difficulty comprehending complex verbal and written statements, particularly when these involve spatial transformations or incongruencies. For example, when presented with the question "Bob is taller than George. Who is shorter?", those with right-brain damage have difficulty, presumably because of a deficit in nonlinguistic imaginal processing or an inability to search a spatial representation of what they hear (Caramazza et al. 1976).

In contrast, when presented with "Bob is taller than George. Who is taller?", patients with right-hemisphere damage perform similarly to normal subjects (Caramazza et al. 1976). Because the right hemisphere is injured and this question requires no spatial transformation, this finding indicates that the left cerebrum is responsible for providing the solution. Moreover, because the question "Who is shorter?" does not follow directly from the first statement (i.e., it is incongruent), whereas "Who is taller?" does, these differential findings further suggest that the right hemisphere is more involved than the left in the analysis of incongruencies.

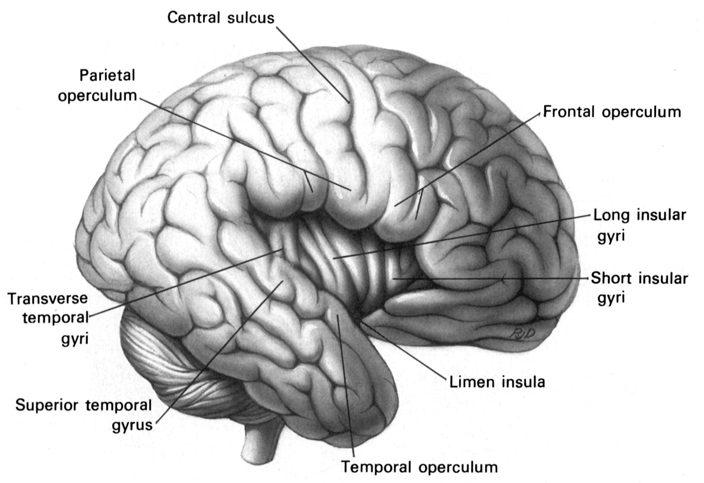

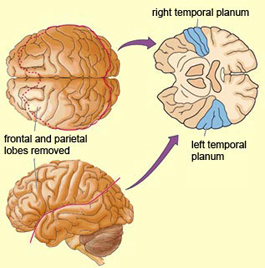

Moreover, it appears that during the early stages of neonatal and infant development, the role of the right hemisphere in language expression and perception is even more pronounced. As originally proposed by Joseph (1982, 2018a), language in the neonate and infant is dominated by the right hemisphere, which in turn accounts for the initial prosodic, melodic, and emotional qualities of their vocalizations. Of course, the left hemisphere is genetically programmed to gain functional dominance and to acquire the grammatical, temporal-sequential, word-rich, and expressive-motor aspects of speech. This is evident neuroanatomically in the asymmetries of the fetal and neonatal planum temporale (Wada et al., 2005; Witelson & Pallie, 1973), and in the fact that the left cerebral pyramidal tract descends and establishes synaptic contact with the brainstem and spinal cord in advance of the right (Kertesz & Geschwind 1971; Yakovlev & Rakic 1966).

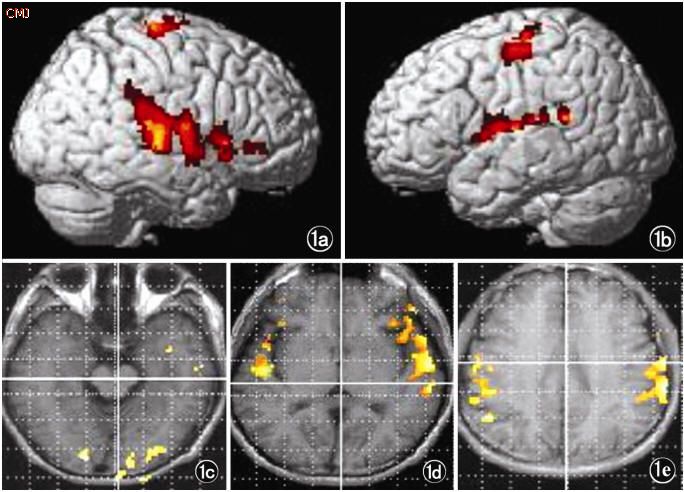

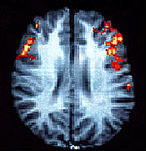

However, evoked potential studies indicate that the pattern of neurological activity during the performance of language tasks does not begin to resemble the adult pattern until the onset of puberty (Holcomb et al., 1992). Moreover, although the left hemisphere gradually acquires language, the right hemisphere continues to participate even in non-emotional language processing, including reading, as demonstrated by functional imaging studies (Bottini et al., 2004; Cuenod et al., 1995; Price et al., 1996).

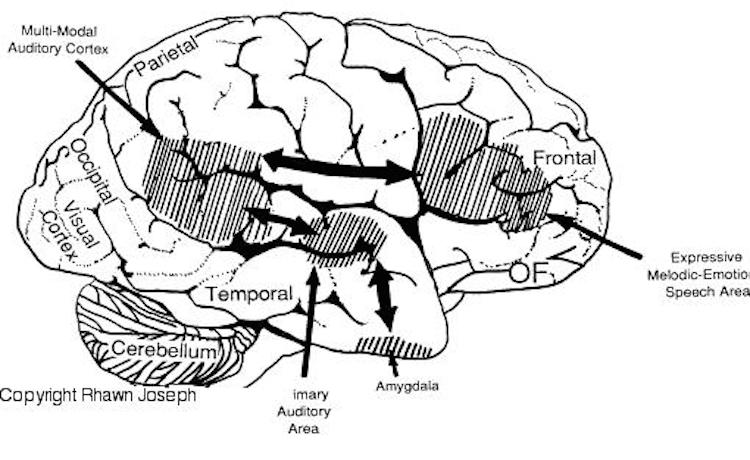

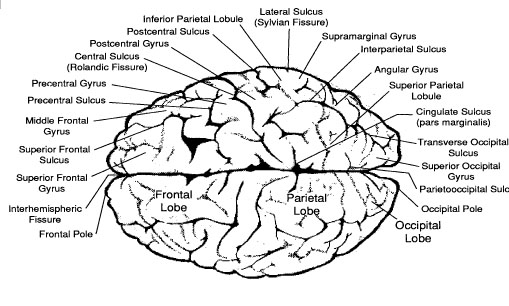

For example, the right temporal and parietal areas are activated when reading (Bottini et al., 2004; Price et al., 1996), and the right temporal lobe becomes highly active when interpreting the figurative aspects of language (Bottini et al., 2004). Moreover, bilateral frontal activation is seen when speaking, though this activity is greater on the left (Passingham, 2013; Peterson et al., 2018). In part, however, these latter findings may well reflect those aspects of right hemisphere language processing (temporal-parietal) and expression (frontal-parietal) that are concerned with extracting and vocalizing emotional, motivational, personal, and contextual details.

Language activation of the right and left hemispheres

For example, right frontal damage has been associated with a loss of emotional speech and emotional gesturing and a significantly reduced ability to mimic various nonlinguistic vocal patterns (Joseph 2018a; Ross, 2011, 1993; Shapiro & Danly, 1985). In these instances, speech can become flat and monotone or characterized by inflectional distortions.

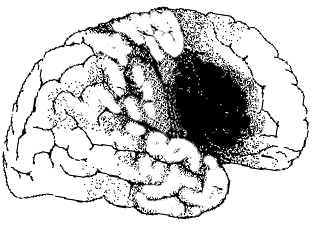

With lesions that involve the right temporal-parietal area, the ability to comprehend or produce appropriate verbal prosody or emotional speech, or to repeat emotional statements, is reduced significantly (Gorelick & Ross, 1987; Heilman et al. 2005; Lalande et al. 1992; Ross, 2011; Starkstein et al. 2004; Tucker et al. 2017). Indeed, when patients are presented with neutral sentences spoken in an emotional manner, right hemisphere damage disrupts perception and discrimination (Heilman et al. 2005; Lalande et al. 1992) and the comprehension of emotional prosody (Heilman et al. 2014; Starkstein et al. 2004), regardless of whether the emotion is positive or negative. Moreover, the ability to differentiate between different and even opposing emotional qualities (e.g., "sarcasm" vs. "irony", or "love" vs. "hate") can become distorted (Cicone et al. 1980; Kaplan et al. 2017), and the capacity to appreciate and comprehend humor or mirth may be attenuated (Gardner et al. 2005).

The semantic-contextual ability of the right hemisphere is not limited to prosodic and paralinguistic features, however, but includes the ability to process and recognize familiar, concrete, highly imageable words (J. Day, 2017; Deloch et al. 1987; Ellis & Shephard, 2005; Hines, 1976; Joseph 2018b; Landis et al., 1982; Mannhaupt, 2013), as well as emotional language in general.

The disconnected right hemisphere also can read printed words (Gazzaniga, 1970; Joseph, 1986b, 2018b; Levy, 2013; Sperry, 1982; Zaidel, 2013), retrieve objects with the left hand in response to direct and indirect verbal commands, e.g. "a container for liquids" (Joseph, 2018b; Sperry, 1982), and spell simple three- and four-letter words with cut-out letters (Sperry, 1982). However, it cannot comprehend complex, non-emotional, written or spoken language.

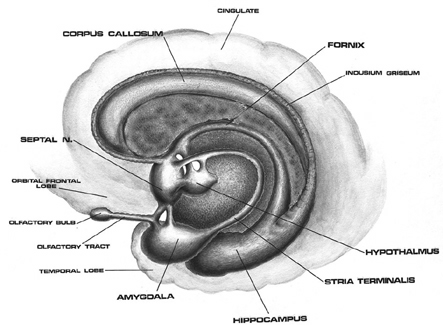

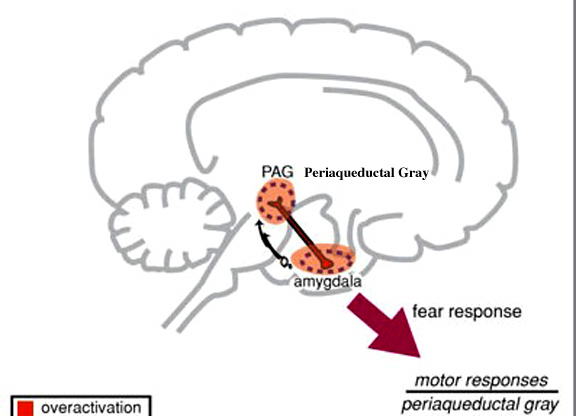

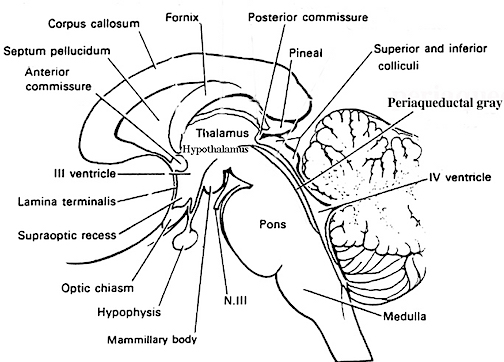

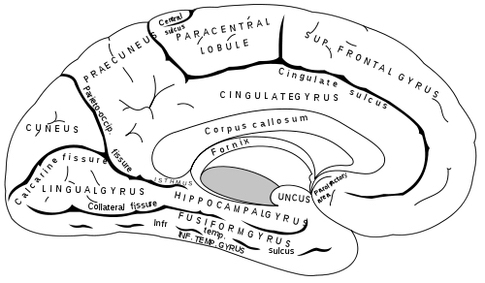

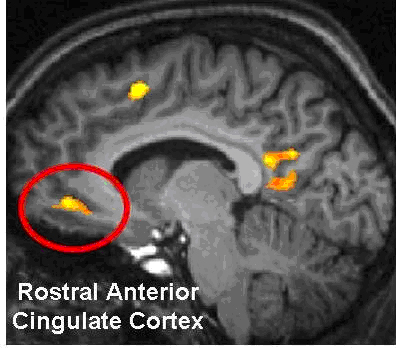

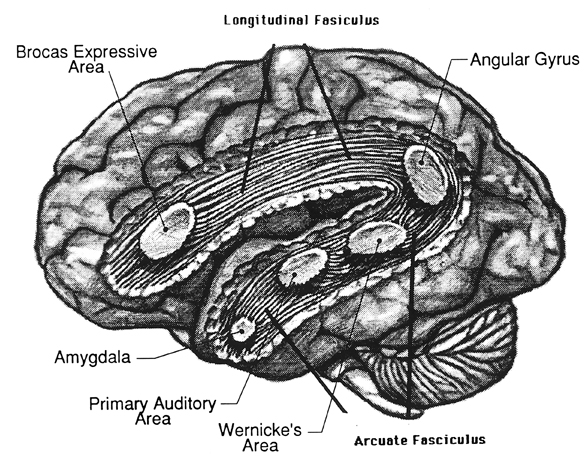

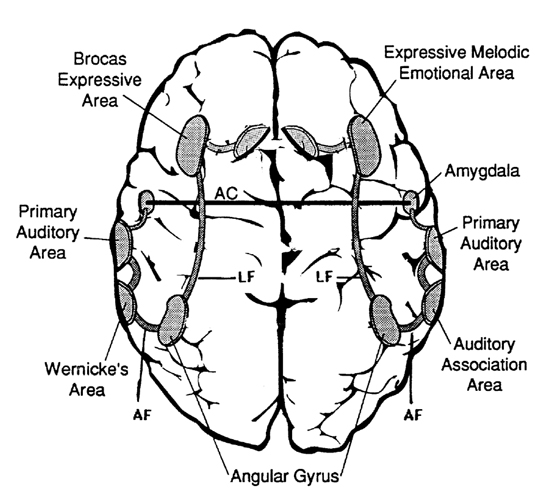

As noted, the right hemisphere dominance for vocal (and non-verbal) emotional expression and comprehension is believed to be secondary to hierarchical neocortical representation of limbic system functions. It may well be dominance by default, however. That is, at one time both hemispheres may well have contributed more or less equally to emotional expression, but with the evolution of language and right-handedness, the left hemisphere gradually lost this capacity, whereas it was retained in the right cerebrum (chapter 6). Even so, without the participation of the limbic system, the amygdala and cingulate gyrus in particular, emotional language capabilities would for the most part be nonexistent.

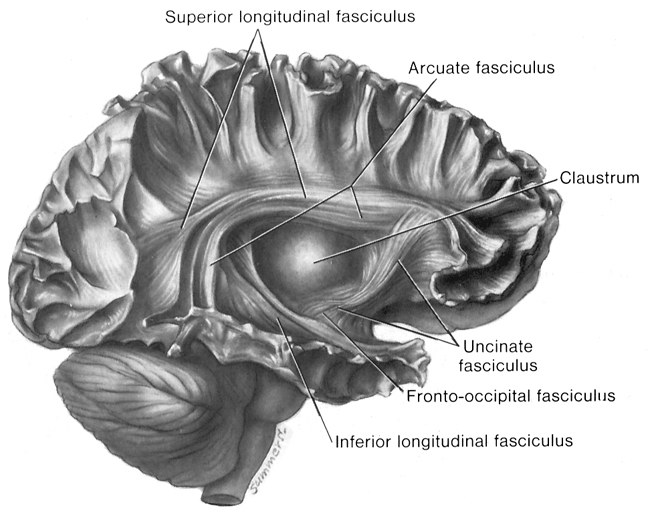

Accordingly, subcortical right cerebral lesions involving the anterior cingulate, the amygdala, or their fiber interconnections can also result in emotional expressive and receptive disorders (chapter 15). Similarly, lesions to the left temporal or frontal lobe may result in disconnection, which in turn may lead to distortions in the vocal expression or perception of emotional nuances within the left hemisphere.

SEX DIFFERENCES IN EMOTIONAL SOUND PRODUCTION & PERCEPTION

As detailed in chapters 5 and 15, the evolution of the anterior cingulate gyrus corresponded with the onset of long-term maternal care and, presumably, the advent of mother-infant vocalization and intercommunication. The presence of an adult female, in fact, appears to promote language production in infants, as well as in adult males and other females. Thus, non-threatening, complex social-emotional vocalizations are to a considerable degree associated with female-maternal behavior and the desire to form social-emotional attachments (chapter 15).

Across most social-living mammalian species, females tend to produce a greater range of social-emotional (limbic) vocalization (Joseph, 1993, 2009e). For example, human females tend to employ 5-6 different prosodic variations and to utilize the higher registers when conversing. They are also more likely to employ glissando, or sliding effects between stressed syllables, and they tend to talk faster as well (Brend, 2005; Coleman, 1971; Edelsky, 1979). Men tend to be more monotone, employing 2-3 variations on average, most of which hover around the lower registers (Brend, 2005; Coleman, 1971; Edelsky, 1979). Even when trying to emphasize a point, males are less likely to employ melodic extremes and instead tend to speak louder. This is not, however, a function of sex differences in the oral-laryngeal structures, but is due to the greater capacity of the female right hemisphere (and limbic system) to express and perceive these nuances, including, for example, the sounds of harmony (Evers et al., 2009).

For example, it has been repeatedly demonstrated that females are more emotionally expressive, and are more perceptive in regard to comprehending emotional verbal nuances (Burton & Levy, 2017; Hall, 1978; Soloman & Ali, 2001). This superior sensitivity includes the ability to feel and verbally express empathy (Burton & Levy, 2017; Safer, 2011) and the comprehension of emotional faces (Buck, Miller & Caul, 1974; Buck, Savin, Miller & Caul, 2001; see also Evans et al. 1995). In fact, from childhood to adulthood, women appear to be much more emotionally expressive than men in general (Gilbert, 1969; see Brody, 1985; Burton & Levy, 2017 for review).

As detailed in chapters 6 and 7, in addition to the evolution of the "maternal" anterior cingulate gyrus, this language superiority appears to be in part a consequence of the differential activities engaged in by men (hunting) vs. women (gathering, food preparation) for much of human history, and of the possibility that the female right hemisphere has more neocortical space committed to emotional perception and expression (chapter 7).

As noted, this superiority is probably also a consequence of limbic system sexual differentiation, and the role of the female limbic system in promoting maternal care and communication (see chapter 15). Thus, regardless of culture, human mothers tend to emphasize and even exaggerate social-emotional, and melodic-prosodic vocal features when interacting with their infants (Fernald, 1992; Fernald et al. 2017), which in turn appears to greatly influence infant emotional behavior and attention (Fernald, 2013).

"I did not have sex with that woman..." -President Bill Clinton before his impeachment for lying under oath.

"I am not a crook" --President Richard Nixon before resigning from the Presidency.

It is not uncommon for individuals to lie. Sometimes, however, they come to believe their own lies, and this can be the basis for self-deception. In the extreme, some individuals, following cerebral injury, make up lies so bizarre that they take on the form of confabulation.

In contrast to left frontal convexity lesions which can result in speech arrest (Broca's expressive aphasia) and/or significant reductions in verbal fluency, right frontal damage sometimes has been observed to result in speech release, excessive verbosity, tangentiality, and in the extreme, confabulation (Fischer et al. 1995; Joseph, 1986a, 2018a, 2009a).

When secondary to frontal damage, confabulation seems to be due to disinhibition and to difficulty monitoring responses, withholding answers, utilizing external or internal cues to make corrections, or suppressing the flow of tangential and circumstantial ideas (Shapiro et al. 2011; Stuss et al. 1978). When this occurs, the language axis of the left hemisphere becomes overwhelmed and flooded by irrelevant associations (Joseph, 1986a, 2018a, 2009a). In some cases the content of the confabulation may border on the bizarre and fantastical as loosely associated ideas become organized and anchored around fragments of current experience.

MEMORY AND CONFABULATION

As detailed in chapters 10 and 19, confabulation is associated with right frontal lesions in part because of the flooding of the speech areas with irrelevant associations. Yet another factor, however, is loss of memory, or rather, an inability to retrieve autobiographical and episodic details, even those stored using language. Indeed, the right frontal lobe is directly implicated in episodic and autobiographical memory retrieval, as also recently demonstrated using functional imaging (see chapter 19).

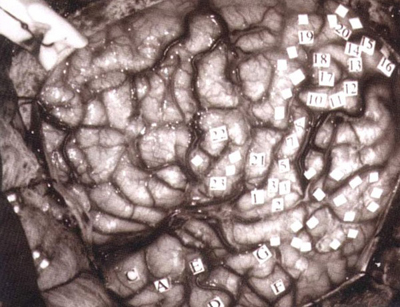

Specifically, episodic memories are perceptual and are stored in an autobiographical context, and during episodic retrieval there is significant activation of the right frontal lobe (Brewer et al., 1998; Dolan et al., 2013; Tulving et al., 2004; Kapur et al., 1995), right thalamus, and right medial temporal lobe (Dolan et al., 2013), even when the tasks require verbal processing. For example, in a test of unconscious memory, when subjects were presented with the stems of words that had previously been presented in complete form, there was increased blood flow in the right hippocampus and right frontal lobe (Squire et al., 1992). Right frontal activation was also seen in a recognition task involving sentences viewed the day before (Tulving et al., 2004). Presumably, activity increases in the right frontal lobe as a function of retrieval effort (Kapur et al., 1995), whereas injury to the right frontal lobe results in retrieval failure, and thus in a gap in the information and memories accessed, coupled with disinhibition and a flooding of the language axis with irrelevant associations.

GAP-FILLING

Confabulation also can result from lesions that involve the posterior portions of the right hemisphere, immaturity or surgical section of the corpus callosum, or destruction of fiber tracts that lead to the left hemisphere (Joseph, 1982, 1986ab, 2018ab; Joseph et al. 2014). This results in incomplete information transfer and reception within discrete brain regions, so that one area of the brain and mind is disconnected from another.

As a consequence, because the language axis of the left hemisphere is unable to gain access to needed information, it attempts to fill the gap with information that is related in some manner to the fragments received. However, because the language areas are disconnected from the source of needed information, it cannot be informed that what it is saying (or, rather, making up) is erroneous, at least insofar as the damaged modality is concerned.

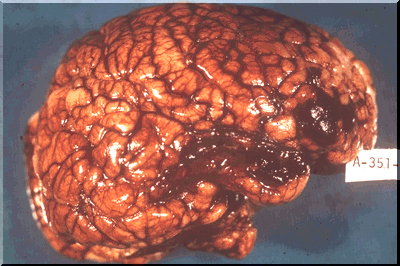

Confabulation and delusional denial also often accompany neglect and body-image disturbances secondary to right cerebral (parietal) damage (Joseph 1982, 1986a, 2018a). For example, the left hemisphere may claim that a paralyzed left leg or arm is normal or that it belongs to someone other than the patient. This occurs in many cases because somesthetic body information no longer is being processed or transferred by the damaged right hemisphere; the body image and the memory of the left half of the body have been deleted. In all these instances, however, although the damage may be in the right hemisphere, it is the speaking half of the brain that confabulates.

Individuals with extensive left-hemisphere damage and/or severe forms of expressive aphasia, although unable to discourse fluently, may be capable of swearing, singing, praying or making statements of self-pity (Gardner, 2005; Goldstein, 1942; Smith, 1966; Smith & Burklund, 1966; Yamadori et al., 2017). Even when the entire left hemisphere has been removed, the ability to sing familiar songs or even learn new ones may be preserved (Smith, 1966; Smith & Burklund, 1966), although in the absence of music the patient would be unable to say the very words that he or she had just sung (Goldstein, 1942). The preserved ability to sing has, in fact, been utilized to promote linguistic recovery in aphasic patients, i.e., melodic intonation therapy (Albert et al. 1973; Helm-Estabrooks, 2013).

Similarly, there have been reports that some musicians and composers suffering from aphasia and/or significant left hemisphere impairment were able to continue their work (Alajouanine, 1948; Critchley, 1953; Luria, 1973). In some cases, despite severe receptive aphasia and/or a disrupted ability to read written language (alexia), the ability to read music or to continue composing was preserved (Gates & Bradshaw, 2017; Luria, 1973).

One famous example is that of Maurice Ravel, who suffered an injury to the left half of his brain in an auto accident. This resulted in ideomotor apraxia, dysgraphia, and moderate disturbances in comprehending speech (i.e., Wernicke's aphasia). Nevertheless, he had no difficulty recognizing various musical compositions, was able to detect even minor errors when compositions were played, and was able to correct those errors by playing the passages correctly on the piano (Alajouanine, 1948).

Right hemisphere damage also can disrupt the ability to sing or carry a tune and can cause toneless, monotonous speech, as well as abolish the capacity to obtain pleasure while listening to music (Reese, 1948; Ross, 2011; Shapiro & Danly, 1985), a condition referred to as amusia. For example, Freeman and Williams (1953) report that removal of the right amygdala in one patient resulted in a great change in the pitch and timbre of speech and that the ability to sing also was severely affected. Similarly, when the right hemisphere is anesthetized, the melodic aspects of speech and singing become significantly impaired (Gordon & Bogen, 1974).

It also has been demonstrated consistently in normals (such as in dichotic listening studies) and in brain-injured individuals that the right hemisphere predominates in the perception (and/or expression) of timbre, chords, tone, pitch, loudness, melody, meter, tempo, and intensity (Breitling et al. 1987; Curry, 1967; R. Day et al. 1971; Gates & Bradshaw, 2017; Gordon, 1970; Gordon & Bogen, 1974; Kester et al. 2013; Kimura, 1964; Knox & Kimura, 1970; McFarland & Fortin, 1982; Milner, 1962; Molfese et al. 2005; Piazza, 1980; Reese, 1948; Segalowitz & Plantery, 1985; Spellacy, 1970; Swisher et al. 1969; Tsunoda, 2005; Zurif, 1974), which, together with harmony, are the major components of a musical stimulus.

For example, a functional imaging study found that when professional pianists played Bach (the third movement of Bach's Italian Concerto), there was increased activity in the right but not the left temporal lobe, whereas when they played scales, activity increased in the left but not the right temporal lobe (Parsons & Fox, 2013). Likewise, Evers and colleagues (2009), evaluating cerebral blood flow velocity, found a right hemisphere increase in blood flow when listening to harmony (but not rhythm) among non-musicians in general, and especially among females.

However, this does not appear to be the case with professional musicians, who in some respects tend to treat music as a mathematical language that is subject to rhythmic analysis. As noted, when professional pianists played scales, activity increased in the left but not the right temporal lobe (Parsons & Fox, 2013). Moreover, Evers and colleagues (2009) found that musicians displayed an increase in left hemisphere blood flow when listening to both harmony and rhythm.

ENVIRONMENTAL AND HUMAN/ANIMAL SOUNDS

In addition to music, the right hemisphere has been shown to be superior to the left in discerning and recognizing nonverbal, animal, and environmental sounds (Curry, 1967; Joseph, 2018b; Kimura, 1963; King & Kimura, 2001; Knox & Kimura, 1970; Nielsen, 1946; Piazza, 1980; Roland et al. 2011; Schnider et al. 2004; Spreen et al. 1965; Tsunoda, 2005).

Similarly, damage involving select areas within the right hemisphere not only disturbs the capacity to discern musical and social-emotional vocal nuances but may disrupt the ability to perceive, recognize, or discriminate between a diverse number of sounds that occur naturally within the environment (Fujii et al., 2017; Joseph, 1993; Nielsen, 1946; Schnider et al. 2004; Spreen et al. 1965), such as water splashing, a door banging, applause, or even a typewriter; this condition also plagues the disconnected left hemisphere (Joseph, 2018b).

A 47-year-old woman I examined, who was subsequently found to have a calcium cyst growing from the skull into the right superior temporal lobe, was able to name pictures of animals, tools, and household objects. However, she was almost completely unable to recognize and correctly name briefly presented animal and human sounds (e.g., a baby crying, a crowd cheering, a lion roaring). She was better able, though still impaired, to recognize non-living sounds such as a creaking door or a hammer hammering. Coupled with other findings to be reviewed below, this raises the possibility that the right temporal lobe is better able to recognize living and true environmental sounds, whereas the left may be better able to recognize and name non-living sounds.

The possibility has been raised that music, verbal emotion, and nonverbal environmental-living sounds are, in some manner, phylogenetically linked (Joseph, 1982, 2018a, 1993). For example, right hemisphere dominance for music may be a limbic outgrowth and/or strongly related to its capacity to discern and recognize environmental acoustics, as well as to its ability to mimic these and other nonverbal and emotional nuances. That is, music may have been invented as a form of mimicry, and/or as a natural modification of what has been described as "limbic language" (a term coined by Joseph, 1982).

For example, it is plausible that primitive man and woman's first exposure to the sounds of music was environmentally embedded, for musical sounds are heard frequently throughout nature (e.g., birds singing, the whistling of the wind, the humming of bees or insects). Indeed, bird songs can encompass sounds that are "flute-like, truly chime- or bell-like, violin- or guitar-like" and "some are almost as tender as a boy soprano" (Hartshorne, 1973, p. 36).

MUSIC AND EMOTION

Music is related strongly to emotion and, in fact, may not only be "pleasing to the ear," but invested with emotional significance. For example, when played in a major key, music sounds happy or joyful. When played in a minor key, music often is perceived as sad or melancholic. We are all familiar with the "blues" and perhaps at one time or another have felt like "singing for joy," or have told someone, "You make my heart sing!"

Interestingly, it has been reported that music can act to accelerate pulse rate (Reese, 1948), raise or lower blood pressure, and, thus, alter the rhythm of the heart's beat. Rhythm, of course, is a major component of music.

Left Hemisphere Musical Contributions.

There is some evidence that certain aspects of pitch, time sense, and rhythm are mediated to a similar degree by both cerebral hemispheres (Milner, 1962), with rhythm being associated with increased left cerebral activity (Evers et al., 2009). Of course, time sense and rhythm are also highly important in speech perception. These findings support the possibility of a left hemisphere contribution to music. In fact, some authors have argued that receptive amusia is due to left hemisphere damage and that expressive amusia is due to right hemisphere dysfunction (Wertheim, 1969).

It also has been reported that some musicians tend to show a left hemisphere dominance in the perception of certain aspects of music (Gates & Bradshaw, 2017), particularly rhythm (Evers et al., 2009). Indeed, when the sequential and rhythmical aspects of music are emphasized, the left hemisphere becomes increasingly involved (Breitling et al. 2014; Halperin et al. 1973). In this regard, it seems that when music is treated as a type of language to be acquired, or when its mathematical and temporal-sequential features are emphasized (Breitling et al., 2014), the left cerebral hemisphere becomes heavily involved in its production and perception, especially in professional musicians (Evers et al., 2009).

Even in non-musicians, however, the left hemisphere typically displays, if not dominance, then at least an equal capability in the production of rhythm. Thus, just as the right hemisphere makes important contributions to the perception and expression of language, it takes both halves of the brain to make music.