Rhawn Gabriel Joseph, Ph.D.

Brain Research Laboratory

BrainMind.com

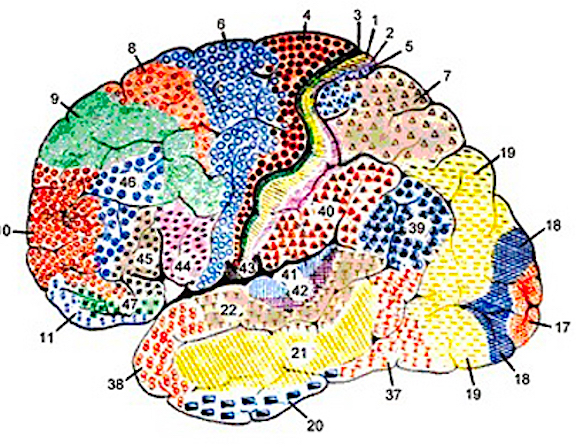

TEMPORAL LOBE TOPOGRAPHY

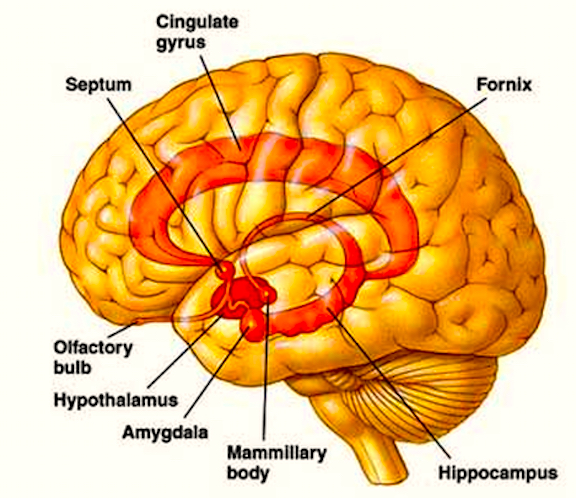

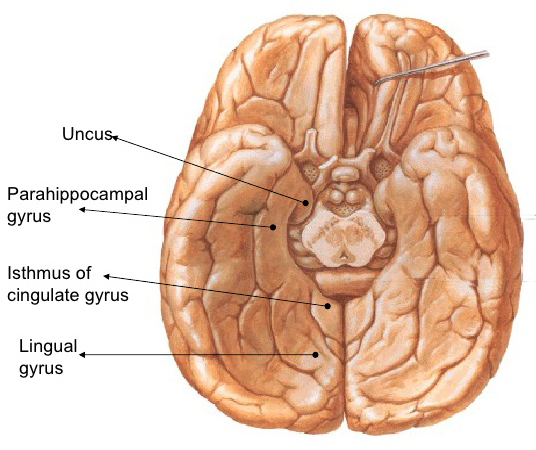

The temporal lobe is the most heterogeneous of the four lobes of the human brain, as it consists of six-layered neocortex, four- to five-layered mesocortex, and three-layered allocortex, with the hippocampus and amygdala forming its limbic core.

Because so much of the temporal lobe evolved from these limbic nuclei, it consists of a mixture of allocortex, mesocortex, and neocortex, with allocortex and mesocortex being especially prominent in and around the medial-anterior, inferiorly located uncus, which abuts the amygdala and hippocampus lying beneath it.

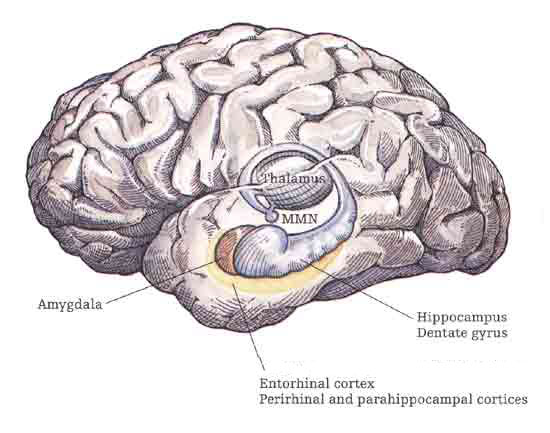

Given the role of the amygdala and hippocampus in memory, emotion, attention, and the processing of complex auditory and visual stimuli, the temporal lobe became similarly organized (Gloor, 1997). Broadly considered, the neocortical surface of the temporal lobes can be subdivided into three main convolutions, the superior, middle, and inferior temporal gyri, which in turn are separated and distinguished by the Sylvian fissure and the superior, middle, and inferior temporal sulci. Each of these subdivisions performs different (albeit overlapping) functions, i.e. auditory, visual, and auditory-visual-affective perception including memory storage.

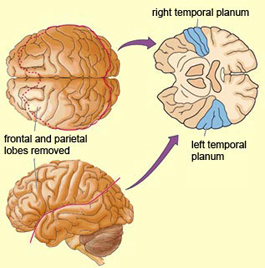

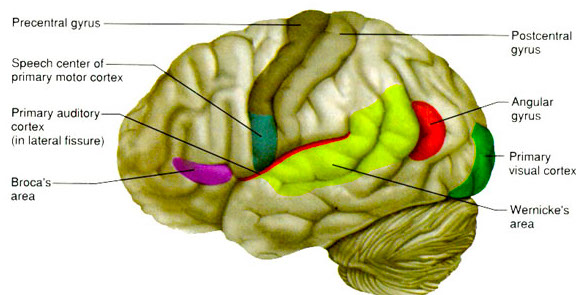

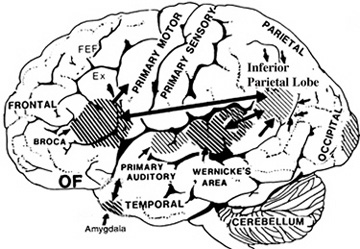

Specifically, the anterior and superior temporal lobes are concerned with complex auditory and linguistic functioning and the comprehension of language (Binder et al., 1994; Edeline et al. 1990; Geschwind 1965; Goodglass & Kaplan, 2013; Keser et al. 2011; Nelken et al., 2003; Nishimura et al., 2006; Price, 1997). The importance of the superior temporal lobe in auditory functioning, and (in the left hemisphere) language, is indicated by the fact that the superior temporal lobe, including the primary auditory area, is larger in humans than in other primates and mammals. In addition, Heschl's gyri are larger on the left, and the left planum temporale is 10 times larger (among the majority of the population) than its counterpart on the right (Geschwind & Levitsky, 1968). The auditory areas, however, extend along the axis of the superior temporal lobe in an anterior-inferior and posterior-superior arc, such that the connections of the primary auditory area extend outward in all directions, innervating, in temporal sequence, the second and third order auditory association areas (Pandya & Yeterian, 1985), which in turn project back to the primary auditory area.

The inferior and medial temporal lobe harbors the amygdala and hippocampus, performs complex visual integrative activities including visual closure, and contains neurons which respond selectively to faces and complex geometric and visual stimuli (Gross & Graziano 1995; Nakamura et al. 2012; Rolls 1992; Tovee et al. 2004). The inferior and middle temporal lobes are the recipients of two diverging (dorsal and ventral) streams of visual input arising from within the occipital lobe and thalamus (Ungerleider & Mishkin, 1982); i.e. the pulvinar and dorsal medial nucleus of the thalamus. The dorsal stream is more concerned with the detection of motion and movement, orientation, and binocular disparity, whereas the ventral stream is concerned with the discrimination of shapes, textures, objects and faces, including individual faces (Baylis et al., 1985; Perrett et al., 1984, 1992). This information flows from the primary visual to the visual association areas, is received and processed in the temporal lobes, and is then shunted to the parietal lobe and to the amygdala and entorhinal cortex (the gateway to the hippocampus), where it may then be learned and stored in memory.

The temporal lobes, however, also receive extensive projections from the somesthetic and visual association areas, and process gustatory, visceral, and olfactory sensations (Jones & Powell, 1970; Previc 1990; Seltzer & Pandya, 1978) including the feeling of hunger (Fisher 1994). Hence, the temporal lobes perform an exceedingly complex array of divergent and interrelated functions.

FUNCTIONAL OVERVIEW

The right and left temporal lobes are functionally lateralized, with the left being more concerned with non-emotional language functions, including, via the inferior-medial and basal temporal lobes, reading and verbal (as verbal-visual) memory. For example, functional imaging indicates that during reading and speaking the left posterior temporal lobe becomes highly active, presumably due to its involvement in lexical processing (Binder et al., 1994; Howard et al., 2009). The superior temporal lobe (and supramarginal gyrus) also becomes more active when reading aloud than when reading silently (Bookheimer, et al., 1995), and becomes active during semantic processing, as does the left angular gyrus (Price, 1997). These same temporal areas are activated during word generation (Shaywitz, et al., 1995; Warburton, et al., 2016) and sentence comprehension tasks (Bottini, et al., 2004; Fletcher et al., 2015), and (in conjunction with the angular gyrus) become highly active when retrieving the meaning of words during semantic processing and semantic decision tasks (Price, 1997). Likewise, single cell recordings from the auditory areas in the temporal lobe demonstrate that neurons become activated in response to speech, including the sound of the patient's own voice (Creutzfeldt, et al., 1989).

By contrast, the right is more concerned with perceiving emotional and melodic auditory signals (Evers et al., 2013; Parsons & Fox, 1997; Ross, 1993), and is dominant for storing and recalling emotional and visual memories (Abrams & Taylor, 1979; Cimino, et al. 1991; Cohen, Penick & Tarter, 1974; Deglin & Nikolaenko, 1975; Kimura, 1963; Shagass et al., 1979; Wexler, 1973). The right temporal lobe becomes highly active when engaged in interpreting the figurative aspects of language (Bottini et al., 1994).

However, there is considerable functional overlap and these structures often become simultaneously activated when performing various tasks. For example, as based on functional imaging, when reading, the right posterior temporal cortex also becomes highly active (Bookheimer, et al., 1995; Bottini et al., 1994; Price, et al., 2016), and when making semantic decisions (involving reading words with similar meanings), there is increased activity bilaterally (Shaywitz, et al., 1995).

Presumably, in part, both temporal lobes become activated when speaking and reading, due to the left temporal lobe's specialization for extracting the semantic, temporal, sequential, and the syntactic elements of speech, thereby making language comprehension possible. By contrast, the right temporal lobe becomes active as it attempts to perceive, extract and comprehend the emotional (as well as the semantic) and gestalt aspects of speech and written language.

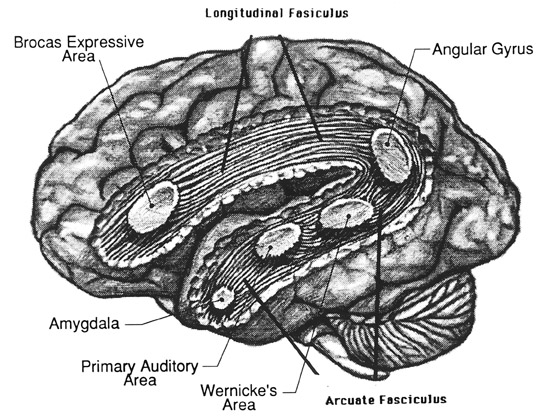

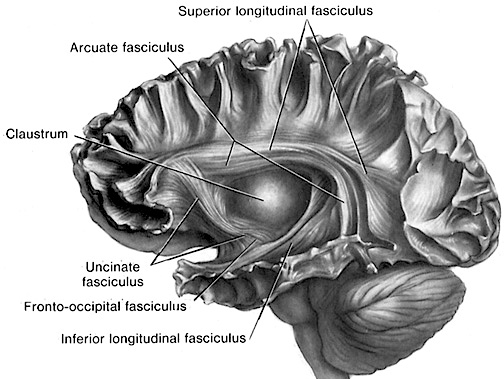

AUDITORY NEOCORTEX

Although the various cytoarchitectural functional regions are not well demarcated via the use of Brodmann's maps, it is possible to very loosely define the superior-temporal, anterior-inferior, and anterior-middle temporal lobes as auditory cortex (Pandya & Yeterian, 1985). These regions are linked together by local circuit (inter-) neurons and via a rich belt of projection fibers which include the arcuate and inferior fasciculus, which project in an anterior-inferior and posterior-superior arc innervating (inferiorly) the amygdala and entorhinal cortex (Amaral et al., 1983) and (posteriorly) the inferior parietal lobule, as is evident from brain dissection. It is also via the arcuate and inferior fasciculus that the inferior temporal lobe, the entorhinal cortex (the "gateway to the hippocampus"), and the amygdala receive complex auditory information from, and transfer it to, the primary and secondary auditory cortex, which simultaneously receives auditory input from the medial geniculate of the thalamus, the pulvinar, and (sparingly) the midbrain (Amaral et al., 1983; Pandya & Yeterian, 1985). As will be detailed below, it is within the primary and neocortical auditory association areas that linguistically complex auditory signals are analyzed and reorganized so as to give rise to complex, grammatically correct, human language.

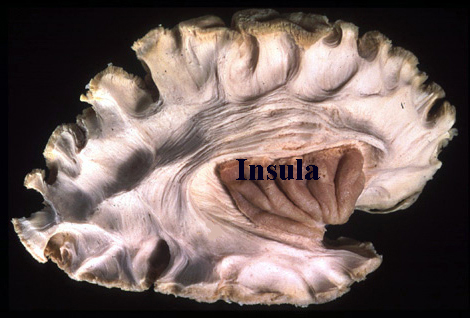

It is noteworthy that immediately beneath the insula and approaching the auditory neocortex is a thick band of amygdala-cortex, the claustrum. Over the course of evolution the claustrum apparently split off from the amygdala due to the expansion of the temporal lobe and the passage of additional axons coursing throughout the white matter (Gilles et al., 1983) including the arcuate fasciculus. Nevertheless, the claustrum maintains rich interconnections with the amygdala, the insula, and to some extent, the auditory cortex (Gilles et al., 1983). This is evident from dissection of the human brain, which reveals that the fibers of the arcuate fasciculus do not merely pass through this structure (breaking it up in the process) but send and receive fibers from it.

[-INSERT FIGURE 5 & 6 ABOUT HERE-]

In 80-90% of right-handers and in 50-80% of left-handers, the left hemisphere is dominant for expressive and receptive speech (Frost et al., 2013; Pujol, et al., 2013). In humans, the auditory cortex including Wernicke's area (i.e. the planum temporale) is generally larger in the left temporal lobe (Geschwind & Levitsky, 1968; Geschwind & Galaburda 1985; Habib et al. 1995; Wada et al., 1975; Witelson & Pallie, 1973). Specifically, as originally determined by Geschwind & Levitsky (1968), the planum temporale is larger in the left hemisphere in 65% of brains studied and larger on the right in 25%, whereas there is no difference in 10%. Geschwind, Galaburda and colleagues argue that the larger left planum temporale is a significant factor in the establishment of left hemisphere dominance for language.

FILTERING, FEEDBACK & TEMPORAL-SEQUENTIAL REORGANIZATION

The old cortical centers located in the midbrain and brain stem evolved long before the appearance of neocortex and have long been adapted and specialized for performing a considerable degree of information analysis (Buchwald et al. 1966). This is evident from observing the behavior of reptiles and amphibians where auditory cortex is either absent or minimally developed.

Moreover, many of these old cortical nuclei also project back to each other such that each subcortical structure might hear and analyze the same sound repeatedly (Brodal 1981; Pandya & Yeterian, 1985). In this manner the brain is able to heighten or diminish the amplitude of various sounds via feedback adjustment (Joseph, 1993; Luria, 1980). In fact, not only is feedback provided, but the actual order of the sound elements perceived can be rearranged when they are played back.

This same process continues at the level of the neocortex, which has the advantage of being the recipient of signals that have already been highly processed and analyzed (Buchwald et al. 1966; Edeline et al. 1990; Schwarz & Tomlinson 1990). Primary auditory neurons are especially responsive to the temporal sequencing of acoustic stimuli (Wolberg & Newman, 1972). It is in this manner, coupled with the capacity of auditory neurons to extract non-random sounds from noise, that language related sounds begin to be organized and recognized (e.g. Nelken et al., 2013; Schwarz & Tomlinson 1990).

For example, neurons located in the primary auditory cortex can determine and recognize differences and similarities between harmonic complex tones, and demonstrate auditory response patterns that vary in response to lower and higher frequencies and to specific tones (Nelken et al. 2013; Schwarz & Tomlinson 1990). Some display "tuning bandwidths" for pure tones, whereas others are able to identify up to seven components of harmonic complex tones. In this manner, pitch can also be discerned (e.g. Pantev et al. 1989).

Sustained Auditory Activity

One of the main functions of the primary auditory neocortical receptive area appears to be the retention of sounds for brief time periods (up to a second) so that temporal and sequential features may be extracted and discrepancies in spatial location identified; i.e. so that we can determine from where a sound may have originated (see Mills & Rollman 1980). This prolonged activity presumably also allows for additional processing, so that comparisons can be made between sounds that were just previously received and those which are just arriving. Hence, as based on functional imaging, the left temporal lobe becomes increasingly active as word length increases (Price, 1997), presumably due to the increased processing necessary.

Moreover, via their sustained activity, these neurons are able to prolong (perhaps via a perseverating feedback loop with the thalamus) the duration of certain sounds so that they are more amenable to analysis--which may explain why activity increases in response to unfamiliar words and as word length increases (Price, 1997). In this manner, even complex sounds can be broken down into components which are then separately analyzed. Hence, sounds can be perceived as sustained temporal sequences. It is perhaps due to this attribute that injuries to the superior temporal lobe result in short-term auditory memory deficits as well as disturbances in auditory discrimination (Hauser, 1997; Heffner & Heffner, 1986).

Although it is apparent that the auditory regions of both cerebral hemispheres are capable of discerning and extracting temporal-sequential rhythmic acoustics (Milner, 1962; Wolberg & Newman, 1972), the left temporal lobe apparently contains a greater concentration of neurons specialized for this purpose as the left half of the brain is clearly superior in this capacity (Evers et al., 2013).

For example, the left hemisphere has been repeatedly shown to be specialized for sorting, separating and extracting, in a segmented fashion, the phonetic and temporal-sequential or linguistic-articulatory features of incoming auditory information so as to identify speech units. It is also more sensitive than the right hemisphere to rapidly changing acoustic cues, be they verbal or non-verbal (Shankweiler & Studdert-Kennedy, 1967; Studdert-Kennedy & Shankweiler, 1970). Moreover, via dichotic listening tasks, the right ear (left temporal lobe) has been shown to be dominant for the perception of real words, word lists, numbers, backwards speech, Morse code, consonants, consonant-vowel syllables, nonsense syllables, the transitional elements of speech, single phonemes, and rhymes. In addition, and as based on functional imaging, activity significantly increases in the left hemisphere during language tasks (Nelken et al., 2013; Nishimura et al., 2013), including reading (Binder et al., 1994; Price, 1997).

In part the association of the left hemisphere and left temporal lobe with performing complex temporal-sequential and linguistic analysis is due to its interconnections with the inferior parietal lobule (see chapters 6, 11)--a structure which also becomes highly active when reading and naming (Bookheimer, et al., 1995; Menard, et al., 2016; Price, 1997; Vandenberghe, et al., 2016) and which acts as a phonological storehouse that becomes activated during short-term memory and word retrieval (Demonet, et al., 2004; Paulesu, et al., 2013; Price, 2017).

As noted in chapters 6, 11, the inferior parietal lobule is in part an outgrowth of the superior temporal lobe but also consists of somesthetic and visual neocortical tissue. However, the inferior parietal lobule also acts to impose temporal sequences on incoming auditory, as well as visual and somesthetic stimuli, and also serves to provide (via its extensive interconnections with surrounding brain tissue) and integrate related associations thus making complex and grammatically correct human language possible (chapters 5, 11).

However, the language capacities of the left temporal lobe are also made possible via feedback from "subcortical" auditory neurons, and via sustained (vs diminished) activity and analysis. That is, because of these "feedback" loops the importance and even order of the sounds perceived can be changed, filtered or heightened; an extremely important development in regard to the acquisition of human language (Joseph, 1993; Luria, 1980). In this manner sound elements composed of consonants, vowels, phonemes and morphemes can be more readily identified, particularly within the auditory neocortex of the left half of the brain (Cutting 1974; Shankweiler & Studdert-Kennedy 1966, 1967; Studdert-Kennedy & Shankweiler, 1970).

For example, normally a single phoneme may be scattered over several neighboring units of sounds. A single sound segment may in turn carry several successive phonemes. Therefore a considerable amount of reanalysis, reordering, or filtering of these signals is required so that comprehension can occur (Joseph 1993). These processes, however, presumably occur at both the neocortical and old cortical level. In this manner a phoneme can be identified, extracted and analyzed and placed in its proper category and temporal position (see edited volume by Mattingly & Studdert-Kennedy, 1991 for related discussion).

Take, for example, three sound units, "t-k-a," which are transmitted to the superior temporal auditory receiving area. Via a feedback loop the primary auditory area can send any one of these units back to the thalamus, which again sends it back to the temporal lobe, thus amplifying the signal and/or allowing their order to be rearranged, e.g. "t-a-k" or "k-a-t." A multitude of such interactions are in fact possible so that whole strings of sounds can be arranged or rearranged in a certain order (Joseph 1993). Mutual feedback characterizes most other neocortical-thalamic interactions as well, be it touch, audition, or vision (Brodal 1981; Carpenter 1991; Parent 1995).
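As a rough illustration of the reordering just described, the following Python sketch enumerates every order in which three sound units can be arranged. It is a toy combinatorial model only, not a model of thalamocortical circuitry, and the function name `possible_orderings` is hypothetical.

```python
from itertools import permutations


def possible_orderings(units):
    """Return every distinct ordering of the given sound units (toy model)."""
    return ["-".join(p) for p in permutations(units)]


# The three units from the example in the text.
orderings = possible_orderings(["t", "k", "a"])

print(len(orderings))  # number of possible orders for three units
print(orderings)       # includes "t-a-k" and "k-a-t"
```

Three units already allow six orders, and the count grows factorially with the number of units, which gives a sense of how many rearrangements are available for longer strings of sounds.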

Via these interactions and feedback loops sounds can be repeated, their order can be rearranged, and the amplitude of specific auditory signals can be enhanced whereas others can be filtered out. It is in this manner, coupled with experience and learning (Edeline et al. 1990; Diamond & Weinberger 1989) that fine tuning of the nervous system occurs so that specific signals are attended to, perceived, processed, committed to memory and so on. Indeed, a significant degree of plasticity in response to experience as well as auditory filtering occurs throughout the brain not only in regard to sound, but visual and tactual information as well (Greenough et al. 1987; Hubel & Wiesel, 1970; Juliano et al. 1994). Moreover, the same process occurs when organizing words for expression.

This ability to perform so many operations on individual sound units has in turn greatly contributed to the development of human speech and language. For example, the ability to hear human speech requires that temporal resolutions occur at great speed so as to sort through the overlapping and intermixed signals being perceived. This requires that these sounds are processed in parallel, or stored briefly and then replayed in a different order so that discrepancies due to overlap in sounds can be adjusted for (see Mattingly & Studdert-Kennedy, 1991 for related discussion), and this is what occurs many times over via the interactions of the old cortex and the neocortex. Similarly, when speaking or thinking, sound units must also be arranged in a particular order so that what we say is comprehensible to others and so that we may understand our own verbal thoughts.

HEARING SOUNDS & LANGUAGE

Humans are capable of uttering thousands of different sounds, all of which are easily detected by the human ear. And yet, although our vocabulary is immense, human speech actually consists of about 12-60 units of sound, depending, for example, on whether one is speaking Hawaiian or English. The English vocabulary consists of several hundred thousand words which are based on combinations of just 45 different sounds.

Animals too, however, are capable of a vast number of utterances. In fact monkeys and apes employ between 20-25 units of sound, whereas a fox employs 36. However, these animals cannot string these sounds together so as to create a spoken language. Most animals tend to use only a few units of sound at one time which varies depending on their situation, e.g. lost, afraid, playing.

Humans combine these sounds to make a huge number of words. In fact, employing only 13 sound units, humans are able to combine them to form five million word sounds (see Fodor & Katz 1964; Slobin 1971).
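The text does not state how the figure of five million was derived. One simple way to reach that order of magnitude, assumed here purely for illustration, is to count every ordered string of up to six sounds drawn (with repetition) from 13 units. The maximum length of six and the function name `count_sequences` are assumptions, not taken from the cited works.

```python
def count_sequences(n_units, max_len):
    """Count ordered strings of length 1..max_len over n_units sounds,
    with repetition allowed (an assumed counting scheme)."""
    return sum(n_units ** k for k in range(1, max_len + 1))


exactly_six = 13 ** 6                  # strings of exactly six sounds
up_to_six = count_sequences(13, 6)     # strings of one to six sounds

print(exactly_six)  # 4826809
print(up_to_six)    # 5229042
```

Under this assumption both counts land near five million, which shows how quickly a small inventory of sound units yields a very large space of possible word sounds.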

PHONEMES

As noted, the auditory areas are specialized to perceive a variety of sounds including those involving temporal sequences and abrupt changes in acoustics--characteristics which also define phonemes, which are the smallest units of sound and which are considered the building blocks of human language. For example, p and b as in bet vs pet are phonemes. When phonemes are strung together as a unit they in turn comprise morphemes. Morphemes such as "cat" are composed of three phonemes, "k,a,t". Hence, phonemes must be arranged in a particular temporal order to form a morpheme, and phoneme perception is associated with the auditory area of the left temporal lobe (Ojemann, 1991).

Morphemes in turn make up the smallest unit of meaningful sounds such as those used to signal relationships such as "er" as in "he is older than she." All languages have rules that govern the number of phonemes, their relationships to morphemes and how morphemes may be combined so as to produce meaningful sounds (Fodor & Katz 1964; Slobin 1971).

Each phoneme is composed of multiple frequencies which are in turn processed in great detail once they are transmitted to the superior temporal lobe. As noted, the primary auditory area is tonotopically organized, such that related albeit differing auditory frequencies are analyzed by adjoining cell columns. As also noted, however, it is the left temporal lobe which has been shown to be dominant for the perception of real words, word lists, numbers, syllables, the transitional elements of speech, as well as single phonemes, consonants, and consonant-vowel syllables.

CONSONANTS AND VOWELS

In general, there are two large classes of speech sounds: consonants and vowels. Consonants by nature are brief and transitional and have identification boundaries which are sharply defined. These boundaries enable different consonants to be discerned accurately (Mattingly & Studdert-Kennedy, 1991). Vowels are more continuous in nature and in this regard, the right half of the brain plays an important role in their perception.

Consonants are more important in regard to left cerebral speech perception. This is because they are composed of segments of rapidly changing frequencies, including changes in the duration, direction and magnitude of sound segments interspersed with periods of silence. These transitions occur in 50 msecs or less, which in turn requires that the left half of the brain take responsibility for perceiving them.

In contrast, vowels consist of slowly changing or steady frequencies with transitions taking 350 or more msec. In this regard, vowels are more like natural environmental sounds which are more continuous in nature, even those which are brief such as the snap of a twig. They are also more likely to be processed and perceived by the right half of the brain, though the left cerebrum also plays a role in their perception (see chapter 11).

The differential involvement of the right and left hemisphere in processing consonants and vowels is a function of their neuroanatomical organization and the fact that the left cerebrum is specialized for dealing with sequential information. Moreover, the left temporal lobe is able to make fine temporal discriminations with intervals between sounds as small as 50 msec. However, the right hemisphere needs 8-10 times longer and has difficulty discriminating the order of sounds if they are separated by less than 350 msecs.

Hence, consonants are perceived in a wholly different manner from vowels. Vowels yield to nuclei involved in "continuous perception" and are processed by the right (as well as left) half of the brain. Consonants are more a function of "categorical perception" and are processed in the left half of the cerebrum. In fact, the left temporal lobe acts on both vowels and consonants during the process of perception so as to sort these signals into distinct patterns of segments via which these sounds become classified and categorized (Joseph 1993).

Nevertheless, be they processed by the right or left brain, both vowels and consonants are significant in speech perception. Vowels are particularly important when we consider their significant role in communicating emotional status and intent.

GRAMMAR & AUDITORY CLOSURE

Despite claims regarding "universal grammars" and "deep structures," it is apparent that human beings speak in a decidedly non-grammatical manner with many pauses, repetitions, incomplete sentences, irrelevant words, and so on. However, this does not prevent comprehension since the structure of the nervous system enables H. sapiens sapiens to perceptually alter word orders so they make sense, and even fill in words which are left out.

For example, when subjects heard a taped sentence in which a single syllable (gis) from the word "legislatures," had been deleted and filled in with static (i.e. le...latures), the missing syllable was not noticed. Rather, all subjects heard "legislatures" (reviewed in Farb 1975).

Similarly, when the word "tress" was played on a loop of tape 120 times per minute ("tresstresstress...") subjects reported hearing words such as dress, florists, purse, Joyce, and stress (Farb 1975). In other words they organized these into meaningful speech sounds which were then coded and perceived as words.

The ability to engage in gap filling and sequencing, and to impose temporal order on incoming (supposedly grammatical) speech, is important because human speech is not always fluent: many words are spoken in fragmentary form and sentences are usually four words or fewer. Much of it also consists of pauses and hesitations, "uh" or "err" sounds, stutters, repetitions, and stereotyped utterances such as "you know" and "like".

Hence, a considerable amount of reorganization as well as filling in must occur prior to comprehension. Therefore, these signals must be rearranged in accordance with the temporal sequential rules imposed by the structure and interaction of Wernicke's area, the inferior parietal lobe and the nervous system, what Noam Chomsky (1957) referred to as "deep structure," so that they may be understood.

Consciously, however, most people fail to realize that this filling in and reorganization has even occurred, unless directly confronted by someone who claims that she said something she believes she didn't. Nevertheless, this filling in and process of reorganization greatly enhances comprehension and communication.

UNIVERSAL GRAMMARS

Regardless of culture, race, environment, geographical location, parental verbal skills or attention, children the world over go through the same steps at the same age in learning language (Chomsky, 1972). Unlike reading and writing, the ability to talk and understand speech is innate and requires no formal training. One is born with the ability to talk, as well as the ability to see, hear, feel, and so on. However, one must receive training in reading, spelling and mathematics as these abilities are acquired only with some difficulty and much effort. On the other hand, just as one must be exposed to light or he will lose the ability to see complex forms, one must be exposed to language or he will lose the ability to talk or understand human speech.

In his book Syntactic Structures, Chomsky (1957) argues that all human beings are endowed with an innate ability to acquire language, as they are born able to speak in the same fashion, albeit according to the tongue of their culture, environment and parents. They possess all the rules which govern how language is spoken and they process and express language in accordance with these innate temporal-sequential motoric rules which we know as grammar.

Because they possess this structure, which in turn is imposed by the structure of our nervous system, children are able to learn language even when what they hear falls outside this structure and is filled with errors. That is, they tend to produce grammatically correct speech sequences, even when those around them fail to do so. They disregard errors because they are not processed by their nervous system which acts to either impose order even where there is none, or to alter or delete the message altogether. It is because humans possess these same Wernicke-inferior parietal lobe "deep structures" that speakers are also able to realize generally when a sentence is spoken in a grammatically incorrect fashion.

It is also because apes, monkeys, and dogs and cats do not possess these deep structures that they are unable to speak or produce grammatically complex sequences or gestures. Hence, their vocal production does not become punctuated by temporal-sequential gestures imposed upon auditory input or output by the Language Axis.

LANGUAGE ACQUISITION: FINE TUNING THE AUDITORY SYSTEM

The auditory cortex is organized so as to extract non-random sounds from noise, and in this manner is adaptive for perceiving and detecting animal sounds and human speech (Nelken et al., 2013). However, by the time humans have reached adulthood, they have learned to attend to certain language-related sounds and to generally ignore those linguistic features that are not common to the speaker's native tongue. Some sounds are filtered and ignored as they are irrelevant or meaningless. Humans also lose the ability to hear various sounds because of actual physical changes, such as deterioration and deafness, which occur within the auditory system.

Initially, however, beginning at birth and continuing throughout life there is a much broader range of generalized auditory sensitivity. It is this generalized sensitivity that enables children to rapidly and more efficiently learn a foreign tongue, a capacity that decreases as they age (Janet et al. 1984). It is due, in part, to these same changes that generational conflicts regarding what constitutes "music" frequently arise.

Nevertheless, since much of what is heard is irrelevant and is not employed in the language the child is exposed to, the neurons involved in mediating the perception of these sounds drop out and die from disuse, which further restricts the range of sensitivity. This further aids the fine tuning process so that, for example, one's native tongue can be learned (Janet et al. 1984; Joseph 1993).

For example, no two languages have the same set of phonemes. It is because of this that to members of some cultures certain English words, such as pet and bet, sound exactly alike. Non-English speakers are unable to distinguish between or recognize these different sound units. Via this fine tuning process, only those phonemes essential to one's native tongue are attended to.

Languages differ not only in regard to the number of phonemes, but also in the number which are devoted to vowels vs consonants and so on. Some Arabic dialects have 28 consonants and 6 vowels. By contrast, the English language consists of 45 phonemes which include 21 consonants, 9 vowels, 3 semivowels (y, w, r), 4 stresses, 4 pitches, 1 juncture (pauses between words) and 3 terminal contours which are used to end sentences (Fodor & Katz 1964; Slobin 1971).

It is from these 45 phonemes that all the sounds are derived which make up the infinity of utterances that comprise the English language. However, learning to attend selectively to these 45 phonemes, as well as to specific consonants and vowels, requires that the nervous system become fine tuned to perceiving them while ignoring others. In consequence, those cells which are unused die (Joseph 1993).
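As a quick arithmetic check on the inventory given above, the following Python sketch sums the listed categories. The dictionary keys are simply labels for the categories named in the text; nothing beyond the stated counts is assumed.

```python
# Category counts for English as listed in the text
# (attributed there to Fodor & Katz 1964; Slobin 1971).
english_phonemes = {
    "consonants": 21,
    "vowels": 9,
    "semivowels": 3,
    "stresses": 4,
    "pitches": 4,
    "juncture": 1,
    "terminal contours": 3,
}

total = sum(english_phonemes.values())
print(total)  # 45
```

The categories sum to the stated total of 45, with the segmental sounds (consonants, vowels, and semivowels) accounting for 33 of them and the remaining 12 being stress, pitch, juncture, and terminal contour.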

Children are able to learn their own as well as foreign languages with much greater ease than adults because initially infants maintain a sensitivity to a universal set of phonetic categories (e.g. Janet et al. 1984). Because of this they are predisposed to hearing and perceiving speech and anything speech-like regardless of the language employed.

These sensitivities are either enhanced or diminished during the course of the first few years of life so that those speech sounds which the child most commonly hears become accentuated and more greatly attended to, such that a sharpening of distinctions occurs (Janet et al. 1984). However, this generalized sensitivity in turn declines as a function of acquiring a particular language and presumably the loss of nerve cells not employed (see chapter 15 for related discussion). The nervous system becomes fine tuned so that familiar language-like sounds become processed and ordered in the manner dictated by the nervous system; i.e., the universal grammatical rules common to all languages.

Fine tuning is also a function of experience which in turn exerts tremendous influence on nervous system development and cell death. Hence, by the time most people reach adulthood they have long learned to categorize most of their new experiences into the categories and channels that have been relied upon for decades.

Nevertheless, in consequence of this filtering, sounds that arise naturally within one's environment can be altered, rearranged, suppressed, and thus erased. Fine tuning the auditory system so as to learn culturally significant sounds, and so that language can be acquired, occurs at a sacrifice. It occurs at the expense of one's natural awareness of the environment and its orchestra of symphonic sounds. In other words (at least from the perspective of the left hemisphere), the fundamental characteristics of reality are subject to language based alterations (Sapir 1966; Whorf 1956).

LANGUAGE AND REALITY

As detailed in chapters 2, 11, and elsewhere (Joseph 1982, 1988a, 1992b, 1993), language is a tool of consciousness, and the linguistic aspects of consciousness are dependent on the functional integrity of the left half of the brain. In that left cerebral conscious experience is, in large part, dependent on and organized in regard to linguistic and temporal-sequential modes of comprehension and expression, language can therefore shape as well as determine the manner in which perceptual reality is conceived, at least by the left hemisphere.

According to Edward Sapir (1966) "Human beings are very much at the mercy of the particular language which has become the medium of their society...the real world is to a large extent built up on the language habits of the group. No two languages are ever sufficiently similar to be considered as representing the same social reality."

According to Benjamin Whorf (1956) "language.... is not merely a reproducing instrument for voicing ideas but rather is itself the shaper of ideas... We dissect nature along lines laid down by language." However, Whorf believed that it was not just the words we use but grammar which has acted to shape human perceptions and thought. A grammatically imposed structure forces perceptions to conform to the mold which gives them not only shape, but direction and order.

Hence, distinctions imposed by temporal order and the employment of linguistically based categories and the labels and verbal associations which enable them to be described can therefore enrich as well as alter thoughts and perceptual reality. This is because language is intimately entwined with conscious experience.

For example, Eskimos possess an extensive and detailed vocabulary which enables them to make fine verbal distinctions between different types of snow, ice, and prey, such as seals. To a man born and raised in Kentucky, snow is snow and all seals may look the same. But if that same man from Kentucky had been raised around horses all his life he may in turn employ a rich and detailed vocabulary so as to describe them, e.g. appaloosa, paint, pony, stallion, and so on. However, to the Eskimo a horse may be just a horse and these other names may mean nothing to him. All horses look the same.

Moreover, both the Eskimo and the Kentuckian may be completely bewildered by the thousands of Arabic words associated with camels, the twenty or more terms for rice used by different Asiatic communities, or the seventeen words the Masai of Africa use to describe cattle.

Nevertheless, these are not just words and names, for each cultural group is also able to see, feel, taste, or smell these distinctions as well. An Eskimo sees a horse but a breeder may see a living work of art whose hair, coloring, markings, stature, tone, height, and so on speak volumes as to its character, future, and genetic endowment.

Through language one can teach others to attend to and to make the same distinctions and to create the same categories. In this way, those who share the same language and cultural group learn to see and talk about the world in the same way, whereas those speaking a different dialect may in fact perceive a different reality. Thus, the linguistic foundations of conscious experience, and thus linguistic-perceptual consciousness, can be shaped by language.

For example, when A.F. Chamberlain (1903) visited the Kootenay and Mohawk Indians of British Columbia during the late 1800s, he noted that they even heard animal and bird sounds differently from him. When listening to some owls hooting, he noted that to him it sounded like "tu-whit-tu-whit-tu-whit," whereas the Indians heard "Katskakitl." However, once he became accustomed to their language and began to speak it, he soon developed the ability to hear sounds differently and to listen with his "Indian ears." When listening to a whip-poor-will, he noted that instead of saying whip-poor-will, it was saying "kwa-kor-yeuh."

Observations such as these thus strongly suggest that if one were to change languages they might change their perceptions and even the expression of their conscious thoughts and attitudes. Consider, for example, the results from an experiment reported by Farb (1975). Bilingual Japanese-born women married to American servicemen were asked to answer the same question in English and in Japanese. The following responses were typical.

"When my wishes conflict with my family's..."

"...it is a time of great unhappiness." (Japanese)

"...I do what I want." (English)

"Real friends should...."

"...help each other." (Japanese)

"...be very frank." (English)

Obviously, language does not shape all attitudes and perceptions. Moreover, language is often a consequence of these differential perceptions, which in turn requires the invention of new linguistic labels so as to describe these new experiences. Speech of course is also filtered through the personality of the speaker, whereas the listener is also influenced by their own attitudes, feelings, beliefs, prejudices and so on, all of which can affect what is said, how it is said, and how it is perceived and interpreted (Joseph 1993).

In fact, regardless of the nature of a particular experience, be it visual, olfactory or sexual, language not only influences perceptual and conscious experiences but even the ability to derive enjoyment from them, for example, by labeling them cool, hip, sexy, bad, or sinful, and by instilling guilt or pride. In this manner, language serves not only to label and filter reality, but affects one's ability to enjoy it.

SPATIAL LOCALIZATION, ATTENTION & ENVIRONMENTAL SOUNDS

In conjunction with the inferior colliculus and the frontal lobe (Graziano et al., 2013), and due to bilateral auditory input, the primary auditory area plays a significant role in orienting to and localizing the source of various sounds (Sanchez-Longo & Forster, 1958); for example, by comparing time and intensity differences in the neural input from each ear. A sound arising from one's right will reach the right ear sooner, and sound louder to it, as compared to the left.
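The comparison of time and intensity differences between the two ears can be illustrated with a small numerical sketch. This is a toy signal-processing analogy only, not a model of how auditory neurons perform the computation. The sample rate, the synthetic click, the search window, and all function names are assumptions introduced for illustration.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed for this sketch)


def make_click(n, onset, amplitude):
    """Return n samples of silence with a short decaying burst at `onset`."""
    signal = [0.0] * n
    for k in range(20):
        if onset + k < n:
            signal[onset + k] = amplitude * math.exp(-k / 5.0)
    return signal


def best_lag(left, right, max_lag):
    """Lag (in samples) at which the left signal best matches the right one.

    A positive lag means the left ear received the sound later,
    i.e. the sound reached the right ear first.
    """
    def score(lag):
        return sum(
            left[i + lag] * right[i]
            for i in range(len(right))
            if 0 <= i + lag < len(left)
        )
    return max(range(-max_lag, max_lag + 1), key=score)


def rms(signal):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def localize(left, right, max_lag=40):
    """Return (side, time difference in seconds, level difference in dB).

    max_lag=40 samples (about 0.9 ms here) is an assumed search window.
    """
    lag = best_lag(left, right, max_lag)
    time_diff = lag / SAMPLE_RATE
    level_diff_db = 20 * math.log10(rms(right) / rms(left))
    if lag > 0 or (lag == 0 and level_diff_db > 0):
        side = "right"
    elif lag < 0 or (lag == 0 and level_diff_db < 0):
        side = "left"
    else:
        side = "center"
    return side, time_diff, level_diff_db


# A click that arrives earlier and louder at the right ear.
left_ear = make_click(400, onset=120, amplitude=0.6)
right_ear = make_click(400, onset=100, amplitude=1.0)
print(localize(left_ear, right_ear))
```

In this sketch the arrival-time cue decides the side, and the level cue is consulted only when the two arrival times coincide; that ordering is a simplification chosen for brevity.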

Indeed, among mammals, a considerable number of auditory neurons respond or become highly excited only in response to sounds from a particular location (Evans & Whitfield, 1968). Moreover, some of these neurons become excited only when the subject looks at the source of the sound (Hubel et al., 1959). Hence, these neurons act so that location may be identified and fixated upon. In addition to the frontal lobe (Graziano et al., 2013) these complex interactions probably involve the parietal area (7), as well as the midbrain colliculi and limbic system. Based on lesion studies in humans, the right temporal lobe is more involved than the left in discerning location (Nunn et al., 2013; Penfield & Evans, 1934; Ploner et al., 199; Shankweiler, 1961).

There is also some indication that certain cells in the auditory area are highly specialized and will respond only to certain meaningful vocalizations (Wollberg & Newman, 1972). In this regard they seem to be tuned to respond only to specific auditory parameters so as to identify and extract certain meaningful features, i.e. feature detector cells. For example, some cells will respond to cries of alarm and sounds suggestive of fear or indicating danger, whereas others will react only to specific sounds and vocalizations.

Nevertheless, although the left temporal lobe appears to be more involved in extracting certain linguistic features and differentiating between semantically related sounds (Schnider et al. 1994), the right temporal region is more adept at identifying and recognizing acoustically related sounds and non-verbal environmental acoustics (e.g. wind, rain, animal noises), prosodic-melodic nuances, sounds which convey emotional meaning, as well as most aspects of music including tempo and meter (Heilman et al. 1975, 1984; Joseph, 1988a; Kester et al. 1991; Schnider et al. 1994; Spinnler & Vignolo 1966).

Indeed, the right temporal lobe's spatial-localization sensitivity, coupled with its ability to perceive and recognize environmental sounds, no doubt provided great survival value to early primitive man and woman. That is, in response to a specific sound (e.g. a creeping predator), one is able to immediately identify, localize, and fixate upon the source and thus take appropriate action. Of course, even modern humans rely upon the same mechanisms to avoid cars when walking across streets or riding bicycles, or to ascertain and identify approaching individuals, etc.

PURE WORD DEAFNESS

With a destructive lesion involving the left auditory receiving area, Wernicke's area becomes disconnected from almost all sources of acoustic input and patients are unable to recognize or perceive the sounds of language, be it sentences, single words, or even single letters (Hecaen & Albert, 1978). All other aspects of comprehension are preserved, including reading, writing, and expressive speech.

Moreover, if the right temporal region is spared, the ability to recognize musical and environmental sounds is preserved. However, the ability to name or verbally describe them is impaired --due to disconnection and the inability of the right hemisphere to talk.

Pure word deafness is most common with bilateral lesions, in which case environmental sound recognition is also affected (e.g. Kazui et al. 1990). In these instances the patient is considered cortically deaf. Pure word deafness is more common with bilateral lesions simply because this prevents any remaining intact auditory areas in the left hemisphere from receiving auditory input from the right temporal lobe via the corpus callosum.

Pure word deafness, when due to a unilateral lesion of the left temporal lobe, is partly a consequence of an inability to extract temporal-sequential features from incoming sounds. Hence, linguistic messages cannot be recognized. However, pure word deafness can sometimes be partly overcome if the patient is spoken to in an extremely slowed manner (Albert & Bear, 1974). The same is true of those with Wernicke's aphasia.

AUDITORY AGNOSIA

An individual with cortical deafness, due to bilateral lesions, suffers from a generalized auditory agnosia involving words and non-linguistic sounds. However, in many instances an auditory agnosia with preserved perception of language may occur with lesions restricted to the right temporal lobe (Fujii et al., 1990). In these instances, an individual loses the capability to correctly discern environmental (e.g. birds singing, doors closing, keys jangling) and acoustically related sounds, emotional-prosodic speech, as well as music (Nielsen, 1946; Ross, 1993; Samson & Zatorre, 1988; Schnider et al. 1994; Spreen et al. 1965; Zatorre & Halpern, 1993).

These problems are less likely to come to the attention of a physician unless accompanied by secondary emotional difficulties. That is, most individuals with this disorder, being agnosic, would not know that they have a problem and thus would not complain. If they or their families notice (for example, if a patient does not respond to a knock on the door) the likelihood is that the problem will be attributed to faulty hearing or even forgetfulness.

However, because such individuals may also have difficulty discerning emotional-melodic nuances, it is likely that they will misperceive and fail to comprehend a variety of paralinguistic social-emotional messages; a condition referred to as social-emotional agnosia (Joseph, 1988a) and phonagnosia (van Lancker et al., 1988). This includes difficulty correctly identifying the voices of loved ones or friends, or discerning what others may be implying, or in appreciating emotional and contextual cues, including variables such as sincerity or mirthful intonation. Hence, a host of behavioral difficulties may arise (see chapter 10).

For example, a patient may complain that his wife no longer loves him, and that he knows this from the sound of her voice. In fact, a patient may notice that the voices of friends and family sound in some manner different, which, when coupled with difficulty discerning nuances such as humor and friendliness, may lead to the development of paranoia and what appears to be delusional thinking. Indeed, unless appropriately diagnosed it is likely that the patient's problem will feed upon and reinforce itself and grow more severe.

It is important to note that rather than being completely agnosic or word deaf, patients may suffer from only partial deficits. In these instances they may seem to be hard of hearing, frequently misinterpret what is said to them, and/or slowly develop related emotional difficulties.

THE AUDITORY ASSOCIATION AREAS

The auditory area, although originating in the depths of the superior temporal lobe, extends in a continuous belt-like fashion posteriorly from the primary and association (e.g. Wernicke's) areas toward the inferior parietal lobule, and via the arcuate fasciculus onward toward Broca's area. Indeed, in the left hemisphere, this massive rope of interconnections forms an axis linking Wernicke's area, the inferior parietal lobule, and Broca's area. These areas often become activated in parallel or simultaneously during language tasks, and together are able to mediate the perception and expression of most forms of language and speech (Joseph, 1982; Goodglass & Kaplan, 2000; Ojemann, 1991).

The rope-like arcuate and inferior and superior fasciculi are bidirectional fiber pathways, however, that run not only from Wernicke's through to Broca's area but also extend inferiorly deep into the temporal lobe where contact is established with the amygdala. Hence, via these connections auditory input comes to be assigned emotional-motivational significance, whereas verbal output becomes emotionally-melodically colored. Within the right hemisphere, these interconnections which include the amygdala appear to be more extensively developed.

WERNICKE'S AREA

Following the analysis performed in the primary auditory receiving area, auditory information is transmitted to the immediately adjacent association cortex (Brodmann's area 22), where more complex forms of processing take place. In both hemispheres this region partly surrounds the primary area and then extends posteriorly, merging with the inferior parietal lobule with which it maintains extensive interconnections. Within the left hemisphere the more posterior portion of area 22 and part of area 42 correspond to Wernicke's area.

Wernicke's area and the corresponding region in the right hemisphere are not merely auditory association areas, as they also receive a convergence of fibers from the somesthetic and visual association cortices (Jones & Powell, 1970; Zeki, 1978b). These interlinking pathways include the inferior and middle temporal lobes, which are also multi-modal areas concerned with language, somesthesis, and complex visual functioning (Buchel et al., 1998; Luders et al., 2016; Nobre et al., 1994; Price, 1997), and the immediately adjacent inferior parietal lobule, which becomes active when viewing words (Bookheimer et al., 1995; Vandenberghe et al., 2015; Menard et al., 2016; Price, 1997), when performing syllable judgements (Price, 1997), and when reading (Bookheimer et al., 1995; Menard et al., 2016; Price et al., 2011).

The auditory association area (area 22) also receives fibers from the contralateral auditory association area via the corpus callosum and anterior commissure and projects to the frontal convexity and orbital region, the frontal eye fields, and cingulate gyrus (Jones & Powell, 1970). Hence, Wernicke's area receives input from a variety of convergence zones, and is involved in multimodal as well as auditory associational analysis and becomes highly active during a variety of language tasks (Buchel et al., 1998; Price, 1997).

Broadly considered, Wernicke's area encompasses a large expanse of tissue that extends from the posterior superior and middle temporal lobe to the IPL and up and over the parietal operculum, around the sylvian fissure, extending just beyond the supramarginal gyrus. Electrical stimulation, surgical destruction, or lesions of this region result in significant language impairments (Kertesz, 1979; Penfield & Roberts, 1959; Ojemann, 1991). However, this vast expanse of cortex is actually made up of a mosaic of speech areas, each concerned with different aspects of language, including comprehension, word retrieval, naming, verbal memory, and even expressive speech.

For example, only 36% of stimulation sites in the classically defined Wernicke's area (anterior to the IPL) interfere with naming, whereas phoneme identification is significantly impaired (Ojemann, 1991). By contrast, and as noted above, the IPL (which is an extension of Wernicke's area) becomes highly active when retrieving words or engaged in naming. In this regard, the IPL and Wernicke's area interact so as to comprehend and express the sounds and words of language--all of which is then transmitted via the arcuate fasciculus to Broca's area.

RECEPTIVE APHASIA

When the posterior portion of the left auditory association area is damaged the result is severe receptive aphasia, i.e. Wernicke's aphasia. Individuals with Wernicke's aphasia, in addition to severe comprehension deficits, usually suffer abnormalities involving expressive speech, reading, writing, repeating, word finding, etc. Spontaneous speech, however, is often fluent, and sometimes hyperfluent such that they speak at an increased rate and seem unable to bring sentences to an end --as if the temporal-sequential separations between words have been extremely shortened or in some instances abolished. In addition, many of the words spoken are contaminated by neologistic and paraphasic distortions. As such, what they say is often incomprehensible (Christman 1994; Goodglass & Kaplan 2013; Hecaen & Albert, 1978). Hence, this disorder has also been referred to as fluent aphasia.

Individuals with Wernicke's aphasia, however, are still able to perceive the temporal-sequential pattern of incoming auditory stimuli to some degree (due to preservation of the primary region). Nevertheless, they are unable to perceive spoken words in their correct order, cannot identify the pattern of presentation (e.g. two short and three long taps) and become easily overwhelmed (Hecaen & Albert, 1978; Luria, 1980).

Patients with Wernicke's aphasia, in addition to other impairments, are necessarily "word deaf". As these individuals recover from their aphasia, word deafness is often the last symptom to disappear (Hecaen & Albert, 1978).

SCHIZOPHRENIA & THE TEMPORAL LOBE

It has been repeatedly reported that a significant minority of individuals with temporal lobe epilepsy suffer from prolonged and/or repeated instances of major psychopathology (Bruton et al. 1994; Buchsbaum, 1990; Falconer, 1973; Flor-Henry, 1969, 1983; Gibbs, 1951; Jensen & Larsen, 1979; Perez & Trimble 1980; Sherwin, 1977, 1981; Slater & Beard, 1963; Taylor, 1972; Trimble 1991, 2016). Conversely, those with affective disturbances, e.g. mania, have been found to suffer from seizures involving the right temporal lobe (Bear et al., 1982; Falconer & Taylor, 1970; Flor-Henry, 1969; Trimble 1991) or right frontal lobe (chapter 19), although depression may be more likely with left vs right hemisphere injuries (see chapter 11).

Estimates of psychosis and affective disorders among individuals suffering from temporal lobe epilepsy, be it a single episode or chronic psychiatric condition have varied from 2-81%. Probably a more realistic figure is 3% to 7% (see Trimble, 1991, 1992, 2016).

There are several distinct neurological explanations for the association between temporal lobe damage and psychotic and affective disorders. As discussed in detail in chapter 10, the right hemisphere is dominant for most aspects of emotional functioning. Hence, when damaged or abnormally activated, such as from a seizure or chronic subclinical seizure activity, emotional functioning becomes altered and disturbed. Moreover, since the amygdala and hippocampus are often secondarily aroused (or hyperaroused), the possibility of abnormal emotionality is enhanced even further.

In this regard, Gloor et al. (1982), in a depth electrode stimulation study of patients suffering from epilepsy, presented evidence indicating that all experiential and emotional alterations were encountered following right amygdala and hippocampal activation, with the majority of phenomena elicited from the amygdala. As discussed in chapter 13, the limbic system seems to be lateralized and the right amygdala and hippocampus appear to be more involved in emotional functioning. Hence, abnormal activation of these nuclei (such as during the course of a temporal lobe seizure) is more likely to give rise to abnormal emotional reactions, with the exception of depression, which is common with left frontal and left temporal lobe injuries.

In addition, some individuals with temporal lobe seizures may complain of emotional blunting. Hence, rather than a limbic hyperconnection, these individuals suffer from a disconnection; similar to what occurs with a left frontal injury.

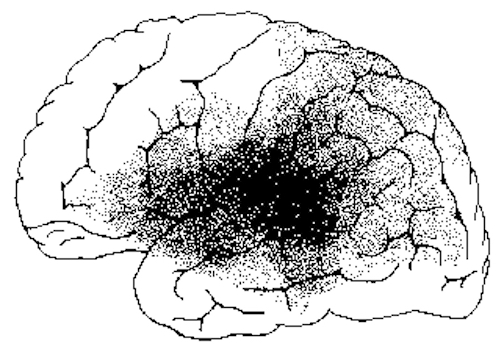

It has also been repeatedly demonstrated in histological studies that the temporal lobes of schizophrenics are characterized by excessive focal neuronal damage including gliosis (Akbarian et al. 1993; Bruder, et al., 2013; Bruton 1988; Bruton et al. 1990; Harrison, 2013; Kwon et al., 2013; Stevens 1982; Taylor 1972). Hence, these patients may experience auditory hallucinations and are more likely to demonstrate formal thought disorders and aphasic thinking including the production of neologisms and syntactical speech errors (Kwon et al., 2013), as well as emotional (or thought) blocking or blunting due to limbic system-temporal lobe disconnection (Perez & Trimble, 1980; Slater & Beard, 1963). As often occurs with receptive aphasia and left temporal lobe dysfunction, individuals suffering from schizophrenia may be unaware of their illness (Amador et al. 1994; Lysaker, et al., 2013; Wilson et al. 1986).

Hence, certain subgroups of schizophrenics display significant abnormalities involving speech processing, such that the semantic, temporal-sequential, and lexical aspects of speech organization and comprehension are disturbed and deviantly constructed (Chaika, 1982; Clark et al. 1994; Flor-Henry, 1983; Hoffman, 1986; Hoffman et al. 1986; Rutter, 1979). Indeed, significant similarities between schizophrenic discourse and aphasic abnormalities have been reported (Faber, Abrams, Taylor, Kasprisin et al. 1983). It is often difficult to determine what a schizophrenic individual may be talking about, particularly in that some of these individuals complain that what they say often differs from what they intended to say (Chapman, 1966). Temporal-sequential (i.e. syntactical) abnormalities have also been noted in their ability to reason. The classic example being: "I am a virgin; the Virgin Mary was a virgin; therefore I am the Virgin Mary."

Hence, there is considerable evidence indicating that a significant relationship exists between abnormal left hemisphere and left temporal lobe functioning and schizophrenic language, thought and behavior. As detailed in chapter 19, the left frontal region and medial frontal cortices are also implicated in the genesis of schizophrenic-like abnormalities including catatonia.

However, in contrast to those with frontal lobe schizophrenia who are more likely to present with "negative" symptoms and emotional blunting, temporal lobe schizophrenics are likely to present with "positive" symptoms, including retention of the capacity to experience and express "warmth" (Crow, 1980; Crowe & Kuttner 1991). Emotional expression, however, tends to be abnormal, inappropriate or incongruent, and/or exaggerated (Bear & Fedio, 1977; Crow, 1980; Crowe & Kuttner 1991).

It has also been repeatedly reported that irritative lesions which more greatly involve the lateral convexity of the left temporal lobe are the most likely to result in schizophrenic-like disturbances (DeLisi et al. 1991; Dauphinais et al. 1990; Flor-Henry 1983; Harrison, 2013; Perez et al. 1985; Rossi et al. 1990, 1991; Sherwin 1981). Given the role of the temporal lobe in language (and the fact that the superior temporal lobe is derived from amygdala and hippocampal tissue) it is also not uncommon for these individuals to demonstrate aphasic abnormalities in their thought and speech (Chaika, 1982; Clark et al. 1994; Flor-Henry, 1983; Hoffman, 1986; Hoffman et al. 1986; Perez & Trimble, 1980; Rutter, 1979; Slater & Beard, 1963).

However, the type and form of temporal lobe schizophrenia may differ depending on which regions have been compromised. In some instances the amygdala and hippocampus are involved (Bogerts et al. 1985; Brown et al. 1986; Falkai & Bogerts 1986), in which case memory and emotional functioning may be most severely affected, and patients may experience visual hallucinations. Temporal lobe schizophrenics with limbic involvement may behave violently and aggressively (Crow & Kuttner 1991; Lewis et al. 1982; Taylor 1969). They may also experience extreme feelings of religiousness (Bear, 1979; Trimble, 1991, 1992), fear, paranoia, and depression (Crow & Kuttner 1991; Perez & Trimble, 1980; Trimble, 1991, 1992), as well as hallucinations.

[-INSERT FIGURE 26 ABOUT HERE-]

APHASIA & PSYCHOSIS

Since the left temporal lobe is intimately involved in all aspects of language comprehension as well as the organization of linguistic expression, altered neocortical activity involving this region is likely to result in significant disruptions in language functioning and the formulation of linguistic thought. As described in chapter 11, individuals with left temporal lobe damage and Wernicke's aphasia are likely to be diagnosed as suffering from a formal thought disorder due to their fluent aphasic language disturbances and their failure to comprehend.

Conversely, several authors have noted significant similarities between schizophrenic discourse and aphasia (Faber et al. 1983; Hillbom, 1951), such that the semantic, temporal-sequential, and lexical aspects of speech organization and comprehension are disturbed and deviantly constructed (Chaika, 1982; Flor-Henry, 1983; Hoffman, 1986; Hoffman, Stopek & Andreasen, 1986; Rutter, 1979). Similar findings have been reported for individuals developing post-traumatic schizophrenic-like symptoms following head injury and missile wounds (Hillbom, 1951). Moreover, just as some aphasic individuals may be unaware of their disorder, similar findings have been noted with schizophrenics and those with schizoaffective disorders (Amador et al. 1994).

TEMPORAL LOBE SEIZURES, PSYCHOSIS & APHASIA

Patients with left temporal seizures commonly become globally aphasic during the course of their seizure. When spoken to or if their name is repeatedly called they may make only fleeting eye contact, grunt or utter partial words such as "huh?" Some individuals remain aphasic for seconds to minutes after seizure termination as well.

Language impairments, verbal memory disorders and associated linguistic deficiencies are usually apparent even between seizures if adequately assessed. Moreover, individuals with left sided foci tend to have lower (WAIS-R) Verbal IQ's as compared to their Performance IQ's and as compared to those with right sided foci. This is not surprising as language is represented in the left hemisphere. However, in some cases, excessive activity within the left (or right) temporal (or frontal) lobe can actually result in what appears to be enhanced functional activity.

It is possible that abnormal activity in the left temporal lobe, as well as the presence of an active lesion, can result in significant alterations in not only language, but the organization of linguistic thought such that the patient appears psychotic. In this regard, abnormal thought formation may become a characteristic pattern. In fact, even the neuroanatomical representation of language can be drastically altered due to left cerebral damage (Joseph, 1986a; Novelly & Joseph, 1983). Moreover, if the left temporal region is periodically disconnected from centers mediating emotion, patients may demonstrate what appears to be emotional blunting as well as a formal thought disorder.

BETWEEN SEIZURE PSYCHOSES

The range of disturbances among those with temporal lobe epilepsy includes a strikingly high rate of sexual aberration as well as hyposexuality, aggressiveness, paranoia, depression, deepening of emotion, intensification of religious concerns, disorders of thought, depersonalization, hypergraphia, complex visual and auditory hallucinations and schizophrenia (Bear, 1982; Bear et al., 1982; Flor-Henry, 1969, 1983; Sherwin, 1977, 1981; Schiff et al., 1982; Stevens et al. 1979; Taylor, 1975). However, this association has also been disputed (see Gold et al. 1994).

Frequently the seizure related disturbances in emotional functioning wax and wane, such that some patients experience islands of normality followed by islands of psychosis. Possibly these changes are a function of variations in subclinical seizure activity and kindling.

In some instances, the psychiatric disturbance may develop over days as a prelude to the actual onset of a seizure, due presumably to increasing levels of abnormal activity until the seizure is triggered. In other cases, particularly in regard to depression, the alterations in personality and emotionality may occur following the seizure and may persist for weeks or even months (Trimble 1991, 2016).

In yet other instances, patients may act increasingly bizarre for weeks, experience a seizure, and then behave in a normal fashion for some time ("forced normalization", Flor-Henry 1983), only to again begin acting increasingly bizarre. This has been referred to as an inter-ictal (between seizure) psychosis and is possibly secondary to a build up of abnormal activity in the temporal lobes and limbic system (Bear, 1979; Flor-Henry 1969, 1983). Hence, once the seizure occurs, the level of abnormal activity is decreased and the psychosis goes away, only to gradually return as abnormal activity again builds up.

Caveats

Some authors have argued that there is no significant relationship between these psychotic disorders and temporal lobe epilepsy (Gold et al. 1994; Stevens & Hermann, 1981), whereas others have drawn attention to possible social-developmental contributions (Matthews et al., 1982). That is, growing up with a seizure disorder, feeling victimized, not knowing when a seizure may strike, loss of control over one's life, etc. can independently create significant emotional aberrations.

It is also important to emphasize that it is probably only a subpopulation of individuals with temporal lobe epilepsy who come to the attention of most researchers; e.g. those with the most serious or intractable problems. Thus one should not view an individual with temporal lobe (or any type of) epilepsy as immediately suspect for emotional-psychotic abnormalities. Nevertheless, it is noteworthy that not only is there an association between the temporal lobe and certain forms of schizophrenia, but that temporal lobe abnormalities have been noted across generations of individuals and families afflicted with psychotic disturbances (Honer et al. 1994).